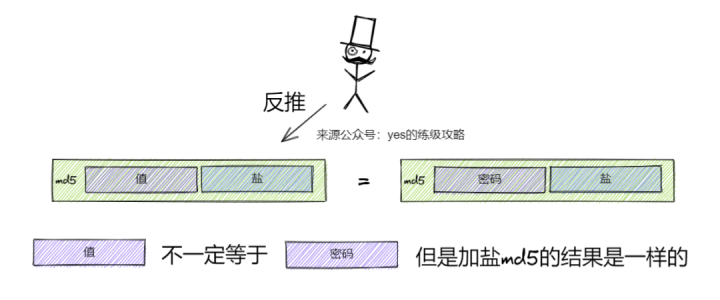

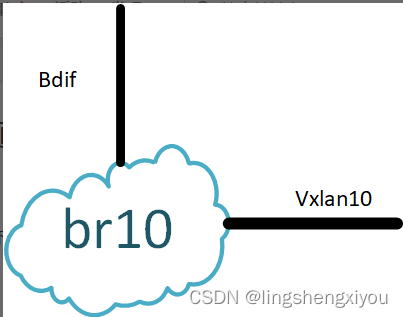

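Before studying the OVS VXLAN implementation, let us first review how a traditional VTEP device processes VXLAN packets, as shown in the figure:

After a VXLAN packet enters a switch port, the tunnel is terminated based on the packet's header information. After termination, the overlay mapping is derived from the underlay information, which yields the overlay BD (bridge domain) and VRF. In the figure, the packet enters br10 through vxlan10 after tunnel termination, which binds the overlay packet to br10 and its bdif. br10 performs same-subnet FDB forwarding. If the overlay packet's destination MAC is the bdif's MAC, the packet enters the VRF that the bdif belongs to, through the bdif, for layer-3 routing. This is how a VTEP processes a VXLAN packet it receives.

In the other direction, if the destination BD of an overlay packet after overlay routing is br10, the packet enters br10 from the bdif and, after the FDB lookup, is output through vxlan10. The vxlan10 interface builds the VXLAN encapsulation. The encapsulated packet then goes through underlay routing and leaves the VTEP.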


VTEP building blocks

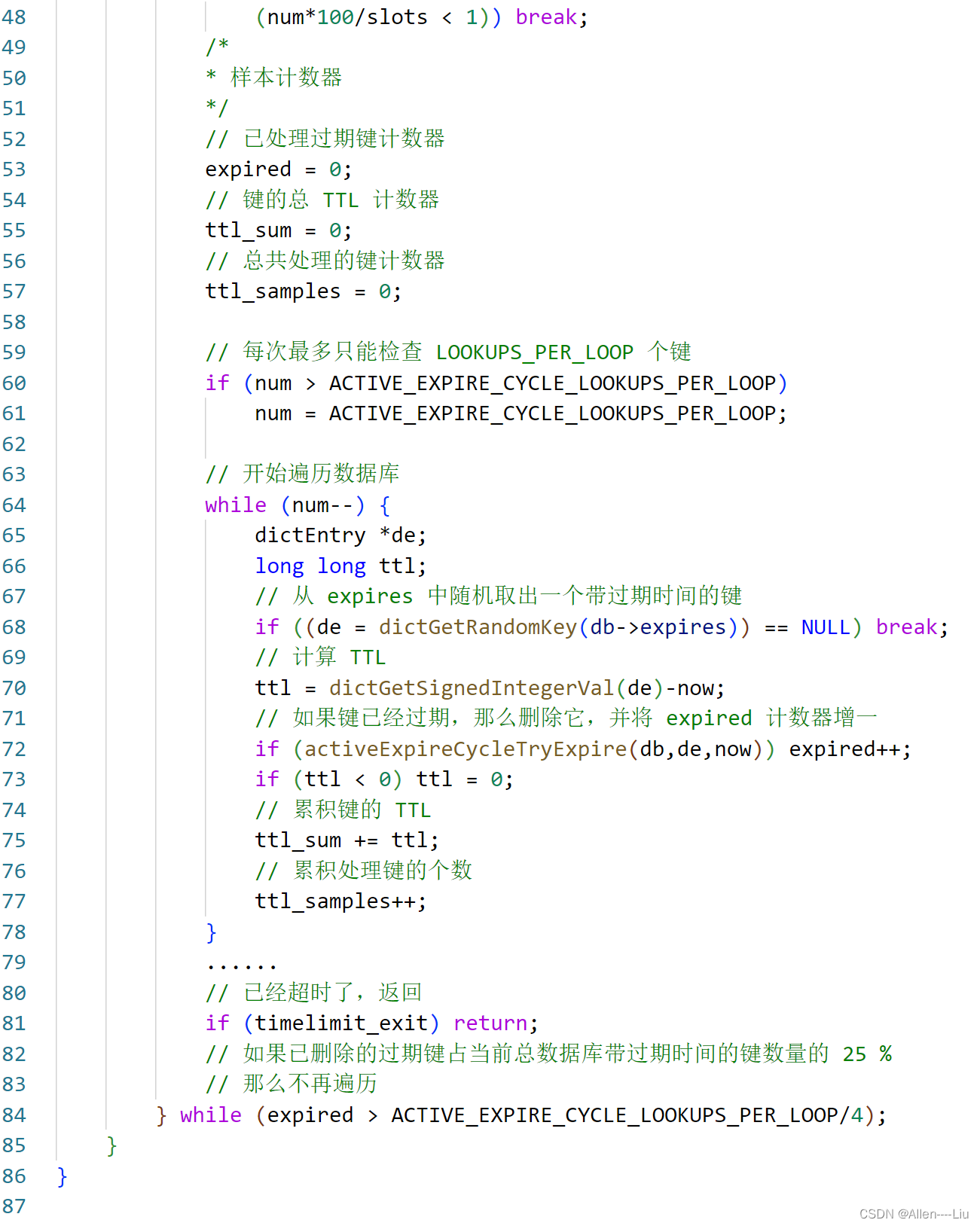

tunnel-terminate table

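The tunnel termination table is used to strip the underlay headers from a VXLAN packet.

A VXLAN packet entering the VTEP must undergo tunnel termination. VXLAN is a P2MP (point-to-multipoint) tunnel, so termination only needs to verify that the destination IP is a local IP (the destination MAC is necessarily local). Before termination, of course, the device must determine whether the packet is a VXLAN packet at all. Tunnel termination generally takes one of two forms:

- As in the Linux kernel: the packet is processed as an ordinary packet regardless of whether it is VXLAN. Because its destination IP is local, it is delivered to the local UDP layer, which recognizes destination port 4789 and hands the packet to the vxlan port. The overlay packet then re-enters the protocol stack from the vxlan device for a second pass.

- As traditional hardware vendors do: the parser stage extracts both the outer and inner headers up front, and a VXLAN packet goes straight to the tunnel-terminate table for termination.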
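The termination check described above can be sketched in a few lines of C. This is only an illustration under stated assumptions: `struct outer_hdr`, `vxlan_should_terminate` and the local address list are hypothetical names, the fields are assumed to be already parsed into host byte order, and the sketch is not taken from the kernel or from any vendor pipeline.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define IPPROTO_UDP_NUM 17      /* IP protocol number for UDP. */
#define VXLAN_UDP_PORT  4789    /* IANA-assigned VXLAN destination port. */

/* Hypothetical, already-parsed outer header fields (host byte order). */
struct outer_hdr {
    uint32_t dst_ip;
    uint8_t  ip_proto;
    uint16_t udp_dst_port;
};

/* Returns true if the packet should be handed to tunnel termination:
 * it must be UDP to the VXLAN port and addressed to one of our IPs. */
static bool
vxlan_should_terminate(const struct outer_hdr *h,
                       const uint32_t *local_ips, size_t n_local)
{
    assert(h != NULL);

    if (h->ip_proto != IPPROTO_UDP_NUM || h->udp_dst_port != VXLAN_UDP_PORT) {
        return false;               /* Not a VXLAN packet. */
    }
    for (size_t i = 0; i < n_local; i++) {
        if (local_ips[i] == h->dst_ip) {
            return true;            /* Destination IP is local. */
        }
    }
    return false;
}
```

Note that no remote (source) IP is checked, which reflects the point-to-multipoint nature of the tunnel.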

tunnel-decap-map table

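The tunnel decapsulation mapping table determines the layer-2 broadcast domain and the layer-3 routing domain of the overlay packet, that is, its BD and VRF.

Because a VXLAN tunnel is point-to-multipoint, the VNI can be mapped to the BD and VRF, which are then used for same-subnet FDB forwarding and cross-subnet routing of overlay packets.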
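A minimal sketch of such a mapping, assuming a small static table keyed by VNI. The names `decap_map_entry` and `decap_map_lookup` are made up for illustration; real devices would typically use a hash or TCAM table rather than a linear scan.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical decap-map entry: one VNI maps to one BD and one VRF. */
struct decap_map_entry {
    uint32_t vni;
    uint16_t bd;
    uint16_t vrf;
};

/* Returns the entry for 'vni', or NULL if the VNI is not configured. */
static const struct decap_map_entry *
decap_map_lookup(const struct decap_map_entry *tbl, size_t n, uint32_t vni)
{
    assert(tbl != NULL || n == 0);

    for (size_t i = 0; i < n; i++) {
        if (tbl[i].vni == vni) {
            return &tbl[i];
        }
    }
    return NULL;
}
```

A miss would normally mean the packet is dropped, since there is no broadcast domain to forward the inner frame in.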

tunnel-encap table

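The tunnel encapsulation table applies the VXLAN encapsulation to packets after FDB forwarding or routing. For same-subnet traffic only the VNI, the underlay source IP and the underlay destination IP need to be determined, and in general the VNI does not change for same-subnet forwarding. Cross-subnet forwarding relies on routing, which determines overlay-smac and overlay-dmac. How the VNI, underlay-sip and underlay-dip are chosen differs considerably between forwarding models. In the traditional model the overlay route only routes and then fills in the link layer; the packet leaves through a bdif that is attached to a bridge, and the bridge's VNI becomes the packet's VNI. Some vendors instead let the route decide the VNI, underlay-sip and underlay-dip directly; see the SAI interface design in SONiC for details.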

underlay route table

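Once encapsulated, the packet is routed in the underlay, so an underlay route table is required. Traditional network devices attach an underlay RIF to the VXLAN tunnel and use that RIF to select the underlay VRF.

underlay neighbor

After underlay routing, a neighbor entry is needed to build the underlay link-layer header, so a neighbor table is required.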

OVS DPDK VXLAN

To act as a VTEP, OVS has to implement all of the elements above.

Main data structures

    struct tnl_match {
        ovs_be64 in_key;            /* VNI. */
        struct in6_addr ipv6_src;   /* Source IP. */
        struct in6_addr ipv6_dst;   /* Destination IP. */
        odp_port_t odp_port;        /* Datapath port number. */
        bool in_key_flow;           /* false: the VNI must match exactly;
                                     * true: the flow table sets the VNI. */
        bool ip_src_flow;           /* false: the source IP must match (a
                                     * source IP of 0 matches any source IP);
                                     * true: the OpenFlow flow table handles
                                     * the tunnel source IP. */
        bool ip_dst_flow;           /* false: the destination IP must match;
                                     * true: the OpenFlow flow table sets the
                                     * tunnel destination IP. */
    };

    struct tnl_port {
        struct hmap_node ofport_node;
        struct hmap_node match_node;

        const struct ofport_dpif *ofport;
        uint64_t change_seq;
        struct netdev *netdev;

        struct tnl_match match;     /* Tunnel identity; uniquely identifies
                                     * a tunnel. */
    };

    /* OVS stores vxlan ports in hash tables:
     *
     * Each hmap contains "struct tnl_port"s.
     * The index is a combination of how each of the fields listed under "Tunnel
     * matches" above matches, see the final paragraph for ordering.
     *
     * This is the vxlan port map. The three parameters in_key_flow,
     * ip_dst_flow and ip_src_flow divide it into 12 priorities. */
    static struct hmap *tnl_match_maps[N_MATCH_TYPES] OVS_GUARDED_BY(rwlock);

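Details

struct tnl_match is the core structure of a vxlan port. in_key, ipv6_src and ipv6_dst are the core members of the VXLAN encapsulation. A traditional VTEP's vxlan interface needs them as well, and they are used for both tunnel termination and tunnel encapsulation. OVS, however, adds three important flags:

- in_key_flow: two values. false means the tunnel VNI is set when the vxlan port is created; that value is matched during tunnel termination and is used for encapsulation when a packet leaves through this port, so ports with false have higher priority. true means termination does not match on the VNI: the flow table handles the VNI on receive and sets it on encapsulation.

- ip_src_flow: three cases. When it is false there are two cases: if a local IP is configured, tunnel termination matches it against the destination IP of the VXLAN packet, and encapsulation uses it as the tunnel source IP of packets leaving through this port; if the configured IP is 0, any IP is accepted. When it is true, the source IP is handled by the flow table.

- ip_dst_flow: two values. false means tunnel termination must match the packet's source IP against the configured remote IP, and packets output through this port use that IP as the tunnel destination IP. true means both are handled by flows.

Examples of creating vxlan ports: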

    admin@ubuntu:$ sudo ovs-vsctl add-port br0 vxlan1 -- set interface vxlan1 type=vxlan options:remote_ip=flow options:key=flow options:dst_port=8472 options:local_ip=flow
    admin@ubuntu:$ sudo ovs-vsctl add-port br0 vxlan2 -- set interface vxlan2 type=vxlan options:remote_ip=flow options:key=flow options:dst_port=8472
    admin@ubuntu:/var/log/openvswitch$ sudo ovs-vsctl add-port br0 vxlan13 -- set interface vxlan13 type=vxlan options:remote_ip=flow options:key=191 options:dst_port=8472

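OVS is an SDN switch whose core behavior is driven by OpenFlow flow tables. The three flags were introduced precisely to remove the restrictions of a traditional vxlan device. Depending on their values there are 2*2*3 = 12 combinations, which is the size of the tnl_match_maps array.

Tunnel port lookup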

    /* Returns a pointer to the 'tnl_match_maps' element corresponding to 'm''s
     * matching criteria.
     *
     * The three flags and the configuration determine the priority, i.e. the
     * map index. This function is called when a vxlan port is added, to decide
     * which map the port joins. */
    static struct hmap **
    tnl_match_map(const struct tnl_match *m)
    {
        enum ip_src_type ip_src;

        ip_src = (m->ip_src_flow ? IP_SRC_FLOW
                  : ipv6_addr_is_set(&m->ipv6_src) ? IP_SRC_CFG
                  : IP_SRC_ANY);

        return &tnl_match_maps[6 * m->in_key_flow + 3 * m->ip_dst_flow + ip_src];
    }

    /* Returns the tnl_port that is the best match for the tunnel data in 'flow',
     * or NULL if no tnl_port matches 'flow'.
     *
     * Tunnel termination: look up the vxlan port that corresponds to the
     * packet's tunnel information. */
    static struct tnl_port *
    tnl_find(const struct flow *flow) OVS_REQ_RDLOCK(rwlock)
    {
        enum ip_src_type ip_src;
        int in_key_flow;
        int ip_dst_flow;
        int i;

        i = 0;
        /* in_key_flow has the highest priority; 0 ranks above 1. */
        for (in_key_flow = 0; in_key_flow < 2; in_key_flow++) {
            /* ip_dst_flow comes next; it concerns the source IP of the
             * received VXLAN packet. */
            for (ip_dst_flow = 0; ip_dst_flow < 2; ip_dst_flow++) {
                /* ip_src has the lowest priority; see IP_SRC_CFG for the
                 * possible values. */
                for (ip_src = 0; ip_src < 3; ip_src++) {
                    struct hmap *map = tnl_match_maps[i];

                    if (map) {
                        struct tnl_port *tnl_port;
                        struct tnl_match match;

                        memset(&match, 0, sizeof match);

                        /* The apparent mix-up of 'ip_dst' and 'ip_src' below is
                         * correct, because "struct tnl_match" is expressed in
                         * terms of packets being sent out, but we are using it
                         * here as a description of how to treat received
                         * packets.
                         *
                         * When in_key_flow is true, the VNI is not matched. */
                        match.in_key = in_key_flow ? 0 : flow->tunnel.tun_id;
                        if (ip_src == IP_SRC_CFG) {
                            match.ipv6_src = flow_tnl_dst(&flow->tunnel);
                        }
                        if (!ip_dst_flow) {
                            match.ipv6_dst = flow_tnl_src(&flow->tunnel);
                        }
                        match.odp_port = flow->in_port.odp_port;
                        match.in_key_flow = in_key_flow;
                        match.ip_dst_flow = ip_dst_flow;
                        match.ip_src_flow = ip_src == IP_SRC_FLOW;

                        /* Exact-match lookup. */
                        tnl_port = tnl_find_exact(&match, map);
                        if (tnl_port) {
                            return tnl_port;
                        }
                    }

                    i++;
                }
            }
        }

        return NULL;
    }

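Advantages

With these three flags, OVS greatly simplifies vxlan port configuration: a single global vxlan port is enough for an application, and the remaining parameters are driven by the flow table. This plays to the strengths of SDN and scales to large deployments.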

terminate table

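OVS builds its tunnel termination table when a vxlan port is created.

    /* Global tunnel state initialization. OVS uses a classifier, cls, as the
     * tunnel termination table. The global addr_list holds all local underlay
     * IP addresses. An underlay IP later serves as the destination address for
     * tunnel termination and as the source IP for tunnel encapsulation.
     * port_list holds the tunnels that use a transport-layer port, such as
     * VXLAN tunnels. */
    void
    tnl_port_map_init(void)
    {
        classifier_init(&cls, flow_segment_u64s);   /* Tunnel termination table. */
        ovs_list_init(&addr_list);                  /* Underlay IP list. */
        ovs_list_init(&port_list);                  /* tnl_port control block list. */
        unixctl_command_register("tnl/ports/show", "-v", 0, 1, tnl_port_show, NULL);
    }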

Adding a tunnel port

    /* Adds 'ofport' to the module with datapath port number 'odp_port'. 'ofport's
     * must be added before they can be used by the module. 'ofport' must be a
     * tunnel.
     *
     * Returns 0 if successful, otherwise a positive errno value.
     *
     * native_tnl indicates whether native tunnel termination is enabled. */
    int
    tnl_port_add(const struct ofport_dpif *ofport, const struct netdev *netdev,
                 odp_port_t odp_port, bool native_tnl, const char name[])
        OVS_EXCLUDED(rwlock)
    {
        bool ok;

        fat_rwlock_wrlock(&rwlock);
        ok = tnl_port_add__(ofport, netdev, odp_port, true, native_tnl, name);
        fat_rwlock_unlock(&rwlock);

        return ok ? 0 : EEXIST;
    }

    /* Adds a tunnel port. */
    static bool
    tnl_port_add__(const struct ofport_dpif *ofport, const struct netdev *netdev,
                   odp_port_t odp_port, bool warn, bool native_tnl,
                   const char name[])
        OVS_REQ_WRLOCK(rwlock)
    {
        const struct netdev_tunnel_config *cfg;
        struct tnl_port *existing_port;
        struct tnl_port *tnl_port;
        struct hmap **map;

        cfg = netdev_get_tunnel_config(netdev);
        ovs_assert(cfg);

        tnl_port = xzalloc(sizeof *tnl_port);
        tnl_port->ofport = ofport;
        tnl_port->netdev = netdev_ref(netdev);
        tnl_port->change_seq = netdev_get_change_seq(tnl_port->netdev);

        /* These parameters do not affect tunnel termination. */
        tnl_port->match.in_key = cfg->in_key;
        tnl_port->match.ipv6_src = cfg->ipv6_src;
        tnl_port->match.ipv6_dst = cfg->ipv6_dst;
        tnl_port->match.ip_src_flow = cfg->ip_src_flow;
        tnl_port->match.ip_dst_flow = cfg->ip_dst_flow;
        tnl_port->match.in_key_flow = cfg->in_key_flow;
        tnl_port->match.odp_port = odp_port;

        /* Find the map that corresponds to the tunnel's match criteria. */
        map = tnl_match_map(&tnl_port->match);
        /* Check whether an identical port already exists. */
        existing_port = tnl_find_exact(&tnl_port->match, *map);
        if (existing_port) {
            if (warn) {
                struct ds ds = DS_EMPTY_INITIALIZER;
                tnl_match_fmt(&tnl_port->match, &ds);
                VLOG_WARN("%s: attempting to add tunnel port with same config as "
                          "port '%s' (%s)", tnl_port_get_name(tnl_port),
                          tnl_port_get_name(existing_port), ds_cstr(&ds));
                ds_destroy(&ds);
            }
            netdev_close(tnl_port->netdev);
            free(tnl_port);
            return false;
        }

        hmap_insert(ofport_map, &tnl_port->ofport_node, hash_pointer(ofport, 0));

        if (!*map) {
            *map = xmalloc(sizeof **map);
            hmap_init(*map);
        }
        hmap_insert(*map, &tnl_port->match_node, tnl_hash(&tnl_port->match));
        tnl_port_mod_log(tnl_port, "adding");

        if (native_tnl) {
            /* With native tunneling enabled, build the tunnel termination
             * table. In general this is needed in DPDK mode and not in kernel
             * mode. */
            const char *type;

            type = netdev_get_type(netdev);
            tnl_port_map_insert(odp_port, cfg->dst_port, name, type);
        }
        return true;
    }

    /* For tunnels that need a transport layer, handle the destination port. */
    void
    tnl_port_map_insert(odp_port_t port, ovs_be16 tp_port,
                        const char dev_name[], const char type[])
    {
        struct tnl_port *p;
        struct ip_device *ip_dev;
        uint8_t nw_proto;

        nw_proto = tnl_type_to_nw_proto(type);
        if (!nw_proto) {
            /* No transport layer needed: return directly. */
            return;
        }

        /* Add the tunnel port to the list. The author of this article
         * considers the check below a bug: it should compare
         * p->port == port rather than tp_port == p->tp_port, i.e. the
         * condition should be (p->port == port && p->nw_proto == nw_proto). */
        ovs_mutex_lock(&mutex);
        LIST_FOR_EACH(p, node, &port_list) {
            if (tp_port == p->tp_port && p->nw_proto == nw_proto) {
                goto out;
            }
        }

        p = xzalloc(sizeof *p);
        p->port = port;
        p->tp_port = tp_port;
        p->nw_proto = nw_proto;
        ovs_strlcpy(p->dev_name, dev_name, sizeof p->dev_name);
        ovs_list_insert(&port_list, &p->node);

        /* Walk every local device IP address and build a tunnel termination
         * entry for it. Termination needs: the transport protocol, the
         * transport destination port, and the local IP (the destination IP of
         * the VXLAN packet). */
        LIST_FOR_EACH(ip_dev, node, &addr_list) {
            map_insert_ipdev__(ip_dev, p->dev_name, p->port, p->nw_proto,
                               p->tp_port);
        }

    out:
        ovs_mutex_unlock(&mutex);
    }

    /* ip_dev: source device.
     * dev_name: device name.
     * port: port number.
     * nw_proto, tp_port: protocol and transport port. */
    static void
    map_insert_ipdev__(struct ip_device *ip_dev, char dev_name[],
                       odp_port_t port, uint8_t nw_proto, ovs_be16 tp_port)
    {
        if (ip_dev->n_addr) {
            /* Walk every address of the device. */
            int i;

            for (i = 0; i < ip_dev->n_addr; i++) {
                /* The packet's destination MAC must be ip_dev->mac. */
                map_insert(port, ip_dev->mac, &ip_dev->addr[i],
                           nw_proto, tp_port, dev_name);
            }
        }
    }

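Tunnel termination process

Having seen how the tunnel termination table is built, let us look at how OVS actually performs tunnel termination.

By design OVS processes only one layer of headers: parsing stops at the transport layer, so OVS cannot see the overlay information. When a VXLAN packet arrives at OVS from a DPDK port, its destination MAC is the MAC of the internal port and its destination IP is the IP of the internal port. Forwarding with the NORMAL rule sends the packet to the internal port, and tunnel termination happens while the OUTPUT action is being composed.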

    /* Compose the packet output action. */
    static void
    compose_output_action(struct xlate_ctx *ctx, ofp_port_t ofp_port,
                          const struct xlate_bond_recirc *xr)
    {
        /* check_stp is true: STP state has to be checked. */
        compose_output_action__(ctx, ofp_port, xr, true);
    }

    /* Compose the packet output action. */
    static void
    compose_output_action__(struct xlate_ctx *ctx, ofp_port_t ofp_port,
                            const struct xlate_bond_recirc *xr, bool check_stp)
    {
        const struct xport *xport = get_ofp_port(ctx->xbridge, ofp_port); /* Look up the xport. */
        struct flow_wildcards *wc = ctx->wc;    /* Flow wildcards. */
        struct flow *flow = &ctx->xin->flow;    /* Input flow. */
        struct flow_tnl flow_tnl;
        ovs_be16 flow_vlan_tci;
        uint32_t flow_pkt_mark;
        uint8_t flow_nw_tos;
        odp_port_t out_port, odp_port;
        bool tnl_push_pop_send = false;
        uint8_t dscp;

        ......

        if (out_port != ODPP_NONE) {    /* Output translation. */
            xlate_commit_actions(ctx);  /* Commit the translated actions. */

            if (xr) {   /* A bond recirculation is required. */
                struct ovs_action_hash *act_hash;

                /* Hash action. */
                act_hash = nl_msg_put_unspec_uninit(ctx->odp_actions,
                                                    OVS_ACTION_ATTR_HASH,
                                                    sizeof *act_hash);
                act_hash->hash_alg = xr->hash_alg;
                act_hash->hash_basis = xr->hash_basis;

                /* Recirc action: add the recirculation action and set the
                 * recirculation id. */
                nl_msg_put_u32(ctx->odp_actions, OVS_ACTION_ATTR_RECIRC,
                               xr->recirc_id);
            } else {
                if (tnl_push_pop_send) {    /* A tunnel header push is needed. */
                    build_tunnel_send(ctx, xport, flow, odp_port);
                    flow->tunnel = flow_tnl; /* Restore tunnel metadata. */
                } else {
                    odp_port_t odp_tnl_port = ODPP_NONE;

                    /* XXX: Write better Filter for tunnel port. We can use inport
                     * int tunnel-port flow to avoid these checks completely.
                     *
                     * If the packet is destined for LOCAL, check whether native
                     * tunneling is enabled and, if so, terminate the tunnel. */
                    if (ofp_port == OFPP_LOCAL &&
                        ovs_native_tunneling_is_on(ctx->xbridge->ofproto)) {
                        odp_tnl_port = tnl_port_map_lookup(flow, wc);
                    }

                    if (odp_tnl_port != ODPP_NONE) {
                        nl_msg_put_odp_port(ctx->odp_actions,
                                            OVS_ACTION_ATTR_TUNNEL_POP,
                                            odp_tnl_port);
                    } else {
                        /* Tunnel push-pop action is not compatible with
                         * IPFIX action. */
                        compose_ipfix_action(ctx, out_port);

                        /* Handle truncation of the mirrored packet. */
                        if (ctx->mirror_snaplen > 0 &&
                            ctx->mirror_snaplen < UINT16_MAX) {
                            struct ovs_action_trunc *trunc;

                            trunc = nl_msg_put_unspec_uninit(ctx->odp_actions,
                                                             OVS_ACTION_ATTR_TRUNC,
                                                             sizeof *trunc);
                            trunc->max_len = ctx->mirror_snaplen;
                            if (!ctx->xbridge->support.trunc) {
                                ctx->xout->slow |= SLOW_ACTION;
                            }
                        }

                        nl_msg_put_odp_port(ctx->odp_actions,
                                            OVS_ACTION_ATTR_OUTPUT,
                                            out_port);
                    }
                }
            }

            ctx->sflow_odp_port = odp_port;
            ctx->sflow_n_outputs++;
            ctx->nf_output_iface = ofp_port;    /* Record the output interface. */
        }

        /* Egress mirroring. */
        if (mbridge_has_mirrors(ctx->xbridge->mbridge) && xport->xbundle) {
            /* The bridge has mirrors and this is an output port: mirror the
             * packet according to the port's mirror policy. */
            mirror_packet(ctx, xport->xbundle,
                          xbundle_mirror_dst(xport->xbundle->xbridge,
                                             xport->xbundle));
        }

    out:
        /* Restore flow: values written into the actions must be restored. */
        flow->vlan_tci = flow_vlan_tci;
        flow->pkt_mark = flow_pkt_mark;
        flow->nw_tos = flow_nw_tos;
    }

    /* 'flow' is non-const to allow for temporary modifications during the lookup.
     * Any changes are restored before returning. */
    odp_port_t
    tnl_port_map_lookup(struct flow *flow, struct flow_wildcards *wc)
    {
        /* Classifier lookup that implements tunnel termination. */
        const struct cls_rule *cr = classifier_lookup(&cls, OVS_VERSION_MAX, flow,
                                                      wc);

        /* Return the tunnel port number. */
        return (cr) ? tnl_port_cast(cr)->portno : ODPP_NONE;
    }

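The code above runs on the slow path during the classifier lookup. Once the packet has been processed, a fast-path flow is installed and the datapath actions are executed.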

    /* Action execution callback. 'may_steal' indicates whether the packets may
     * be consumed (freed) here. */
    static void
    dp_execute_cb(void *aux_, struct dp_packet_batch *packets_,
                  const struct nlattr *a, bool may_steal)
    {
        struct dp_netdev_execute_aux *aux = aux_;       /* Auxiliary execution context. */
        uint32_t *depth = recirc_depth_get();           /* Recirculation depth. */
        struct dp_netdev_pmd_thread *pmd = aux->pmd;    /* Polling (PMD) thread. */
        struct dp_netdev *dp = pmd->dp;                 /* Datapath being polled. */
        int type = nl_attr_type(a);                     /* Action type. */
        long long now = aux->now;                       /* Current time. */
        struct tx_port *p;                              /* Transmit port. */

        switch ((enum ovs_action_attr)type) {
        ......
        case OVS_ACTION_ATTR_TUNNEL_POP:
            /* Strip the outer header. Recirculation is still required to
             * process the inner packet. */
            if (*depth < MAX_RECIRC_DEPTH) {
                struct dp_packet_batch *orig_packets_ = packets_;
                odp_port_t portno = nl_attr_get_odp_port(a);

                /* Check that the port exists. */
                p = pmd_tnl_port_cache_lookup(pmd, portno);
                if (p) {
                    struct dp_packet_batch tnl_pkt;
                    int i;

                    if (!may_steal) {
                        dp_packet_batch_clone(&tnl_pkt, packets_);
                        packets_ = &tnl_pkt;
                        dp_packet_batch_reset_cutlen(orig_packets_);
                    }

                    dp_packet_batch_apply_cutlen(packets_);

                    /* Decapsulate. p->port->netdev determines how to decap;
                     * the port number itself is irrelevant here. */
                    netdev_pop_header(p->port->netdev, packets_);
                    if (!packets_->count) {
                        return;
                    }

                    for (i = 0; i < packets_->count; i++) {
                        /* The overlay packet's input port is set to portno. */
                        packets_->packets[i]->md.in_port.odp_port = portno;
                    }

                    (*depth)++;
                    dp_netdev_recirculate(pmd, packets_);
                    (*depth)--;
                    return;
                }
            }
            break;
        ......
    }

    /* Pop the VXLAN header. */
    struct dp_packet *
    netdev_vxlan_pop_header(struct dp_packet *packet)
    {
        struct pkt_metadata *md = &packet->md;  /* Packet metadata. */
        struct flow_tnl *tnl = &md->tunnel;     /* Tunnel part of the metadata. */
        struct vxlanhdr *vxh;
        unsigned int hlen;

        pkt_metadata_init_tnl(md);              /* Initialize the metadata. */
        if (VXLAN_HLEN > dp_packet_l4_size(packet)) {
            /* The packet is shorter than a VXLAN encapsulation: error. */
            goto err;
        }

        vxh = udp_extract_tnl_md(packet, tnl, &hlen);   /* Extract tunnel info. */
        if (!vxh) {
            goto err;
        }

        /* Validate the VXLAN header. */
        if (get_16aligned_be32(&vxh->vx_flags) != htonl(VXLAN_FLAGS) ||
            (get_16aligned_be32(&vxh->vx_vni) & htonl(0xff))) {
            VLOG_WARN_RL(&err_rl, "invalid vxlan flags=%#x vni=%#x\n",
                         ntohl(get_16aligned_be32(&vxh->vx_flags)),
                         ntohl(get_16aligned_be32(&vxh->vx_vni)));
            goto err;
        }
        /* Extract the VNI and store it as the tunnel id. */
        tnl->tun_id = htonll(ntohl(get_16aligned_be32(&vxh->vx_vni)) >> 8);
        tnl->flags |= FLOW_TNL_F_KEY;

        /* Skip past the tunnel header. */
        dp_packet_reset_packet(packet, hlen + VXLAN_HLEN);

        return packet;
    err:
        dp_packet_delete(packet);
        return NULL;
    }

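The tunnel metadata is extracted into the flow and the tunnel header is skipped. Tunnel termination is now complete and the inner packet is ready to be recirculated.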
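The header validation and VNI extraction can be reproduced on a raw 8-byte VXLAN header. The following is a simplified sketch rather than the OVS routine: `vxlan_parse_vni` and `load_be32` are hypothetical helpers, and the checks mirror the two conditions in the text (flags word must be exactly 0x08000000, the low reserved byte of the VNI word must be zero).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define VXLAN_HDR_LEN        8
#define VXLAN_FLAGS_VNI_ONLY 0x08000000u   /* Only the I bit is set. */

/* Reads a big-endian 32-bit value without alignment requirements. */
static uint32_t
load_be32(const uint8_t *p)
{
    return ((uint32_t) p[0] << 24) | ((uint32_t) p[1] << 16)
           | ((uint32_t) p[2] << 8) | (uint32_t) p[3];
}

/* Validates the VXLAN header at 'hdr' and stores the 24-bit VNI in '*vni'.
 * Returns 0 on success, -1 if the header is too short or malformed. */
static int
vxlan_parse_vni(const uint8_t *hdr, size_t len, uint32_t *vni)
{
    uint32_t flags, vni_word;

    assert(hdr != NULL && vni != NULL);
    if (len < VXLAN_HDR_LEN) {
        return -1;
    }
    flags = load_be32(hdr);
    vni_word = load_be32(hdr + 4);
    if (flags != VXLAN_FLAGS_VNI_ONLY || (vni_word & 0xff)) {
        return -1;
    }
    *vni = vni_word >> 8;       /* Drop the reserved low byte. */
    return 0;
}
```

For the vxlan13 port created earlier with key=191, a valid header carries the bytes 00 00 bf in the VNI field.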

tunnel-decap-map table

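With tunnel termination covered, the next step is the post-decapsulation mapping: finding the VXLAN port that becomes the input port of the overlay packet, so that overlay processing can begin. OVS implements the decap-map function through the vxlan tunnel control block.

Looking up the vxlan port control block

After termination, the packet carries its tunnel metadata into dp_netdev_recirculate for recirculation. After recirculation it goes through the slow path and the classifier lookup.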

    static struct ofproto_dpif *
    xlate_lookup_ofproto_(const struct dpif_backer *backer, const struct flow *flow,
                          ofp_port_t *ofp_in_port, const struct xport **xportp)
    {
        /* The xlate configuration currently in effect. */
        struct xlate_cfg *xcfg = ovsrcu_get(struct xlate_cfg *, &xcfgp);
        const struct xport *xport;

        xport = xport_lookup(xcfg, tnl_port_should_receive(flow)
                             ? tnl_port_receive(flow)
                             : odp_port_to_ofport(backer, flow->in_port.odp_port));
        if (OVS_UNLIKELY(!xport)) {
            return NULL;
        }
        *xportp = xport;
        if (ofp_in_port) {
            *ofp_in_port = xport->ofp_port;
        }
        /* Use the xlate port to find its OpenFlow switch control block,
         * ofproto. */
        return xport->xbridge->ofproto;
    }

The function tnl_port_receive(flow) looks up the vxlan port control block.

    /* Looks in the table of tunnels for a tunnel matching the metadata in 'flow'.
     * Returns the 'ofport' corresponding to the new in_port, or a null pointer if
     * none is found.
     *
     * Callers should verify that 'flow' needs to be received by calling
     * tnl_port_should_receive() before this function. */
    const struct ofport_dpif *
    tnl_port_receive(const struct flow *flow) OVS_EXCLUDED(rwlock)
    {
        char *pre_flow_str = NULL;
        const struct ofport_dpif *ofport;
        struct tnl_port *tnl_port;

        fat_rwlock_rdlock(&rwlock);
        /* Find the matching tunnel port. */
        tnl_port = tnl_find(flow);
        /* Use the tunnel port as the new input port. */
        ofport = tnl_port ? tnl_port->ofport : NULL;
        if (!tnl_port) {
            char *flow_str = flow_to_string(flow);

            VLOG_WARN_RL(&rl, "receive tunnel port not found (%s)", flow_str);
            free(flow_str);
            goto out;
        }

        if (!VLOG_DROP_DBG(&dbg_rl)) {
            pre_flow_str = flow_to_string(flow);
        }

        if (pre_flow_str) {
            char *post_flow_str = flow_to_string(flow);
            char *tnl_str = tnl_port_fmt(tnl_port);
            VLOG_DBG("flow received\n"
                     "%s"
                     " pre: %s\n"
                     "post: %s",
                     tnl_str, pre_flow_str, post_flow_str);
            free(tnl_str);
            free(pre_flow_str);
            free(post_flow_str);
        }

    out:
        fat_rwlock_unlock(&rwlock);
        return ofport;
    }

tnl_find() is the same function that was listed in full earlier in this article, in the section on tunnel port lookup: it walks the 12 maps in priority order and returns the first exact match.

tunnel-encap table

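We now turn to VXLAN encapsulation. Once an overlay packet has been processed, if it is destined for another VTEP it eventually leaves through a tunnel port. The tunnel-related work is done on the slow path while the output action is being composed.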

    /* Compose the packet output action. */
    static void
    compose_output_action__(struct xlate_ctx *ctx, ofp_port_t ofp_port,
                            const struct xlate_bond_recirc *xr, bool check_stp)
    {
        const struct xport *xport = get_ofp_port(ctx->xbridge, ofp_port); /* Look up the xport. */

        ......

        if (xport->is_tunnel) {     /* The port is a tunnel port. */
            struct in6_addr dst;

            /* Save tunnel metadata so that changes made due to
             * the Logical (tunnel) Port are not visible for any further
             * matches, while explicit set actions on tunnel metadata are.
             */
            flow_tnl = flow->tunnel;    /* Save the tunnel metadata first. */
            odp_port = tnl_port_send(xport->ofport, flow, ctx->wc);
            if (odp_port == ODPP_NONE) {
                xlate_report(ctx, OFT_WARN, "Tunneling decided against output");
                goto out; /* restore flow_nw_tos */
            }
            dst = flow_tnl_dst(&flow->tunnel);
            if (ipv6_addr_equals(&dst, &ctx->orig_tunnel_ipv6_dst)) {
                xlate_report(ctx, OFT_WARN, "Not tunneling to our own address");
                goto out; /* restore flow_nw_tos */
            }
            if (ctx->xin->resubmit_stats) {     /* Update statistics. */
                netdev_vport_inc_tx(xport->netdev, ctx->xin->resubmit_stats);
            }
            if (ctx->xin->xcache) {     /* Record the netdev for statistics. */
                struct xc_entry *entry;

                entry = xlate_cache_add_entry(ctx->xin->xcache, XC_NETDEV);
                entry->dev.tx = netdev_ref(xport->netdev);
            }
            out_port = odp_port;
            /* Tunnel push or tunnel termination. */
            if (ovs_native_tunneling_is_on(ctx->xbridge->ofproto)) {
                xlate_report(ctx, OFT_DETAIL, "output to native tunnel");
                tnl_push_pop_send = true;
            } else {
                xlate_report(ctx, OFT_DETAIL, "output to kernel tunnel");
                /* Commit the tunnel action. */
                commit_odp_tunnel_action(flow, &ctx->base_flow, ctx->odp_actions);
                flow->tunnel = flow_tnl; /* Restore tunnel metadata. */
            }
        } else {
            odp_port = xport->odp_port;
            out_port = odp_port;
        }

        if (out_port != ODPP_NONE) {    /* Output translation. */
            xlate_commit_actions(ctx);  /* Commit the translated actions. */

            if (xr) {   /* A bond recirculation is required. */
                struct ovs_action_hash *act_hash;

                /* Hash action. */
                act_hash = nl_msg_put_unspec_uninit(ctx->odp_actions,
                                                    OVS_ACTION_ATTR_HASH,
                                                    sizeof *act_hash);
                act_hash->hash_alg = xr->hash_alg;
                act_hash->hash_basis = xr->hash_basis;

                /* Recirc action: add the recirculation action and set the
                 * recirculation id. */
                nl_msg_put_u32(ctx->odp_actions, OVS_ACTION_ATTR_RECIRC,
                               xr->recirc_id);
            } else {
                if (tnl_push_pop_send) {    /* A tunnel header push is needed. */
                    build_tunnel_send(ctx, xport, flow, odp_port);
                    flow->tunnel = flow_tnl; /* Restore tunnel metadata. */
                } else {
                    odp_port_t odp_tnl_port = ODPP_NONE;

                    /* XXX: Write better Filter for tunnel port. We can use inport
                     * int tunnel-port flow to avoid these checks completely.
                     *
                     * If the packet is destined for LOCAL, check whether native
                     * tunneling is enabled. */
                    if (ofp_port == OFPP_LOCAL &&
                        ovs_native_tunneling_is_on(ctx->xbridge->ofproto)) {
                        odp_tnl_port = tnl_port_map_lookup(flow, wc);
                    }

                    /* A termination entry exists: add the tunnel pop action. */
                    if (odp_tnl_port != ODPP_NONE) {
                        nl_msg_put_odp_port(ctx->odp_actions,
                                            OVS_ACTION_ATTR_TUNNEL_POP,
                                            odp_tnl_port);
                    } else {
                        /* Tunnel push-pop action is not compatible with
                         * IPFIX action. */
                        compose_ipfix_action(ctx, out_port);

                        /* Handle truncation of the mirrored packet. */
                        if (ctx->mirror_snaplen > 0 &&
                            ctx->mirror_snaplen < UINT16_MAX) {
                            struct ovs_action_trunc *trunc;

                            trunc = nl_msg_put_unspec_uninit(ctx->odp_actions,
                                                             OVS_ACTION_ATTR_TRUNC,
                                                             sizeof *trunc);
                            trunc->max_len = ctx->mirror_snaplen;
                            if (!ctx->xbridge->support.trunc) {
                                ctx->xout->slow |= SLOW_ACTION;
                            }
                        }

                        nl_msg_put_odp_port(ctx->odp_actions,
                                            OVS_ACTION_ATTR_OUTPUT,
                                            out_port);
                    }
                }
            }

            ctx->sflow_odp_port = odp_port;
            ctx->sflow_n_outputs++;
            ctx->nf_output_iface = ofp_port;    /* Record the output interface. */
        }

        /* Egress mirroring. */
        if (mbridge_has_mirrors(ctx->xbridge->mbridge) && xport->xbundle) {
            /* The bridge has mirrors and this is an output port: mirror the
             * packet according to the port's mirror policy. */
            mirror_packet(ctx, xport->xbundle,
                          xbundle_mirror_dst(xport->xbundle->xbridge,
                                             xport->xbundle));
        }

    out:
        /* Restore flow: values written into the actions must be restored. */
        flow->vlan_tci = flow_vlan_tci;
        flow->pkt_mark = flow_pkt_mark;
        flow->nw_tos = flow_nw_tos;
    }

    /* Given that 'flow' should be output to the ofport corresponding to
     * 'tnl_port', updates 'flow''s tunnel headers and returns the actual datapath
     * port that the output should happen on. May return ODPP_NONE if the output
     * shouldn't occur. */
    odp_port_t
    tnl_port_send(const struct ofport_dpif *ofport, struct flow *flow,
                  struct flow_wildcards *wc) OVS_EXCLUDED(rwlock)
    {
        const struct netdev_tunnel_config *cfg;     /* Tunnel configuration. */
        struct tnl_port *tnl_port;
        char *pre_flow_str = NULL;
        odp_port_t out_port;

        fat_rwlock_rdlock(&rwlock);         /* Take the read side of the rwlock. */
        tnl_port = tnl_find_ofport(ofport); /* Tunnel port for this ofport. */
        out_port = tnl_port ? tnl_port->match.odp_port : ODPP_NONE;
        if (!tnl_port) {
            goto out;
        }

        cfg = netdev_get_tunnel_config(tnl_port->netdev);   /* Port's tunnel config. */
        ovs_assert(cfg);

        if (!VLOG_DROP_DBG(&dbg_rl)) {
            pre_flow_str = flow_to_string(flow);
        }

        if (!cfg->ip_src_flow) {    /* A source IP is configured. */
            flow->tunnel.ip_src = in6_addr_get_mapped_ipv4(&tnl_port->match.ipv6_src);
            if (!flow->tunnel.ip_src) {
                flow->tunnel.ipv6_src = tnl_port->match.ipv6_src;
            } else {
                flow->tunnel.ipv6_src = in6addr_any;
            }
        }
        if (!cfg->ip_dst_flow) {    /* A destination IP is configured. */
            flow->tunnel.ip_dst = in6_addr_get_mapped_ipv4(&tnl_port->match.ipv6_dst);
            if (!flow->tunnel.ip_dst) {
                flow->tunnel.ipv6_dst = tnl_port->match.ipv6_dst;
            } else {
                flow->tunnel.ipv6_dst = in6addr_any;
            }
        }
        flow->tunnel.tp_dst = cfg->dst_port;    /* Destination port. */
        if (!cfg->out_key_flow) {
            flow->tunnel.tun_id = cfg->out_key;
        }

        if (cfg->ttl_inherit && is_ip_any(flow)) {
            wc->masks.nw_ttl = 0xff;            /* The TTL must be matched. */
            flow->tunnel.ip_ttl = flow->nw_ttl;
        } else {
            flow->tunnel.ip_ttl = cfg->ttl;
        }

        if (cfg->tos_inherit && is_ip_any(flow)) {
            wc->masks.nw_tos |= IP_DSCP_MASK;
            flow->tunnel.ip_tos = flow->nw_tos & IP_DSCP_MASK;
        } else {
            flow->tunnel.ip_tos = cfg->tos;
        }

        /* ECN fields are always inherited. */
        if (is_ip_any(flow)) {
            wc->masks.nw_tos |= IP_ECN_MASK;

            if (IP_ECN_is_ce(flow->nw_tos)) {
                flow->tunnel.ip_tos |= IP_ECN_ECT_0;
            } else {
                flow->tunnel.ip_tos |= flow->nw_tos & IP_ECN_MASK;
            }
        }

        flow->tunnel.flags |= (cfg->dont_fragment ? FLOW_TNL_F_DONT_FRAGMENT : 0)
            | (cfg->csum ? FLOW_TNL_F_CSUM : 0)
            | (cfg->out_key_present ? FLOW_TNL_F_KEY : 0);

        if (pre_flow_str) {
            char *post_flow_str = flow_to_string(flow);
            char *tnl_str = tnl_port_fmt(tnl_port);
            VLOG_DBG("flow sent\n"
                     "%s"
                     " pre: %s\n"
                     "post: %s",
                     tnl_str, pre_flow_str, post_flow_str);
            free(tnl_str);
            free(pre_flow_str);
            free(post_flow_str);
        }

    out:
        fat_rwlock_unlock(&rwlock);
        return out_port;
    }

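Building the outer encapsulation

This step only prepares the outer encapsulation data and stores it in tnl_push_data, ready to be written into the packet later.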

    static int
    build_tunnel_send(struct xlate_ctx *ctx, const struct xport *xport,
                      const struct flow *flow, odp_port_t tunnel_odp_port)
    {
        struct netdev_tnl_build_header_params tnl_params;
        struct ovs_action_push_tnl tnl_push_data;
        struct xport *out_dev = NULL;
        ovs_be32 s_ip = 0, d_ip = 0;
        struct in6_addr s_ip6 = in6addr_any;
        struct in6_addr d_ip6 = in6addr_any;
        struct eth_addr smac;
        struct eth_addr dmac;
        int err;
        char buf_sip6[INET6_ADDRSTRLEN];
        char buf_dip6[INET6_ADDRSTRLEN];

        /* Underlay route lookup; the tunnel's remote IP is already known from
         * the vxlan port. */
        err = tnl_route_lookup_flow(flow, &d_ip6, &s_ip6, &out_dev);
        if (err) {
            xlate_report(ctx, OFT_WARN, "native tunnel routing failed");
            return err;
        }

        xlate_report(ctx, OFT_DETAIL, "tunneling to %s via %s",
                     ipv6_string_mapped(buf_dip6, &d_ip6),
                     netdev_get_name(out_dev->netdev));

        /* Use mac addr of bridge port of the peer, i.e. the bridge's MAC
         * address becomes the source MAC. */
        err = netdev_get_etheraddr(out_dev->netdev, &smac);
        if (err) {
            xlate_report(ctx, OFT_WARN,
                         "tunnel output device lacks Ethernet address");
            return err;
        }

        d_ip = in6_addr_get_mapped_ipv4(&d_ip6);
        if (d_ip) {
            s_ip = in6_addr_get_mapped_ipv4(&s_ip6);
        }

        /* Look up the neighbor's destination MAC address. */
        err = tnl_neigh_lookup(out_dev->xbridge->name, &d_ip6, &dmac);
        if (err) {
            xlate_report(ctx, OFT_DETAIL,
                         "neighbor cache miss for %s on bridge %s, "
                         "sending %s request",
                         buf_dip6, out_dev->xbridge->name, d_ip ? "ARP" : "ND");
            if (d_ip) {
                /* Send an ARP request. */
                tnl_send_arp_request(ctx, out_dev, smac, s_ip, d_ip);
            } else {
                tnl_send_nd_request(ctx, out_dev, smac, &s_ip6, &d_ip6);
            }
            return err;
        }

        if (ctx->xin->xcache) {
            struct xc_entry *entry;

            entry = xlate_cache_add_entry(ctx->xin->xcache, XC_TNL_NEIGH);
            ovs_strlcpy(entry->tnl_neigh_cache.br_name, out_dev->xbridge->name,
                        sizeof entry->tnl_neigh_cache.br_name);
            entry->tnl_neigh_cache.d_ipv6 = d_ip6;
        }

        xlate_report(ctx, OFT_DETAIL, "tunneling from "ETH_ADDR_FMT" %s"
                     " to "ETH_ADDR_FMT" %s",
                     ETH_ADDR_ARGS(smac), ipv6_string_mapped(buf_sip6, &s_ip6),
                     ETH_ADDR_ARGS(dmac), buf_dip6);

        /* Fill in the underlay link-layer parameters. */
        netdev_init_tnl_build_header_params(&tnl_params, flow, &s_ip6, dmac, smac);
        /* Build the outer UDP, IP and Ethernet headers into tnl_push_data. */
        err = tnl_port_build_header(xport->ofport, &tnl_push_data, &tnl_params);
        if (err) {
            return err;
        }
        /* Tunnel port and output port. */
        tnl_push_data.tnl_port = odp_to_u32(tunnel_odp_port);
        tnl_push_data.out_port = odp_to_u32(out_dev->odp_port);
        /* Add a tunnel push action; the actual encapsulation happens when the
         * action is executed. */
        odp_put_tnl_push_action(ctx->odp_actions, &tnl_push_data);
        return 0;
    }

    void
    odp_put_tnl_push_action(struct ofpbuf *odp_actions,
                            struct ovs_action_push_tnl *data)
    {
        int size = offsetof(struct ovs_action_push_tnl, header);

        size += data->header_len;
        nl_msg_put_unspec(odp_actions, OVS_ACTION_ATTR_TUNNEL_PUSH, data, size);
    }

Executing the push

    /* Action execution callback. 'may_steal' indicates whether the packets may
     * be consumed (freed) here. */
    static void
    dp_execute_cb(void *aux_, struct dp_packet_batch *packets_,
                  const struct nlattr *a, bool may_steal)
    {
        ......
        case OVS_ACTION_ATTR_TUNNEL_PUSH:
            /* Tunnel handling: add the outer header. Recirculation is required.
             * Typically used for tunnels such as VXLAN. */
            if (*depth < MAX_RECIRC_DEPTH) {
                /* Nesting depth is below the limit, so recirculate. */
                struct dp_packet_batch tnl_pkt;
                struct dp_packet_batch *orig_packets_ = packets_;
                int err;

                if (!may_steal) {
                    /* The caller keeps ownership of the packets, so work on
                     * a copy. */
                    dp_packet_batch_clone(&tnl_pkt, packets_);
                    packets_ = &tnl_pkt;
                    dp_packet_batch_reset_cutlen(orig_packets_);
                }

                dp_packet_batch_apply_cutlen(packets_);

                /* Push the tunnel header. */
                err = push_tnl_action(pmd, a, packets_);
                if (!err) {
                    /* After the push, recirculate the packets. */
                    (*depth)++;
                    dp_netdev_recirculate(pmd, packets_);
                    (*depth)--;
                }
                return;
            }
            break;
        ......
        dp_packet_delete_batch(packets_, may_steal);
    }

    /* Push the tunnel header. */
    static int
    push_tnl_action(const struct dp_netdev_pmd_thread *pmd, /* Current PMD thread. */
                    const struct nlattr *attr,              /* Action attribute. */
                    struct dp_packet_batch *batch)          /* Packet batch. */
    {
        struct tx_port *tun_port;
        const struct ovs_action_push_tnl *data;
        int err;

        data = nl_attr_get(attr);

        /* Look up the tunnel port. */
        tun_port = pmd_tnl_port_cache_lookup(pmd, u32_to_odp(data->tnl_port));
        if (!tun_port) {
            err = -EINVAL;
            goto error;
        }
        /* Add the tunnel header. */
        err = netdev_push_header(tun_port->port->netdev, batch, data);
        if (!err) {
            return 0;
        }
    error:
        dp_packet_delete_batch(batch, true);
        return err;
    }

    /* Push tunnel header (reading from tunnel metadata) and resize
     * 'batch->packets' for further processing.
     *
     * The caller must make sure that 'netdev' support this operation by checking
     * that netdev_has_tunnel_push_pop() returns true. */
    int
    netdev_push_header(const struct netdev *netdev,
                       struct dp_packet_batch *batch,
                       const struct ovs_action_push_tnl *data)
    {
        int i;

        for (i = 0; i < batch->count; i++) {    /* Handle each packet in turn. */
            netdev->netdev_class->push_header(batch->packets[i], data);
            /* Key step: re-initialize the metadata of the encapsulated packet
             * in preparation for recirculation. The port used here is
             * data->out_port, the result of the underlay route lookup. */
            pkt_metadata_init(&batch->packets[i]->md, u32_to_odp(data->out_port));
        }

        return 0;
    }

    /* For VXLAN, netdev->netdev_class->push_header is the function below. */

    /* Add the tunnel header to the packet. */
    void
    netdev_tnl_push_udp_header(struct dp_packet *packet,
                               const struct ovs_action_push_tnl *data)
    {
        struct udp_header *udp;
        int ip_tot_size;

        /* Push the Ethernet and IP headers first. */
        udp = netdev_tnl_push_ip_header(packet, data->header, data->header_len,
                                        &ip_tot_size);

        /* set udp src port (derived from the packet hash). */
        udp->udp_src = netdev_tnl_get_src_port(packet);
        udp->udp_len = htons(ip_tot_size);      /* Total UDP length. */

        if (udp->udp_csum) {    /* Compute the UDP checksum. */
            uint32_t csum;
            if (netdev_tnl_is_header_ipv6(dp_packet_data(packet))) {
                csum = packet_csum_pseudoheader6(
                    netdev_tnl_ipv6_hdr(dp_packet_data(packet)));
            } else {
                csum = packet_csum_pseudoheader(
                    netdev_tnl_ip_hdr(dp_packet_data(packet)));
            }

            csum = csum_continue(csum, udp, ip_tot_size);
            udp->udp_csum = csum_finish(csum);

            if (!udp->udp_csum) {
                udp->udp_csum = htons(0xffff);
            }
        }
    }

    /* Turns the output port into the input port, i.e. converts the layer-3
     * port into a layer-2 port. */
    static inline void
    pkt_metadata_init(struct pkt_metadata *md, odp_port_t port)
    {
        /* It can be expensive to zero out all of the tunnel metadata. However,
         * we can just zero out ip_dst and the rest of the data will never be
         * looked at. */
        memset(md, 0, offsetof(struct pkt_metadata, in_port)); /* Zero everything before in_port. */
        md->tunnel.ip_dst = 0;
        md->tunnel.ipv6_dst = in6addr_any;

        md->in_port.odp_port = port;
    }

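At this point dp_netdev_recirculate is executed for recirculation. The VXLAN packet then passes through the FDB and leaves the server through the DPDK physical port.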

underlay route table

OVS routes come from two sources: routes synchronized from the kernel, which are marked Cached, and routes added with commands such as ovs-appctl ovs/route/add.

    [root@ ~]# ovs-appctl ovs/route/show
    Route Table:
    Cached: 1.1.1.1/32 dev tun0 SRC 1.1.1.1
    Cached: 10.226.137.204/32 dev eth2 SRC 10.226.137.204
    Cached: 10.255.9.204/32 dev br-phy SRC 10.255.9.204
    Cached: 127.0.0.1/32 dev lo SRC 127.0.0.1
    Cached: 169.254.169.110/32 dev tap_metadata SRC 169.254.169.110
    Cached: 169.254.169.240/32 dev tap_proxy SRC 169.254.169.240
    Cached: 169.254.169.241/32 dev tap_proxy SRC 169.254.169.241
    Cached: 169.254.169.250/32 dev tap_metadata SRC 169.254.169.250
    Cached: 169.254.169.254/32 dev tap_metadata SRC 169.254.169.254
    Cached: 172.17.0.1/32 dev docker0 SRC 172.17.0.1
    Cached: ::1/128 dev lo SRC ::1
    Cached: 10.226.137.192/27 dev eth2 SRC 10.226.137.204
    Cached: 10.226.137.224/27 dev br-phy GW 10.255.9.193 SRC 10.255.9.204
    Cached: 10.254.225.0/27 dev br-phy GW 10.255.9.193 SRC 10.255.9.204
    Cached: 10.254.225.224/27 dev br-phy GW 10.255.9.193 SRC 10.255.9.204
    Cached: 10.255.8.192/27 dev br-phy GW 10.255.9.193 SRC 10.255.9.204
    Cached: 10.255.9.192/27 dev br-phy SRC 10.255.9.204
    Cached: 1.1.1.0/24 dev br-phy GW 10.255.9.193 SRC 10.255.9.204
    Cached: 10.226.0.0/16 dev eth2 GW 10.226.137.193 SRC 10.226.137.204
    Cached: 172.17.0.0/16 dev docker0 SRC 172.17.0.1
    Cached: 127.0.0.0/8 dev lo SRC 127.0.0.1
    Cached: 0.0.0.0/0 dev eth2 GW 10.226.137.193 SRC 10.226.137.204
    Cached: fe80::/64 dev port-r6kxee6d3t SRC fe80::80a0:94ff:fedc:43b
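How such a table selects an output device can be illustrated with a small longest-prefix-match sketch. This is an assumption-laden toy, not how OVS does it internally (OVS uses its classifier for route lookup): `struct route`, `route_lookup`, `plen_to_mask` and the `IP4` macro are hypothetical, only IPv4 is covered, and a linear scan replaces any real lookup structure.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Builds a host-byte-order IPv4 address from its four octets. */
#define IP4(a, b, c, d) \
    (((uint32_t) (a) << 24) | ((uint32_t) (b) << 16) \
     | ((uint32_t) (c) << 8) | (uint32_t) (d))

/* Hypothetical route entry: prefix/plen and the output device name. */
struct route {
    uint32_t prefix;
    uint8_t plen;
    const char *dev;
};

/* Converts a prefix length (0..32) to a netmask. */
static uint32_t
plen_to_mask(uint8_t plen)
{
    assert(plen <= 32);
    return plen ? ~(uint32_t) 0 << (32 - plen) : 0;
}

/* Returns the matching route with the longest prefix, or NULL. */
static const struct route *
route_lookup(const struct route *tbl, size_t n, uint32_t dst)
{
    const struct route *best = NULL;

    for (size_t i = 0; i < n; i++) {
        uint32_t mask = plen_to_mask(tbl[i].plen);

        if ((dst & mask) == (tbl[i].prefix & mask)
            && (!best || tbl[i].plen > best->plen)) {
            best = &tbl[i];
        }
    }
    return best;
}
```

With three of the routes from the listing (0.0.0.0/0 via eth2, 10.255.9.192/27 via br-phy, 10.226.0.0/16 via eth2), a lookup for 10.255.9.200 picks the /27 on br-phy, while an unrelated address falls back to the default route.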

Route module initialization

/* Users of the route_table module should register themselves with this
 * function before making any other route_table function calls. */
void
route_table_init(void)
    OVS_EXCLUDED(route_table_mutex)
{
    ovs_mutex_lock(&route_table_mutex);
    ovs_assert(!nln);
    ovs_assert(!route_notifier);
    ovs_assert(!route6_notifier);

    ovs_router_init();
    nln = nln_create(NETLINK_ROUTE, (nln_parse_func *) route_table_parse,
                     &rtmsg);

    route_notifier =
        nln_notifier_create(nln, RTNLGRP_IPV4_ROUTE,
                            (nln_notify_func *) route_table_change, NULL);
    route6_notifier =
        nln_notifier_create(nln, RTNLGRP_IPV6_ROUTE,
                            (nln_notify_func *) route_table_change, NULL);

    route_table_reset();
    name_table_init();

    ovs_mutex_unlock(&route_table_mutex);
}

/* May not be called more than once. */
void
ovs_router_init(void)
{
    classifier_init(&cls, NULL); /* Route lookup is implemented with a classifier. */
    unixctl_command_register("ovs/route/add",
                             "ip_addr/prefix_len out_br_name gw", 2, 3,
                             ovs_router_add, NULL);
    unixctl_command_register("ovs/route/show", "", 0, 0,
                             ovs_router_show, NULL);
    unixctl_command_register("ovs/route/del", "ip_addr/prefix_len", 1, 1,
                             ovs_router_del, NULL);
    unixctl_command_register("ovs/route/lookup", "ip_addr", 1, 1,
                             ovs_router_lookup_cmd, NULL);
}

Listening for kernel route events with netlink

/* Called on every kernel route event. It only marks the table as stale. */
static void
route_table_change(const struct route_table_msg *change OVS_UNUSED,
                   void *aux OVS_UNUSED)
{
    route_table_valid = false;
}

/* Run periodically to update the locally maintained routing table. */
void
route_table_run(void)
    OVS_EXCLUDED(route_table_mutex)
{
    ovs_mutex_lock(&route_table_mutex);
    if (nln) {
        rtnetlink_run();
        nln_run(nln);

        if (!route_table_valid) {
            route_table_reset();
        }
    }
    ovs_mutex_unlock(&route_table_mutex);
}

static int
route_table_reset(void)
{
    struct nl_dump dump;
    struct rtgenmsg *rtmsg;
    uint64_t reply_stub[NL_DUMP_BUFSIZE / 8];
    struct ofpbuf request, reply, buf;

    route_map_clear(); /* Delete all routes. */
    netdev_get_addrs_list_flush();
    route_table_valid = true;
    rt_change_seq++;

    ofpbuf_init(&request, 0);
    nl_msg_put_nlmsghdr(&request, sizeof *rtmsg, RTM_GETROUTE, NLM_F_REQUEST);
    rtmsg = ofpbuf_put_zeros(&request, sizeof *rtmsg);
    rtmsg->rtgen_family = AF_UNSPEC;

    /* Dump the kernel routing table and add every route again. */
    nl_dump_start(&dump, NETLINK_ROUTE, &request);
    ofpbuf_uninit(&request);

    ofpbuf_use_stub(&buf, reply_stub, sizeof reply_stub);
    while (nl_dump_next(&dump, &reply, &buf)) {
        struct route_table_msg msg;

        if (route_table_parse(&reply, &msg)) {
            route_table_handle_msg(&msg);
        }
    }
    ofpbuf_uninit(&buf);

    return nl_dump_done(&dump);
}
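The functions above follow a "mark stale, rebuild later" pattern: the netlink callback only clears a validity flag, and the periodic run function performs one full rebuild no matter how many events arrived in between. A minimal standalone sketch of that pattern follows. All names here are hypothetical, and the rebuild is reduced to a counter so the behavior is easy to observe.

```c
#include <stdbool.h>

/* Hypothetical state mirroring the idea of route_table_valid and
 * rt_change_seq in the code above. */
static bool table_valid = false;
static unsigned int change_seq = 0;

/* Event callback: cheap, it only marks the cached table as stale. */
static void on_route_event(void)
{
    table_valid = false;
}

/* Full rebuild. A real implementation would flush the cached routes
 * and re-dump them from the kernel at this point. */
static void table_reset(void)
{
    table_valid = true;
    change_seq++;
}

/* Periodic run: rebuild at most once per call, and only if needed. */
static void table_run(void)
{
    if (!table_valid) {
        table_reset();
    }
}
```

The design trades precision for simplicity: instead of applying each route add or delete incrementally, a burst of kernel events collapses into a single rebuild on the next run.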

underlay neighbor

OVS-DPDK maintains its own underlay neighbor table for tunnels.

static void
dp_initialize(void)
{
    static struct ovsthread_once once = OVSTHREAD_ONCE_INITIALIZER;

    if (ovsthread_once_start(&once)) {
        int i;

        tnl_conf_seq = seq_create();
        dpctl_unixctl_register();
        tnl_port_map_init();
        tnl_neigh_cache_init();
        route_table_init();

        for (i = 0; i < ARRAY_SIZE(base_dpif_classes); i++) {
            dp_register_provider(base_dpif_classes[i]);
        }

        ovsthread_once_done(&once);
    }
}

void
tnl_neigh_cache_init(void)
{
    unixctl_command_register("tnl/arp/show", "", 0, 0,
                             tnl_neigh_cache_show, NULL);
    unixctl_command_register("tnl/arp/set", "BRIDGE IP MAC", 3, 3,
                             tnl_neigh_cache_add, NULL);
    unixctl_command_register("tnl/arp/flush", "", 0, 0,
                             tnl_neigh_cache_flush, NULL);
    unixctl_command_register("tnl/neigh/show", "", 0, 0,
                             tnl_neigh_cache_show, NULL);
    unixctl_command_register("tnl/neigh/set", "BRIDGE IP MAC", 3, 3,
                             tnl_neigh_cache_add, NULL);
    unixctl_command_register("tnl/neigh/flush", "", 0, 0,
                             tnl_neigh_cache_flush, NULL);
}

Use the following command to view the neighbors:

[root@ ~]# ovs-appctl tnl/arp/show
IP                MAC                 Bridge
==========================================================================
10.255.9.193      9c:e8:95:0f:49:16   br-phy
[root@ ~]#

OVS-DPDK learns neighbor information by snooping ARP packets and IPv6 neighbor discovery (ND) packets in the data plane.

/* Translate the actions. */
static void
do_xlate_actions(const struct ofpact *ofpacts, size_t ofpacts_len,
                 struct xlate_ctx *ctx)
{
    struct flow_wildcards *wc = ctx->wc;    /* Wildcards. */
    struct flow *flow = &ctx->xin->flow;    /* The flow being processed. */
    const struct ofpact *a;

    /* Neighbor snooping runs only when native tunneling is on. It snoops
     * ARP and ICMPv6 packets to learn neighbors. */
    if (ovs_native_tunneling_is_on(ctx->xbridge->ofproto)) {
        tnl_neigh_snoop(flow, wc, ctx->xbridge->name);
    }
    /* dl_type already in the mask, not set below. */
    ......
}

/* Learn neighbors: try ARP first, then fall back to IPv6 ND. */
int
tnl_neigh_snoop(const struct flow *flow, struct flow_wildcards *wc,
                const char name[IFNAMSIZ])
{
    int res;

    res = tnl_arp_snoop(flow, wc, name);
    if (res != EINVAL) {
        return res;
    }
    return tnl_nd_snoop(flow, wc, name);
}

static int
tnl_arp_snoop(const struct flow *flow, struct flow_wildcards *wc,
              const char name[IFNAMSIZ])
{
    if (flow->dl_type != htons(ETH_TYPE_ARP)
        || FLOW_WC_GET_AND_MASK_WC(flow, wc, nw_proto) != ARP_OP_REPLY
        || eth_addr_is_zero(FLOW_WC_GET_AND_MASK_WC(flow, wc, arp_sha))) {
        return EINVAL;
    }

    tnl_arp_set(name, FLOW_WC_GET_AND_MASK_WC(flow, wc, nw_src), flow->arp_sha);
    return 0;
}

static int
tnl_nd_snoop(const struct flow *flow, struct flow_wildcards *wc,
             const char name[IFNAMSIZ])
{
    if (!is_nd(flow, wc) || flow->tp_src != htons(ND_NEIGHBOR_ADVERT)) {
        return EINVAL;
    }
    /* - RFC4861 says Neighbor Advertisements sent in response to unicast Neighbor
     *   Solicitations SHOULD include the Target link-layer address. However, Linux
     *   doesn't. So, the response to Solicitations sent by OVS will include the
     *   TLL address and other Advertisements not including it can be ignored.
     * - OVS flow extract can set this field to zero in case of packet parsing errors.
     *   For details refer miniflow_extract() */
    if (eth_addr_is_zero(FLOW_WC_GET_AND_MASK_WC(flow, wc, arp_tha))) {
        return EINVAL;
    }

    memset(&wc->masks.ipv6_src, 0xff, sizeof wc->masks.ipv6_src);
    memset(&wc->masks.ipv6_dst, 0xff, sizeof wc->masks.ipv6_dst);
    memset(&wc->masks.nd_target, 0xff, sizeof wc->masks.nd_target);

    tnl_neigh_set__(name, &flow->nd_target, flow->arp_tha);
    return 0;
}
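The ARP branch of the snooping logic above boils down to one rule: accept only ARP replies with a non-zero sender MAC, and record the sender IP to sender MAC mapping. The following sketch shows that rule against a tiny fixed-size table. The struct layouts, the table size, and the function names are hypothetical, and it works on already parsed fields rather than on an OVS `struct flow`.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define ARP_OP_REPLY 2    /* ARP opcode for a reply (RFC 826). */
#define NEIGH_MAX 8       /* Hypothetical table size for this sketch. */

struct arp_info {         /* Fields already extracted from an ARP packet. */
    uint16_t op;          /* ARP opcode. */
    uint32_t spa;         /* Sender IPv4 address, host byte order. */
    uint8_t sha[6];       /* Sender MAC address. */
};

struct neigh {
    bool used;
    uint32_t ip;
    uint8_t mac[6];
};

static struct neigh neigh_tbl[NEIGH_MAX];

static bool mac_is_zero(const uint8_t mac[6])
{
    static const uint8_t zero[6];

    return memcmp(mac, zero, 6) == 0;
}

/* Learn from an ARP reply. Returns 0 if learned, -1 if ignored. */
static int arp_snoop(const struct arp_info *arp)
{
    struct neigh *match = NULL, *free_slot = NULL;
    size_t i;

    if (arp->op != ARP_OP_REPLY || mac_is_zero(arp->sha)) {
        return -1;
    }
    for (i = 0; i < NEIGH_MAX; i++) {
        if (neigh_tbl[i].used && neigh_tbl[i].ip == arp->spa) {
            match = &neigh_tbl[i];        /* Existing entry: refresh it. */
            break;
        }
        if (!neigh_tbl[i].used && !free_slot) {
            free_slot = &neigh_tbl[i];    /* Remember the first free slot. */
        }
    }
    if (!match) {
        match = free_slot;
    }
    if (!match) {
        return -1;                        /* Table full. */
    }
    match->used = true;
    match->ip = arp->spa;
    memcpy(match->mac, arp->sha, 6);
    return 0;
}

/* Return the MAC learned for 'ip', or NULL if unknown. */
static const uint8_t *neigh_lookup(uint32_t ip)
{
    size_t i;

    for (i = 0; i < NEIGH_MAX; i++) {
        if (neigh_tbl[i].used && neigh_tbl[i].ip == ip) {
            return neigh_tbl[i].mac;
        }
    }
    return NULL;
}
```

Learning only from replies means the table is populated by answers to requests that were actually sent for a tunnel next hop, such as the 10.255.9.193 gateway seen in the tnl/arp/show output.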

Periodic neighbor aging

void
tnl_neigh_cache_run(void)
{
    struct tnl_neigh_entry *neigh;
    bool changed = false;

    ovs_mutex_lock(&mutex);
    CMAP_FOR_EACH(neigh, cmap_node, &table) {
        if (neigh->expires <= time_now()) {
            tnl_neigh_delete(neigh);
            changed = true;
        }
    }
    ovs_mutex_unlock(&mutex);

    if (changed) {
        seq_change(tnl_conf_seq);
    }
}
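The aging pass above can be reduced to a small standalone sketch: every entry carries an absolute expiry time, one sweep deletes whatever has expired, and the caller is told whether anything changed so it can notify listeners once. The struct and function names are hypothetical, and the current time is passed in as a parameter to keep the example deterministic.

```c
#include <stdbool.h>
#include <stddef.h>
#include <time.h>

struct cache_entry {
    bool used;          /* Slot holds a live entry. */
    time_t expires;     /* Absolute time at which the entry ages out. */
};

/* Remove every entry whose expiry time has been reached. Returns true
 * if at least one entry was removed, so the caller can signal a
 * configuration change exactly once per sweep. */
static bool cache_age(struct cache_entry *tbl, size_t n, time_t now)
{
    bool changed = false;
    size_t i;

    for (i = 0; i < n; i++) {
        if (tbl[i].used && tbl[i].expires <= now) {
            tbl[i].used = false;
            changed = true;
        }
    }
    return changed;
}
```

Returning a single flag per sweep matches the structure of the function above, where `seq_change` is called at most once after the loop rather than once per deleted neighbor.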

Original link: https://segmentfault.com/a/1190000020337904
