
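Today I'm sharing an analysis demo taken from a large project. Suppose a company wants to analyze the traffic its website receives on campaign days and public holidays. An offline Hadoop platform is a cost-effective fit for this kind of requirement. Let's go straight to the tutorial.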

Steps:

1. Download the logs

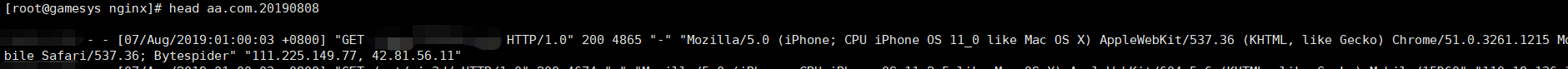

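Website projects are often deployed inside the customer's intranet, while the Hadoop platform runs on our own internal network. The two networks cannot reach each other, so the logs cannot be collected automatically. Here I simply downloaded them by hand; if you need automation, you can write your own script or use Flume to collect them.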

#1. Download the logs

lcd E:\xhexx-logs-anlily\2018-11-11

get /opt/xxx/apache-tomcat-7.0.57/logs/localhost_access_log.2018-11-11.txt

get /opt/xxx/t1/logs/localhost_access_log.2018-11-11.txt

get /opt/xxx/apache-tomcat-7.0.57_msg/logs/localhost_access_log.2018-11-11.txt

#2. Rename the log files (every download has the same name, so each one is renamed before the next is fetched), for example:

localhost_access_log_10_2_4_155_1_2018-11-11.txt

localhost_access_log_10_2_4_155_2_2018-11-11.txt

Log format sample:

1x.x.x.xxx - - [11/Nov/2018:15:58:27 +0800] "GET /xxx/xhexx_ui/bbs/js/swiper-3.3.1.min.js HTTP/1.0" 200 78313

1x.x.x.xxx - - [11/Nov/2018:15:58:37 +0800] "GET /xxx/doSign/showSign HTTP/1.0" 200 2397

1x.x.x.xxx - - [11/Nov/2018:15:58:41 +0800] "GET /xxx/ui/metro/js/metro.min.js HTTP/1.0" 200 92107

1x.x.x.xxx - - [11/Nov/2018:15:58:41 +0800] "GET /xxx/mMember/savePage HTTP/1.0" 200 3898

1x.x.x.xxx - - [11/Nov/2018:15:58:45 +0800] "POST /xxx/mMember/checkCode HTTP/1.0" 200 77

2. Upload the logs to the Hadoop cluster

cd ~/xhexx-logs-anlily/2018-11-11

hdfs dfs -put * /webloginput

[hadoop@centos-aaron-h1 2018-11-11]$ hdfs dfs -ls /webloginput

Found 47 items

-rw-r--r-- 2 hadoop supergroup 2542507 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2621956 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 5943 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2610415 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2613782 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2591445 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 5474 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2585817 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 428110 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 4139168 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 4119252 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2602771 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 4019158 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2597577 2019-01-19 22:09 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 4036010 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2591541 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2622123 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 4081492 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 4139018 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2541915 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 3994434 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2054366 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2087420 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 1970492 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 1999238 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2097946 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2113500 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2065582 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 1981415 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2054112 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2084308 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 1983759 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 1990587 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2049399 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2061087 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2040909 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2068085 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2061532 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2040548 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2070062 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2092143 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2040414 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2075960 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2070758 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 2063688 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 1964264 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_1_2018-11-11.txt

-rw-r--r-- 2 hadoop supergroup 1977532 2019-01-19 22:38 /webloginput/localhost_access_log_1x_x_x_1xx_2_2018-11-11.txt

3. Write the MR program

package com.empire.hadoop.mr.xhexxweblogwash;

/**
 * WebLogBean: bean class holding one parsed web log record.
 *
 * @author arron 2019-x-xx xx:xx:xx AM
 */
public class WebLogBean {
    private String  remoteAddr;   // client IP address
    private String  timeLocal;    // access time (local time of the log)
    private String  requestType;  // request method, e.g. POST or GET
    private String  url;          // requested URL
    private String  protocol;     // protocol, e.g. HTTP/1.0
    private String  status;       // response status code; 200 means success
    private String  traffic;      // response size in bytes
    private boolean valid = true; // whether the record parsed correctly

    public String getRemoteAddr() {
        return remoteAddr;
    }

    public void setRemoteAddr(String remoteAddr) {
        this.remoteAddr = remoteAddr;
    }

    public String getTimeLocal() {
        return timeLocal;
    }

    public void setTimeLocal(String timeLocal) {
        this.timeLocal = timeLocal;
    }

    public String getRequestType() {
        return requestType;
    }

    public void setRequestType(String requestType) {
        this.requestType = requestType;
    }

    public String getUrl() {
        return url;
    }

    public void setUrl(String url) {
        this.url = url;
    }

    public String getProtocol() {
        return protocol;
    }

    public void setProtocol(String protocol) {
        this.protocol = protocol;
    }

    public String getStatus() {
        return status;
    }

    public void setStatus(String status) {
        this.status = status;
    }

    public String getTraffic() {
        return traffic;
    }

    public void setTraffic(String traffic) {
        this.traffic = traffic;
    }

    public boolean isValid() {
        return valid;
    }

    public void setValid(boolean valid) {
        this.valid = valid;
    }

    // One comma-separated output line per record, in the column order of the MySQL table
    @Override
    public String toString() {
        StringBuilder sb = new StringBuilder();
        sb.append(this.remoteAddr);
        sb.append(",").append(this.timeLocal);
        sb.append(",").append(this.requestType);
        sb.append(",").append(this.url);
        sb.append(",").append(this.protocol);
        sb.append(",").append(this.status);
        sb.append(",").append(this.traffic);
        return sb.toString();
    }
}
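One thing to watch with the comma-joined `toString()` above: a URL whose query string contains a comma would shift the columns in a comma-delimited import. Below is a minimal sketch of one way around that, assuming the import tool accepts RFC 4180-style quoting with `"` as the text qualifier. `CsvJoin` and its methods are hypothetical names for illustration, not part of the original project.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper: joins fields into one CSV line, quoting only when needed.
final class CsvJoin {

    // Wraps a field in double quotes if it contains a comma, a quote or a line break.
    // Embedded double quotes are doubled, as in RFC 4180. A null field becomes "".
    static String escape(String field) {
        if (field == null) {
            return "";
        }
        boolean needsQuoting = field.contains(",") || field.contains("\"")
                || field.contains("\n") || field.contains("\r");
        if (!needsQuoting) {
            return field;
        }
        return "\"" + field.replace("\"", "\"\"") + "\"";
    }

    // Escapes every field and joins the results with commas.
    static String join(List<String> fields) {
        return fields.stream().map(CsvJoin::escape).collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        // The URL here is made up to show a comma inside a query string.
        System.out.println(join(Arrays.asList(
                "1x.x.x.xxx", "2018-11-11 15:58:27", "GET",
                "/xxx/list?ids=1,2", "HTTP/1.0", "200", "77")));
    }
}
```

Fields without special characters pass through unchanged, so the output for ordinary log lines stays identical to what `toString()` produces.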

package com.empire.hadoop.mr.xhexxweblogwash;

import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;

/**
 * WebLogParser: parses raw access log lines.
 *
 * @author arron 2019-x-xx xx:xx:xx AM
 */
public class WebLogParser {
    /**
     * Timestamp format in the raw log. SimpleDateFormat is not thread-safe,
     * so this parser must only be called from a single thread.
     */
    private static SimpleDateFormat sd1 = new SimpleDateFormat("dd/MMM/yyyy:HH:mm:ss", Locale.US);
    /**
     * Timestamp format after parsing
     */
    private static SimpleDateFormat sd2 = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

    /**
     * Parses one log line.
     *
     * @param line raw access log line
     * @return the parsed bean; isValid() is false if the line could not be parsed
     */
    public static WebLogBean parser(String line) {
        WebLogBean webLogBean = new WebLogBean();
        String[] arr = line.split(" ");
        // A well-formed line splits into exactly 10 space-separated fields
        if (arr.length == 10) {
            String time = parseTime(arr[3].substring(1)); // drop the leading '['
            webLogBean.setRemoteAddr(arr[0]);
            webLogBean.setTimeLocal(time);
            webLogBean.setRequestType(arr[5].substring(1)); // drop the leading '"'
            webLogBean.setUrl(arr[6]);
            webLogBean.setProtocol(arr[7].substring(0, arr[7].length() - 1)); // drop the trailing '"'
            webLogBean.setStatus(arr[8]);
            // Tomcat writes "-" when no bytes were sent
            if ("-".equals(arr[9])) {
                webLogBean.setTraffic("0");
            } else {
                webLogBean.setTraffic(arr[9]);
            }
            // A line whose timestamp failed to parse is treated as invalid
            webLogBean.setValid(!time.isEmpty());
        } else {
            webLogBean.setValid(false);
        }
        return webLogBean;
    }

    /**
     * Converts the log timestamp to yyyy-MM-dd HH:mm:ss.
     *
     * @param dt timestamp such as 11/Nov/2018:15:58:27
     * @return the reformatted timestamp, or an empty string if parsing fails
     */
    public static String parseTime(String dt) {
        String timeString = "";
        try {
            Date parse = sd1.parse(dt);
            timeString = sd2.format(parse);
        } catch (ParseException e) {
            e.printStackTrace();
        }
        return timeString;
    }

    public static void main(String[] args) {
        String parseTime = WebLogParser.parseTime("18/Sep/2013:06:49:48");
        System.out.println(parseTime);
        String line = "1x.x.x.1xx - - [11/Nov/2018:09:45:31 +0800] \"POST /xxx/wxxlotteryactivitys/xotterxClick HTTP/1.0\" 200 191";
        WebLogBean webLogBean = WebLogParser.parser(line);
        if (!webLogBean.isValid())
            return;
        System.out.println(webLogBean);
    }
}
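The parser above relies on splitting by spaces and on `SimpleDateFormat`, which is not thread-safe. As an alternative, here is a minimal sketch that matches the whole line with a regular expression and converts the timestamp with the immutable `java.time.DateTimeFormatter`. The class name `AccessLogLine`, the method `toCsv` and the exact pattern are assumptions made for illustration; the pattern is only checked against the log format sample shown earlier, not against every Tomcat access log variant.

```java
import java.time.OffsetDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;
import java.util.Locale;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical alternative parser: one regex for the whole line, java.time for the timestamp.
final class AccessLogLine {

    // Groups: 1 = client IP, 2 = timestamp with offset, 3 = method, 4 = URL,
    // 5 = protocol, 6 = status code, 7 = bytes sent (or "-")
    private static final Pattern LINE = Pattern.compile(
            "^(\\S+) \\S+ \\S+ \\[([^\\]]+)\\] \"(\\S+) (.*?) (\\S+)\" (\\d{3}) (\\d+|-)$");

    private static final DateTimeFormatter IN =
            DateTimeFormatter.ofPattern("dd/MMM/yyyy:HH:mm:ss Z", Locale.US);
    private static final DateTimeFormatter OUT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // Returns the cleaned comma-separated record, or empty if the line does not parse.
    static Optional<String> toCsv(String line) {
        Matcher m = LINE.matcher(line);
        if (!m.matches()) {
            return Optional.empty();
        }
        String time;
        try {
            // Keeps the wall-clock time as written in the log; the offset is dropped on output.
            time = OffsetDateTime.parse(m.group(2), IN).format(OUT);
        } catch (DateTimeParseException e) {
            return Optional.empty();
        }
        String traffic = "-".equals(m.group(7)) ? "0" : m.group(7);
        return Optional.of(String.join(",",
                m.group(1), time, m.group(3), m.group(4), m.group(5), m.group(6), traffic));
    }

    public static void main(String[] args) {
        String line = "1x.x.x.1xx - - [11/Nov/2018:09:45:31 +0800] "
                + "\"POST /xxx/wxxlotteryactivitys/xotterxClick HTTP/1.0\" 200 191";
        System.out.println(toCsv(line).orElse("invalid line"));
    }
}
```

Returning an `Optional` plays the same role as the `valid` flag on the bean: the caller skips lines for which nothing comes back.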

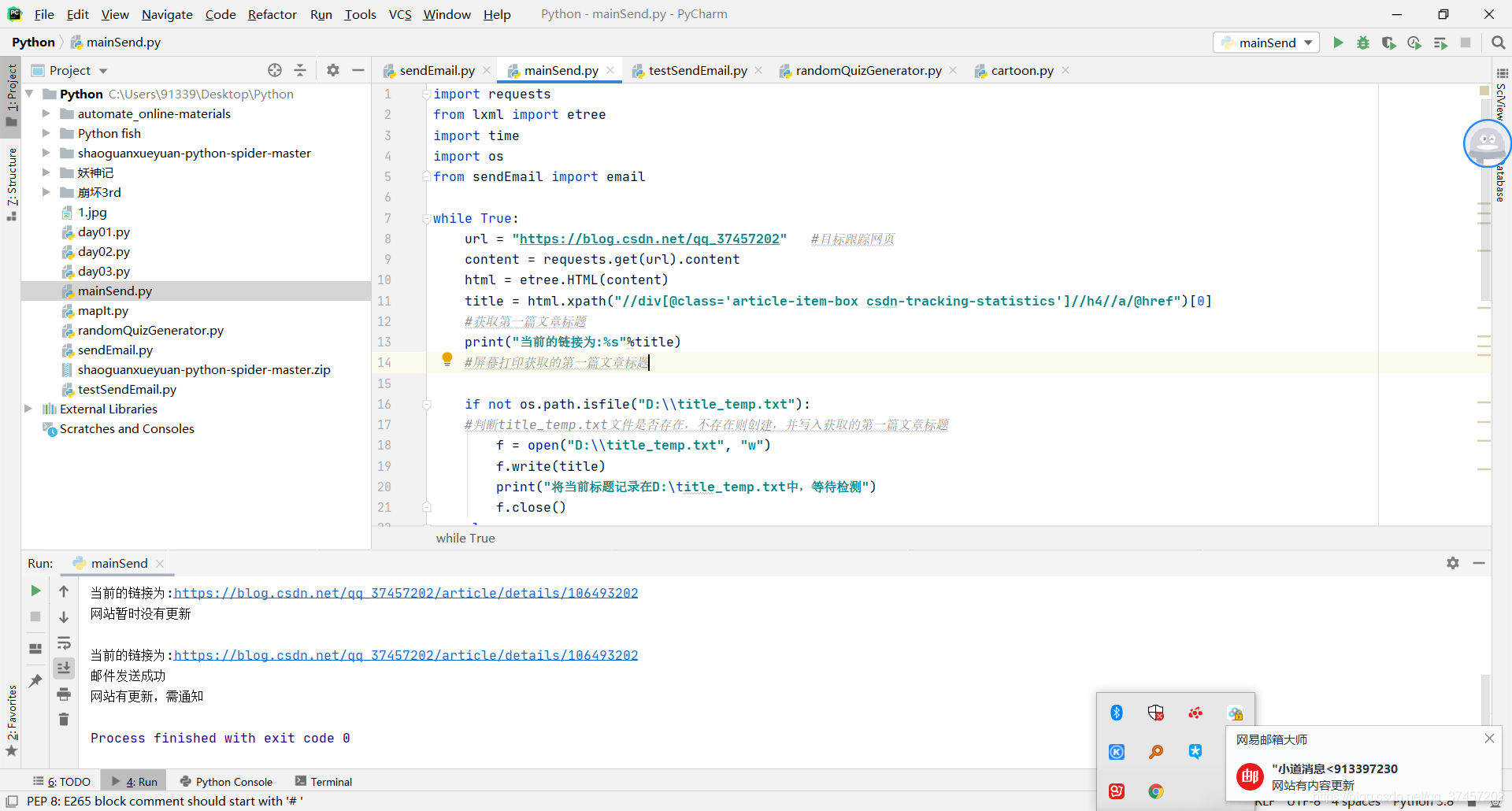

The MapReduce main program

package com.empire.hadoop.mr.xhexxweblogwash;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * WeblogPreProcess: main class of the web log cleaning job.
 *
 * @author arron 2019-x-xx xx:xx:xx AM
 */
public class WeblogPreProcess {

    static class WeblogPreProcessMapper extends Mapper<LongWritable, Text, Text, NullWritable> {
        // Reused for every record to avoid allocating new objects per line
        Text         k = new Text();
        NullWritable v = NullWritable.get();

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String line = value.toString();
            WebLogBean webLogBean = WebLogParser.parser(line);
            // Skip lines that could not be parsed
            if (!webLogBean.isValid())
                return;
            k.set(webLogBean.toString());
            context.write(k, v);
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        job.setJarByClass(WeblogPreProcess.class);
        job.setMapperClass(WeblogPreProcessMapper.class);

        // No reducer class is set, so Hadoop's default reducer passes the records through unchanged
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(NullWritable.class);

        // args[0] = input directory, args[1] = output directory (must not exist yet)
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Report job success or failure through the exit code
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}

Package the program above and upload it to the node of the Hadoop cluster from which YARN jobs are submitted.

4. Run the program

[hadoop@centos-aaron-h1 ~]$ hadoop jar weblogclear.jar com.empire.hadoop.mr.xhexxweblogwash.WeblogPreProcess /webloginput /weblogout

19/01/20 02:10:53 INFO client.RMProxy: Connecting to ResourceManager at centos-aaron-h1/192.168.29.144:8032

19/01/20 02:10:53 WARN mapreduce.JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

19/01/20 02:10:54 INFO input.FileInputFormat: Total input files to process : 47

19/01/20 02:10:54 INFO mapreduce.JobSubmitter: number of splits:47

19/01/20 02:10:54 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

19/01/20 02:10:54 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1547906537546_0004

19/01/20 02:10:54 INFO impl.YarnClientImpl: Submitted application application_1547906537546_0004

19/01/20 02:10:55 INFO mapreduce.Job: The url to track the job: http://centos-aaron-h1:8088/proxy/application_1547906537546_0004/

19/01/20 02:10:55 INFO mapreduce.Job: Running job: job_1547906537546_0004

19/01/20 02:11:03 INFO mapreduce.Job: Job job_1547906537546_0004 running in uber mode : false

19/01/20 02:11:03 INFO mapreduce.Job: map 0% reduce 0%

19/01/20 02:11:41 INFO mapreduce.Job: map 7% reduce 0%

19/01/20 02:11:44 INFO mapreduce.Job: map 10% reduce 0%

19/01/20 02:11:45 INFO mapreduce.Job: map 13% reduce 0%

19/01/20 02:11:58 INFO mapreduce.Job: map 20% reduce 0%

19/01/20 02:12:01 INFO mapreduce.Job: map 23% reduce 0%

19/01/20 02:12:02 INFO mapreduce.Job: map 25% reduce 0%

19/01/20 02:12:03 INFO mapreduce.Job: map 29% reduce 0%

19/01/20 02:12:04 INFO mapreduce.Job: map 35% reduce 0%

19/01/20 02:12:05 INFO mapreduce.Job: map 37% reduce 0%

19/01/20 02:12:07 INFO mapreduce.Job: map 39% reduce 0%

19/01/20 02:12:09 INFO mapreduce.Job: map 41% reduce 0%

19/01/20 02:12:10 INFO mapreduce.Job: map 47% reduce 0%

19/01/20 02:12:20 INFO mapreduce.Job: map 55% reduce 0%

19/01/20 02:12:23 INFO mapreduce.Job: map 56% reduce 0%

19/01/20 02:12:24 INFO mapreduce.Job: map 59% reduce 0%

19/01/20 02:12:25 INFO mapreduce.Job: map 60% reduce 0%

19/01/20 02:12:46 INFO mapreduce.Job: map 62% reduce 20%

19/01/20 02:12:49 INFO mapreduce.Job: map 66% reduce 20%

19/01/20 02:12:51 INFO mapreduce.Job: map 79% reduce 20%

19/01/20 02:12:52 INFO mapreduce.Job: map 83% reduce 21%

19/01/20 02:12:53 INFO mapreduce.Job: map 84% reduce 21%

19/01/20 02:12:54 INFO mapreduce.Job: map 85% reduce 21%

19/01/20 02:12:55 INFO mapreduce.Job: map 89% reduce 21%

19/01/20 02:12:58 INFO mapreduce.Job: map 89% reduce 30%

19/01/20 02:12:59 INFO mapreduce.Job: map 91% reduce 30%

19/01/20 02:13:00 INFO mapreduce.Job: map 95% reduce 30%

19/01/20 02:13:01 INFO mapreduce.Job: map 96% reduce 30%

19/01/20 02:13:02 INFO mapreduce.Job: map 98% reduce 30%

19/01/20 02:13:03 INFO mapreduce.Job: map 100% reduce 30%

19/01/20 02:13:04 INFO mapreduce.Job: map 100% reduce 40%

19/01/20 02:13:07 INFO mapreduce.Job: map 100% reduce 100%

19/01/20 02:13:07 INFO mapreduce.Job: Job job_1547906537546_0004 completed successfully

19/01/20 02:13:08 INFO mapreduce.Job: Counters: 51
    File System Counters
        FILE: Number of bytes read=98621200
        FILE: Number of bytes written=206697260
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=110656205
        HDFS: Number of bytes written=96528650
        HDFS: Number of read operations=144
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
    Job Counters
        Killed map tasks=2
        Launched map tasks=48
        Launched reduce tasks=1
        Data-local map tasks=44
        Rack-local map tasks=4
        Total time spent by all maps in occupied slots (ms)=2281022
        Total time spent by all reduces in occupied slots (ms)=61961
        Total time spent by all map tasks (ms)=2281022
        Total time spent by all reduce tasks (ms)=61961
        Total vcore-milliseconds taken by all map tasks=2281022
        Total vcore-milliseconds taken by all reduce tasks=61961
        Total megabyte-milliseconds taken by all map tasks=2335766528
        Total megabyte-milliseconds taken by all reduce tasks=63448064
    Map-Reduce Framework
        Map input records=911941
        Map output records=911941
        Map output bytes=96661657
        Map output materialized bytes=98621470
        Input split bytes=7191
        Combine input records=0
        Combine output records=0
        Reduce input groups=855824
        Reduce shuffle bytes=98621470
        Reduce input records=911941
        Reduce output records=911941
        Spilled Records=1823882
        Shuffled Maps =47
        Failed Shuffles=0
        Merged Map outputs=47
        GC time elapsed (ms)=64058
        CPU time spent (ms)=130230
        Physical memory (bytes) snapshot=4865814528
        Virtual memory (bytes) snapshot=40510705664
        Total committed heap usage (bytes)=5896761344
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=110649014
    File Output Format Counters
        Bytes Written=96528650

[hadoop@centos-aaron-h1 ~]$ hdfs dfs -ls /weblogout

Found 2 items

-rw-r--r-- 2 hadoop supergroup 0 2019-01-20 02:13 /weblogout/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 96528650 2019-01-20 02:13 /weblogout/part-r-00000

[hadoop@centos-aaron-h1 ~]$ hdfs dfs -cat /weblogout/part-r-00000 |more

1x.x.4.x0x,2018-11-11 00:00:01,GET,/xxx/xxxAppoint/showAppointmentList,HTTP/1.0,200,1670

1x.x.4.x0x,2018-11-11 00:00:02,GET,/xxx/xxxSign/showSign,HTTP/1.0,200,2384

1x.x.4.x0x,2018-11-11 00:00:05,POST,/xxx/xxxSign/addSign,HTTP/1.0,200,224

1x.x.4.x0x,2018-11-11 00:00:08,GET,/xxx/xxxMember/mycard,HTTP/1.0,200,1984

1x.x.4.x0x,2018-11-11 00:00:08,POST,/xxx/xxx/activity/rotarydisc,HTTP/1.0,200,230

1x.x.4.x0x,2018-11-11 00:00:09,POST,/xxx/xxxMember/mycardresult,HTTP/1.0,200,1104

1x.x.4.x0x,2018-11-11 00:00:10,GET,/xxx/resources/dojo/dojo.js,HTTP/1.0,200,257598

1x.x.4.x0x,2018-11-11 00:00:10,GET,/xxx/resources/spring/Spring-Dojo.js,HTTP/1.0,200,9520

1x.x.4.x0x,2018-11-11 00:00:10,GET,/xxx/resources/spring/Spring.js,HTTP/1.0,200,3170

1x.x.4.x0x,2018-11-11 00:00:10,GET,/xxx/wcclotteryactivitys/showActivity?id=268&code=081AvJud1SCu4t0Bactd11aGud1AvJuM&state=272,HTTP/1.0,200,30334

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/js/date/WdatePicker.js,HTTP/1.0,200,10235

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/js/hcharts/js/highcharts-more.js,HTTP/1.0,200,23172

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/js/hcharts/js/highcharts.js,HTTP/1.0,200,153283

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/js/hcharts/js/modules/exporting.js,HTTP/1.0,200,7449

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/js/jquery.min.js,HTTP/1.0,200,83477

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/js/jquery.widget.min.js,HTTP/1.0,200,6520

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/resources/dojo/nls/dojo_zh-cn.js,HTTP/1.0,200,6757

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/ui/dtGrid/i18n/zh-cn.js,HTTP/1.0,200,8471

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/ui/dtGrid/jquery.dtGrid.js,HTTP/1.0,200,121247

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/ui/metro/js/metro.min.js,HTTP/1.0,200,92107

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/ui/zTree/js/jquery.ztree.core-3.5.js,HTTP/1.0,200,55651

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/ui/zTree/js/jquery.ztree.excheck-3.5.js,HTTP/1.0,200,21486

1x.x.4.x0x,2018-11-11 00:00:11,GET,/xxx/ui/zTree/js/jquery.ztree.exedit-3.5.js,HTTP/1.0,200,42910

1x.x.4.x0x,2018-11-11 00:00:11,POST,/xxx/xxxMember/mycardresult,HTTP/1.0,200,1104

1x.x.4.x0x,2018-11-11 00:00:12,GET,/xxx/js/lottery/jQueryRotate.2.2.js,HTTP/1.0,200,11500

1x.x.4.x0x,2018-11-11 00:00:12,GET,/xxx/js/lottery/jquery.easing.min.js,HTTP/1.0,200,5555

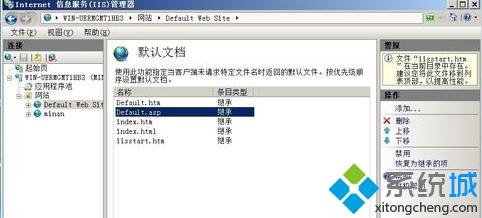

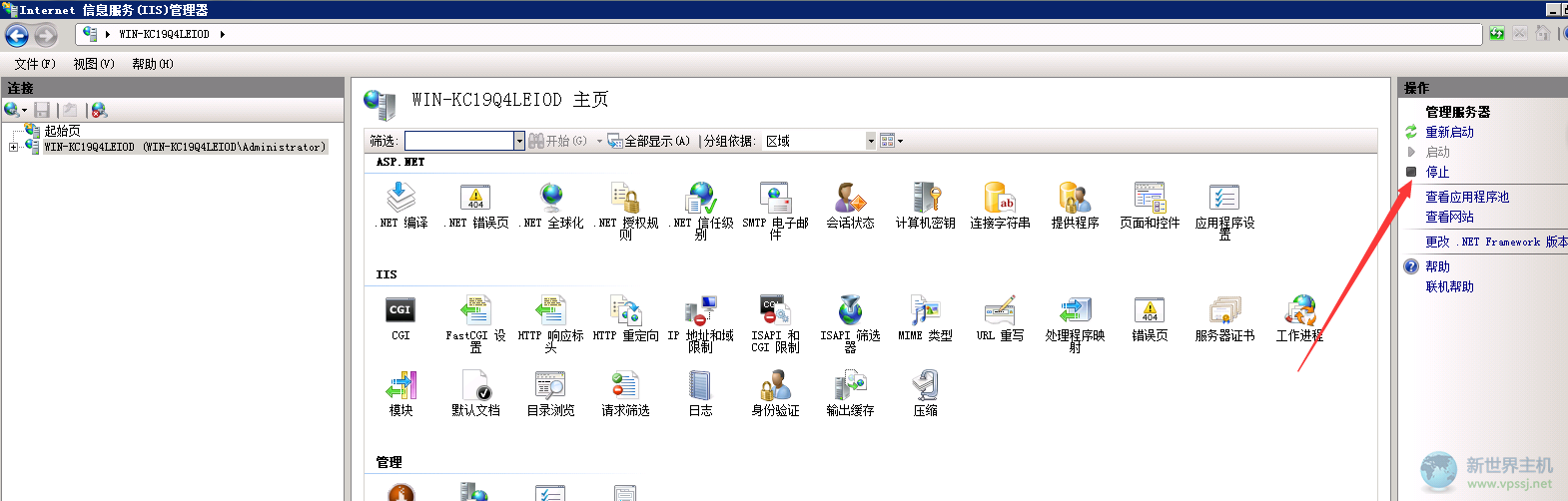

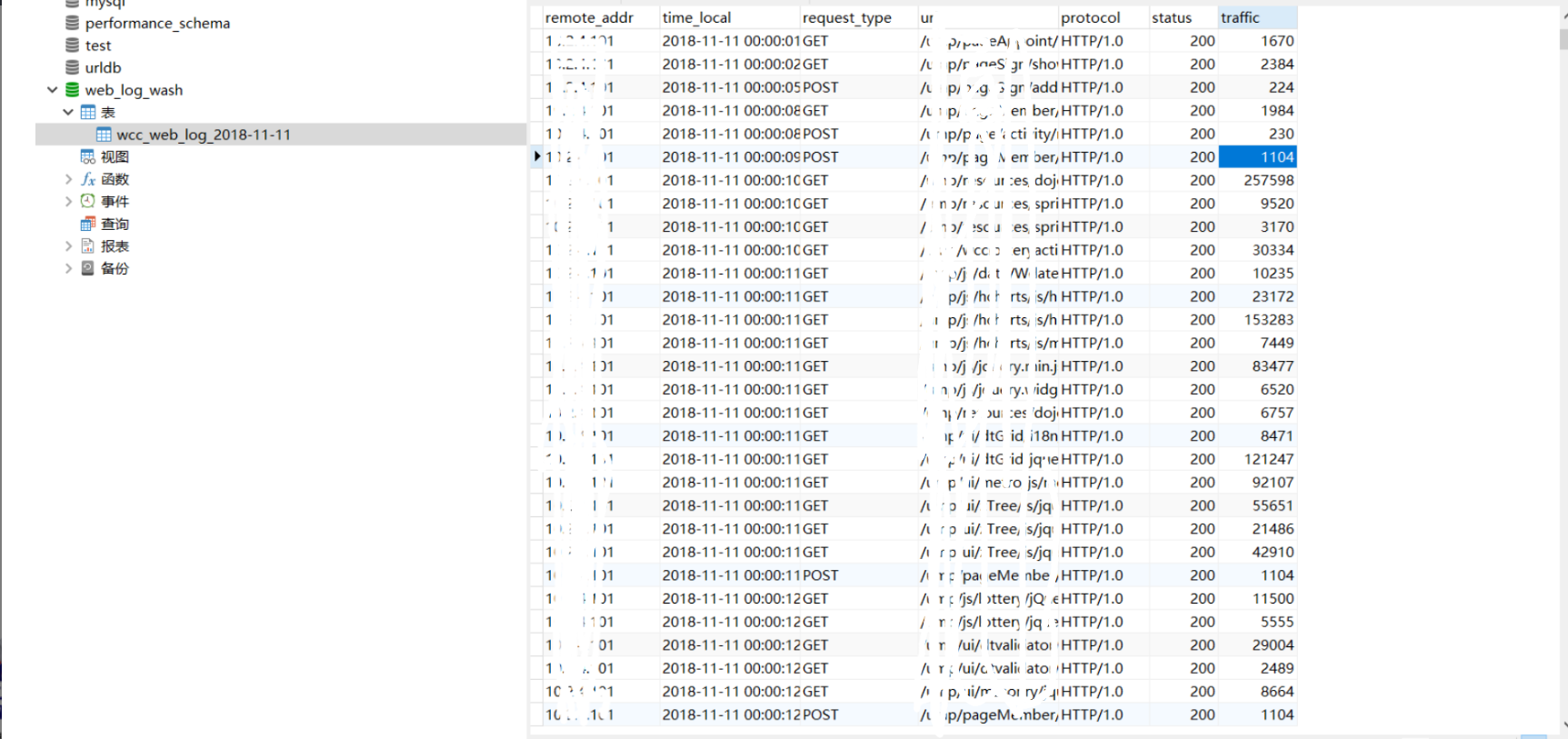

--More--

5. Prepare the MySQL data

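One day of data is only about 100 MB, which is not particularly large, so we import it straight into MySQL with Navicat for analysis. This makes it easy to run analyses for the customer; we can even hand the SQL over to the customer and let them run the queries themselves.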

#a. Download the result file

hdfs dfs -get /weblogout/part-r-00000

#b. Create the table in MySQL

DROP TABLE IF EXISTS `wcc_web_log_2018-11-11`;

CREATE TABLE `wcc_web_log_2018-11-11` (
  `remote_addr` varchar(255) CHARACTER SET utf8 COLLATE utf8_bin NULL DEFAULT NULL,
  `time_local` datetime(0) NULL DEFAULT NULL,
  `request_type` varchar(255) CHARACTER SET utf8 COLLATE utf8_bin NULL DEFAULT NULL,
  `url` varchar(500) CHARACTER SET utf8 COLLATE utf8_bin NULL DEFAULT NULL,
  `protocol` varchar(255) CHARACTER SET utf8 COLLATE utf8_bin NULL DEFAULT NULL,
  `status` int(255) NULL DEFAULT NULL,
  `traffic` int(11) NULL DEFAULT NULL,
  INDEX `idx_wcc_web_log_2018_11_11_time_local`(`time_local`) USING BTREE
) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_bin ROW_FORMAT = Compact;

#c. Import the file into MySQL using Navicat's txt import (the tool is your choice here; Sqoop would also work)

6. Statistical analysis

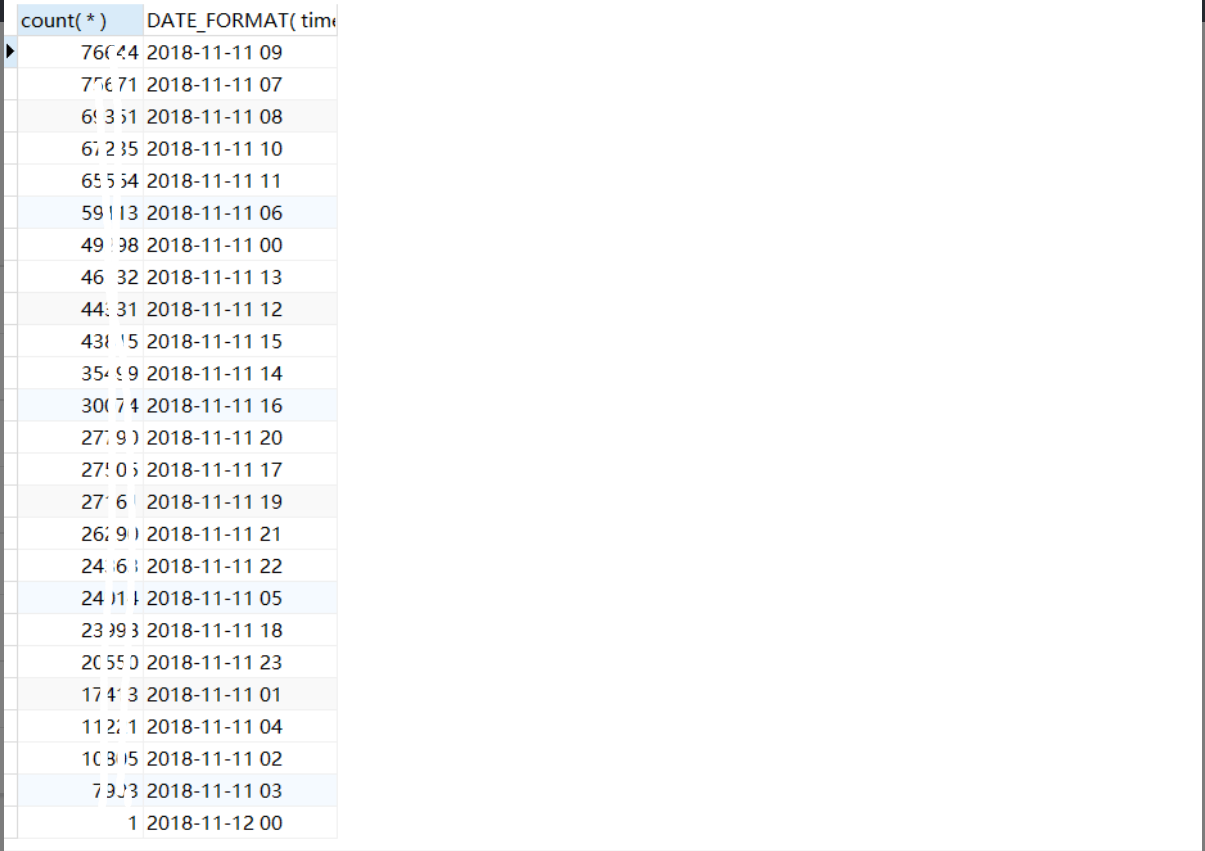

(1) Requests per hour, sorted by count in descending order

SELECT count( * ), DATE_FORMAT( time_local, "%Y-%m-%d %H" )

FROM `wcc_web_log_2018-11-11`

GROUP BY DATE_FORMAT( time_local, "%Y-%m-%d %H" )

ORDER BY count( * ) DESC;

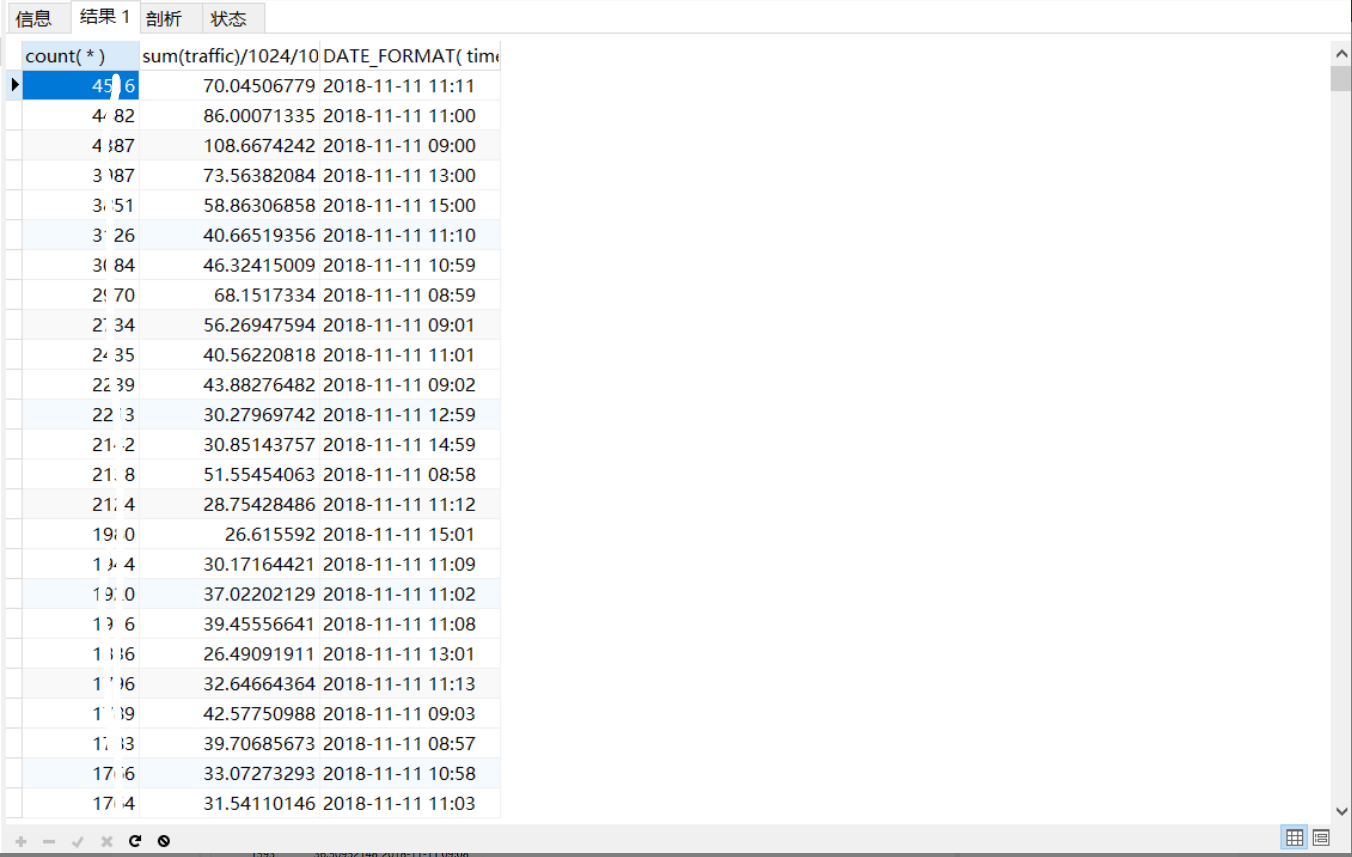

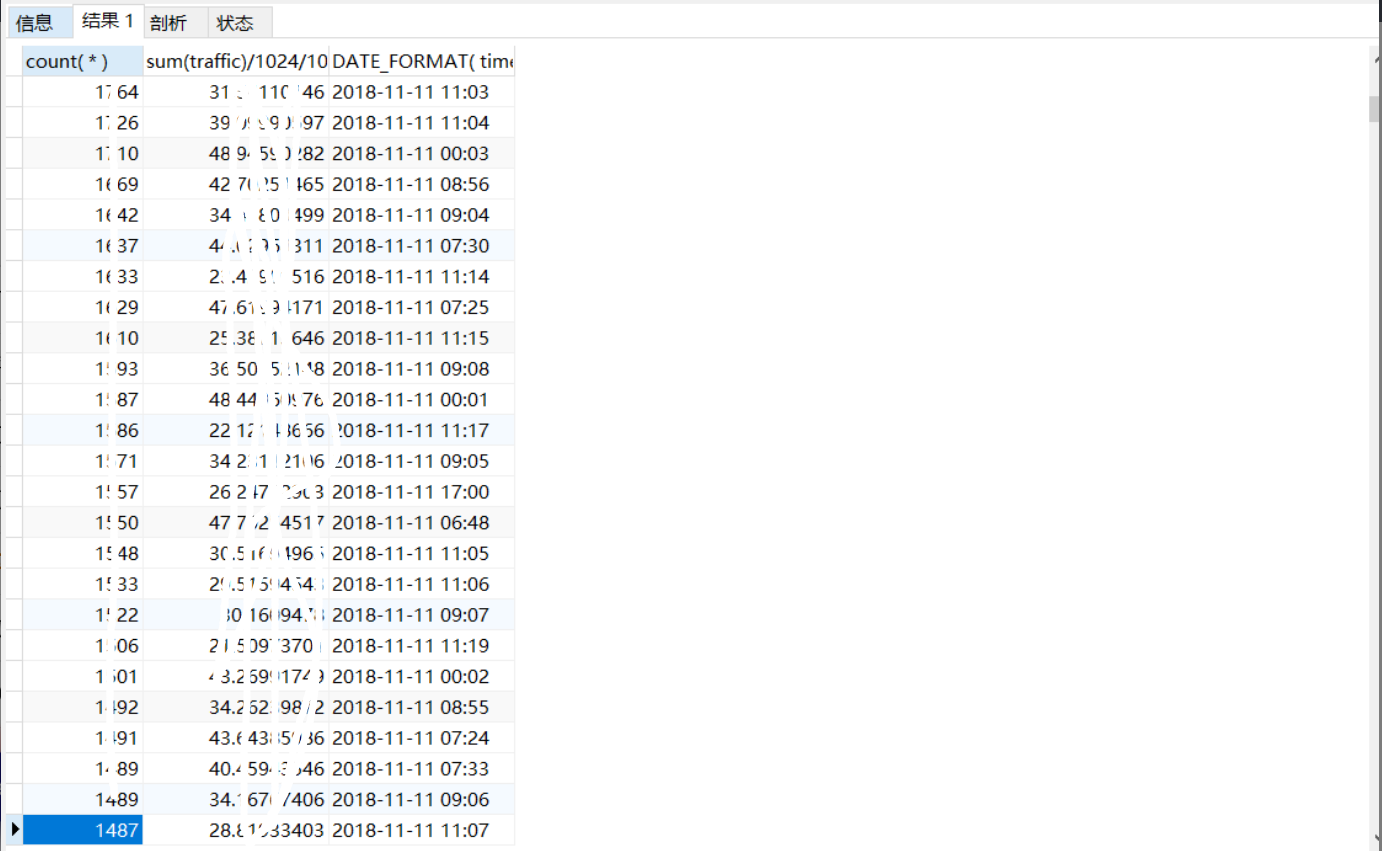

(2) Requests and traffic per minute (unit: MB)

SELECT count( * ), sum(traffic)/1024/1024, DATE_FORMAT( time_local, "%Y-%m-%d %H:%i" )

FROM `wcc_web_log_2018-11-11`

GROUP BY DATE_FORMAT( time_local, "%Y-%m-%d %H:%i" )

ORDER BY count(*) DESC;

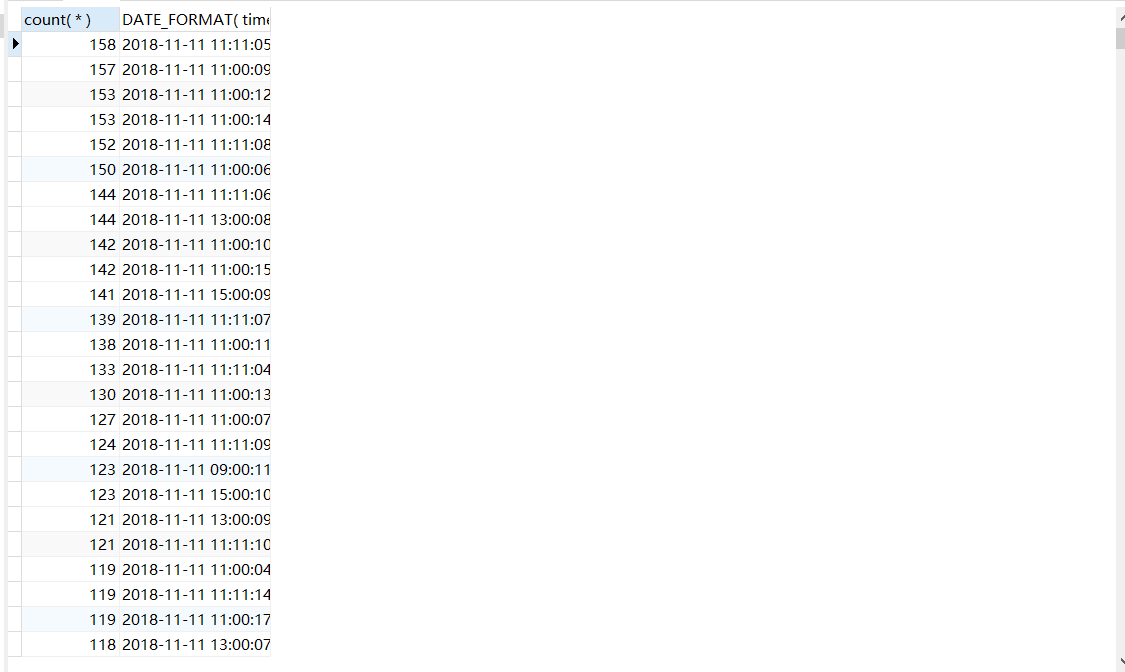

(3) Requests per second

SELECT count( * ), DATE_FORMAT( time_local, "%Y-%m-%d %H:%i:%S" )

FROM `wcc_web_log_2018-11-11`

GROUP BY DATE_FORMAT( time_local, "%Y-%m-%d %H:%i:%S" )

ORDER BY count(*) DESC;
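Queries (1) to (3) differ only in how much of the timestamp they keep. As a cross-check on a small sample, the same grouping can be sketched in plain Java over lines of the cleaned output, where the timestamp is the second comma-separated field. `TimeBucketCount` and its constants are hypothetical names. Unlike the SQL, this sketch returns the buckets sorted by time rather than by count.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: counts cleaned log lines per hour, minute or second.
final class TimeBucketCount {

    // Number of leading characters of "yyyy-MM-dd HH:mm:ss" that identify each bucket
    static final int HOUR = 13;   // "2018-11-11 00"
    static final int MINUTE = 16; // "2018-11-11 00:00"
    static final int SECOND = 19; // "2018-11-11 00:00:01"

    // Groups lines by the first prefixLength characters of their timestamp field.
    static Map<String, Long> countBy(List<String> cleanedLines, int prefixLength) {
        Map<String, Long> counts = new TreeMap<>();
        for (String line : cleanedLines) {
            // Only the first two fields are needed, so stop splitting after them
            String[] parts = line.split(",", 3);
            if (parts.length < 3 || parts[1].length() < prefixLength) {
                continue; // skip malformed lines
            }
            counts.merge(parts[1].substring(0, prefixLength), 1L, Long::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Three lines taken from the cleaned output shown earlier
        List<String> sample = Arrays.asList(
                "1x.x.4.x0x,2018-11-11 00:00:01,GET,/xxx/xxxAppoint/showAppointmentList,HTTP/1.0,200,1670",
                "1x.x.4.x0x,2018-11-11 00:00:02,GET,/xxx/xxxSign/showSign,HTTP/1.0,200,2384",
                "1x.x.4.x0x,2018-11-11 00:00:05,POST,/xxx/xxxSign/addSign,HTTP/1.0,200,224");
        System.out.println(countBy(sample, MINUTE));
    }
}
```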

(4) Requests per URL (for this one it is best to strip everything from the "?" onward in the URL during the MR step)

SELECT count(*),url from `wcc_web_log_2018-11-11`

GROUP BY url

ORDER BY count(*) DESC;
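The note in (4) about cutting the URL at the "?" could be sketched as follows. `UrlPaths.stripQuery` is a hypothetical helper, not part of the original program; one place to call it would be in the parser, just before the URL is stored on the bean.

```java
// Hypothetical helper: drops the query string so that requests to the same
// endpoint group together regardless of their parameters.
final class UrlPaths {

    // Returns the part of the URL before the first '?', or the URL unchanged if there is none.
    static String stripQuery(String url) {
        if (url == null) {
            return null;
        }
        int q = url.indexOf('?');
        return q < 0 ? url : url.substring(0, q);
    }

    public static void main(String[] args) {
        // URL taken from the cleaned output shown earlier
        System.out.println(stripQuery(
                "/xxx/wcclotteryactivitys/showActivity?id=268&code=081AvJud1SCu4t0Bactd11aGud1AvJuM&state=272"));
    }
}
```

With this applied, all visits to `showActivity` would be counted under one URL instead of one row per distinct `id`, `code` and `state` combination.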

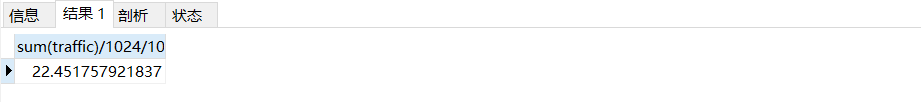

(5) Total traffic (unit: GB)

SELECT sum(traffic)/1024/1024/1024 from `wcc_web_log_2018-11-11`

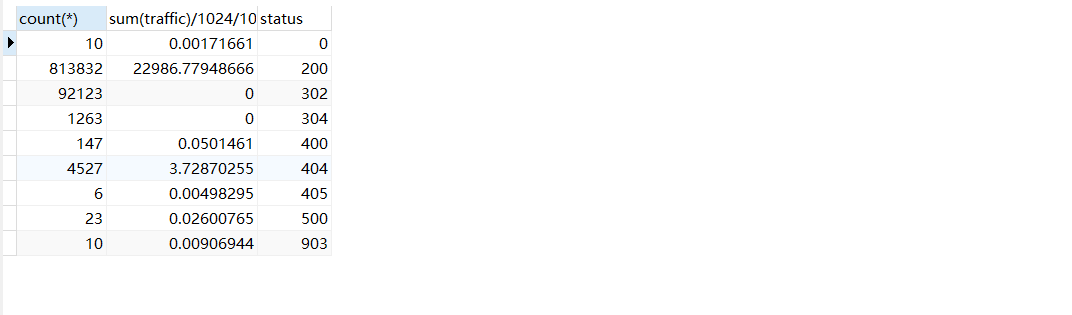

(6) Request count and total traffic by response status code (unit: MB)

SELECT count(*),sum(traffic)/1024/1024,status from `wcc_web_log_2018-11-11` GROUP BY status

(7) Total number of requests

select count(*) from `wcc_web_log_2018-11-11`

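7. Summary

If you need to produce reports from this analysis, querying this table is all it takes; the same queries can also feed emailed reports. That said, I recommend using Hive for this kind of analysis. I went straight to MySQL here only because of what this particular analysis called for.

That is everything for this post. If you found it useful, please give it a like; if you are interested in my other server and big-data articles, or in me, follow my blog, and feel free to get in touch any time.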