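First, set up a spider file. Once testing confirms that the configured URL endpoint works, the next step is to extract data with XPath (which fields to extract depends on your own needs).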

First, take a look at the page to be scraped:

Then look at the code:

    import re

    import scrapy


    class BilianSpider(scrapy.Spider):
        name = 'bilian'
        allowed_domains = ['ebnew.com']
        # start_urls = ['http://ebnew.com/']

        # Format of the stored data
        sql_data = dict(
            projectcode='',  # project code
            web='',  # source website
            keyword='',  # keyword
            detail_url='',  # URL of the tender detail page
            title='',  # title as published on the third-party site
            toptype='',  # information type
            province='',  # province
            Product='',  # product category
            industry='',  # industry
            tendering_manner='',  # tendering method
            publicity_date='',  # tender publication date
            expiry_date='',  # tender deadline
        )

        # Format of the submitted form data
        form_data = dict(
            infoClassCodes='',
            rangeType='',
            projectType='bid',
            fundSourceCodes='',
            dateType='',
            startDateCode='',
            endDateCode='',
            normIndustry='',
            normIndustryName='',
            zone='',
            zoneName='',
            zoneText='',
            key='',  # search keyword
            pubDateType='',
            pubDateBegin='',
            pubDateEnd='',
            sortMethod='timeDesc',
            orgName='',
            currentPage='',  # current page number
        )

        def start_requests(self):
            from_data = self.form_data
            from_data['key'] = '路由器'  # search keyword ("router")
            from_data['currentPage'] = '2'
            yield scrapy.FormRequest(
                url='https://ss.ebnew.com/tradingSearch/index.htm',
                formdata=from_data,
                callback=self.parse_page1,
            )

        # def parse_page1(self, response):
        #     with open('2.html', 'wb') as f:
        #         f.write(response.body)
        # //div[@class="abstract-box mg-t25 ebnew-border-bottom mg-r15"]/div/i[1]/text()

        def parse_page1(self, response):
            # One node per search-result entry
            content_list_x_s = response.xpath('//div[@class="ebnew-content-list"]/div')
            for content_list_x in content_list_x_s:
                toptype = content_list_x.xpath('./div/i[1]/text()').extract_first()
                publicity_date = content_list_x.xpath('./div/i[2]/text()').extract_first()
                title = content_list_x.xpath('./div/a/text()').extract_first()
                tendering_manner = content_list_x.xpath('.//div[2]/div[1]/p[1]/span[2]/text()').extract_first()
                Product = content_list_x.xpath('.//div[2]/div[1]/p[2]/span[2]/text()').extract_first()
                expiry_date = content_list_x.xpath('.//div[2]/div[2]/p[1]/span[2]/text()').extract_first()
                province = content_list_x.xpath('.//div[2]/div[2]/p[2]/span[2]/text()').extract_first()
                print(toptype, publicity_date, title, tendering_manner, Product, expiry_date, province)

Create a new start.py file to run the spider:

    from scrapy import cmdline

    cmdline.execute('scrapy crawl bilian'.split())

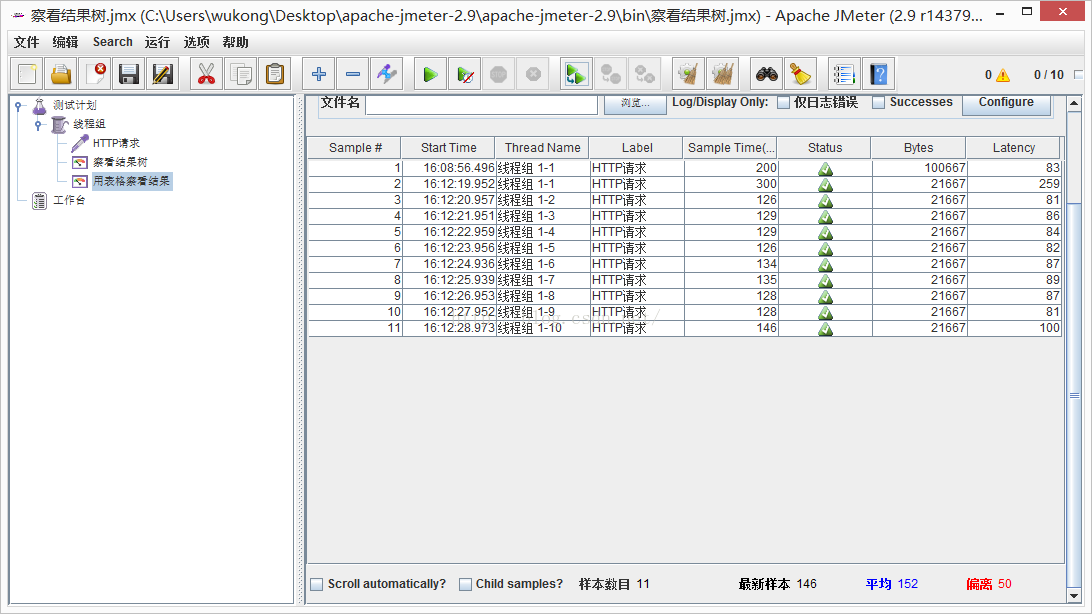

Check the output:

D:\Python3.8.5\python.exe D:/zhaobiao/zhaobiao/spiders/start.py

2020-10-06 06:31:34 [scrapy.utils.log] INFO: Scrapy 2.3.0 started (bot: zhaobiao)

2020-10-06 06:31:34 [scrapy.utils.log] INFO: Versions: lxml 4.5.2.0, libxml2 2.9.5, cssselect 1.1.0, parsel 1.6.0, w3lib 1.22.0, Twisted 20.3.0, Python 3.8.5 (tags/v3.8.5:580fbb0, Jul 20 2020, 15:43:08) [MSC v.1926 32 bit (Intel)], pyOpenSSL 19.1.0 (OpenSSL 1.1.1h 22 Sep 2020), cryptography 3.1.1, Platform Windows-10-10.0.18362-SP0

2020-10-06 06:31:34 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.selectreactor.SelectReactor

2020-10-06 06:31:34 [scrapy.crawler] INFO: Overridden settings:

{'BOT_NAME': 'zhaobiao','DOWNLOAD_DELAY': 3,'NEWSPIDER_MODULE': 'zhaobiao.spiders','SPIDER_MODULES': ['zhaobiao.spiders']}

2020-10-06 06:31:34 [scrapy.extensions.telnet] INFO: Telnet Password: ec14f54ed056d314

2020-10-06 06:31:34 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats','scrapy.extensions.telnet.TelnetConsole','scrapy.extensions.logstats.LogStats']

2020-10-06 06:31:35 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware','scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware','scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware','scrapy.downloadermiddlewares.useragent.UserAgentMiddleware','zhaobiao.middlewares.ZhaobiaoDownloaderMiddleware','scrapy.downloadermiddlewares.retry.RetryMiddleware','scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware','scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware','scrapy.downloadermiddlewares.redirect.RedirectMiddleware','scrapy.downloadermiddlewares.cookies.CookiesMiddleware','scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware','scrapy.downloadermiddlewares.stats.DownloaderStats']

2020-10-06 06:31:35 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware','scrapy.spidermiddlewares.offsite.OffsiteMiddleware','scrapy.spidermiddlewares.referer.RefererMiddleware','scrapy.spidermiddlewares.urllength.UrlLengthMiddleware','scrapy.spidermiddlewares.depth.DepthMiddleware']

2020-10-06 06:31:35 [scrapy.middleware] INFO: Enabled item pipelines:

['zhaobiao.pipelines.ZhaobiaoPipeline']

2020-10-06 06:31:35 [scrapy.core.engine] INFO: Spider opened

2020-10-06 06:31:35 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2020-10-06 06:31:35 [bilian] INFO: Spider opened: bilian

2020-10-06 06:31:35 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2020-10-06 06:31:35 [scrapy.core.engine] DEBUG: Crawled (200) <POST https://ss.ebnew.com/tradingSearch/index.htm> (referer: None)

结果 发布日期:2020-09-30 合肥市包河区同安街道周谷堆社区居民委员会HF20200930144955483001直接采购... 询价采购 路由器,分流器 None None

公示 发布日期:2020-09-30 中国邮政集团有限公司云南省分公司4G无线 国内公开 2020-10-05 09:00:00 None

公示 发布日期:2020-09-30 未来网络试验设施国家重大科技基础设施项目深圳信息通信研究院承建系统—信... 国际公开 信号分析仪 2020-10-12 23:59:00 None

结果 发布日期:2020-09-30 内蒙古超高压供电局2020年第十一批集中采购(2020年三季度生产性消耗性材料... 询价采购 网络路由器 None None

公告 发布日期:2020-09-30 东莞市消防救援支队电子政务外网和指挥网链路租赁项目公开招标公告 国内公开 路由器 None None

公告 发布日期:2020-09-30 河南省滑县政务服务和大数据管理局滑县大数据中心建设项目-公开招标公告 国内公开 系统集成 None None

公告 发布日期:2020-09-30 内蒙古自治区团校内蒙古自治区团教务处标准化数字考场系统竞争性磋商 国内公开 路由器 None None

公告 发布日期:2020-09-30 西北工业大学翱翔体育馆网络升级改造采购项目招标公告 国内公开 负载均衡设备,检测设备 None None

结果 发布日期:2020-09-30 国家税务总局石家庄市税务局稽查局“四室一包”建设(硬件采购及集成)中标... 单一来源采购 音视频播放设备,光端机,扩音设备,LED大屏,... None None

公告 发布日期:2020-09-30 中国信息通信研究院工业互联网标识解析国家顶级节点(一期)建设——国拨追... 国内公开 系统集成 None None

2020-10-06 06:31:35 [scrapy.core.engine] INFO: Closing spider (finished)

2020-10-06 06:31:35 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/request_bytes': 783,'downloader/request_count': 1,'downloader/request_method_count/POST': 1,'downloader/response_bytes': 14229,'downloader/response_count': 1,'downloader/response_status_count/200': 1,'elapsed_time_seconds': 0.333967,'finish_reason': 'finished','finish_time': datetime.datetime(2020, 10, 5, 22, 31, 35, 613753),'log_count/DEBUG': 1,'log_count/INFO': 11,'response_received_count': 1,'scheduler/dequeued': 1,'scheduler/dequeued/memory': 1,'scheduler/enqueued': 1,'scheduler/enqueued/memory': 1,'start_time': datetime.datetime(2020, 10, 5, 22, 31, 35, 279786)}

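2020-10-06 06:31:35 [scrapy.core.engine] INFO: Spider closed (finished)

Process finished with exit code 0

The lines of text in the middle are the tender records that were scraped. A None means the XPath matched no node for that record, so extract_first() returned None. The last column (province) is None in every row because the province XPath used in this run contained a typo, spanp[2] instead of span[2], which can never match.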
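To make the None values concrete, here is a minimal sketch of how a path that matches nothing yields None. It uses the standard library's xml.etree.ElementTree as a stand-in for Scrapy's selectors, and the markup and the `first_text` helper are hypothetical, loosely modelled on a single search-result entry rather than copied from the real page.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup for one search-result entry.
SAMPLE = """
<div class="ebnew-content-list">
  <div>
    <div><i>结果</i><i>发布日期:2020-09-30</i><a>示例标题</a></div>
    <div>
      <div><p><span>招标方式:</span><span>询价采购</span></p></div>
    </div>
  </div>
</div>
"""

root = ET.fromstring(SAMPLE)
entry = root.find("./div")  # the first (and only) entry


def first_text(node, path):
    """Return the text of the first match, or None when nothing matches."""
    found = node.find(path)
    return found.text if found is not None else None


toptype = first_text(entry, "./div/i[1]")
manner = first_text(entry, "./div[2]/div[1]/p[1]/span[2]")
missing = first_text(entry, "./div[2]/div[2]/p[1]/span[2]")  # no second inner div here
typo = first_text(entry, "./div[2]/div[1]/p[1]/spanp[2]")    # misspelled tag name

print(toptype, manner, missing, typo)
```

A missing node and a misspelled tag name look identical in the output, which is why a column that is None in every row is worth checking for a typo in its path.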

Next, extract information from the second-level (detail) page. First, look at the page content:

Continue writing the code:

    # Inside BilianSpider:
    def start_requests(self):
        from_data = self.form_data
        from_data['key'] = '路由器'  # search keyword ("router")
        from_data['currentPage'] = '2'
        yield scrapy.FormRequest(
            url='https://ss.ebnew.com/tradingSearch/index.htm',
            formdata=from_data,
            callback=self.parse_page1,
        )

    # def parse_page1(self, response):
    #     with open('2.html', 'wb') as f:
    #         f.write(response.body)
    # //div[@class="abstract-box mg-t25 ebnew-border-bottom mg-r15"]/div/i[1]/text()

    def parse_page1(self, response):
        content_list_x_s = response.xpath('//div[@class="ebnew-content-list"]/div')
        for content_list_x in content_list_x_s:
            toptype = content_list_x.xpath('./div/i[1]/text()').extract_first()
            publicity_date = content_list_x.xpath('./div/i[2]/text()').extract_first()
            title = content_list_x.xpath('./div/a/text()').extract_first()
            tendering_manner = content_list_x.xpath('.//div[2]/div[1]/p[1]/span[2]/text()').extract_first()
            Product = content_list_x.xpath('.//div[2]/div[1]/p[2]/span[2]/text()').extract_first()
            expiry_date = content_list_x.xpath('.//div[2]/div[2]/p[1]/span[2]/text()').extract_first()
            province = content_list_x.xpath('.//div[2]/div[2]/p[2]/span[2]/text()').extract_first()
            # print(toptype, publicity_date, title, tendering_manner, Product, expiry_date, province)

    def parse_page2(self, response):
        # Rows of the project information list on the detail page
        content_list_x_s1 = response.xpath('//ul[contains(@class,"ebnew-project-information")]/li')
        projectcode = content_list_x_s1[0].xpath('./span[2]/text()').extract_first()
        industry = content_list_x_s1[7].xpath('./span[2]/text()').extract_first()
        print(projectcode, industry)

Run it again:

D:\Python3.8.5\python.exe D:/zhaobiao/zhaobiao/spiders/start.py

2020-10-06 06:41:27 [scrapy.utils.log] INFO: Scrapy 2.3.0 started (bot: zhaobiao)

2020-10-06 06:41:27 [scrapy.utils.log] INFO: Versions: lxml 4.5.2.0, libxml2 2.9.5, cssselect 1.1.0, parsel 1.6.0, w3lib 1.22.0, Twisted 20.3.0, Python 3.8.5 (tags/v3.8.5:580fbb0, Jul 20 2020, 15:43:08) [MSC v.1926 32 bit (Intel)], pyOpenSSL 19.1.0 (OpenSSL 1.1.1h 22 Sep 2020), cryptography 3.1.1, Platform Windows-10-10.0.18362-SP0

2020-10-06 06:41:27 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.selectreactor.SelectReactor

2020-10-06 06:41:27 [scrapy.crawler] INFO: Overridden settings:

{'BOT_NAME': 'zhaobiao','DOWNLOAD_DELAY': 3,'NEWSPIDER_MODULE': 'zhaobiao.spiders','SPIDER_MODULES': ['zhaobiao.spiders']}

2020-10-06 06:41:27 [scrapy.extensions.telnet] INFO: Telnet Password: e3462c71dbccaed2

2020-10-06 06:41:27 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats','scrapy.extensions.telnet.TelnetConsole','scrapy.extensions.logstats.LogStats']

2020-10-06 06:41:28 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware','scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware','scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware','scrapy.downloadermiddlewares.useragent.UserAgentMiddleware','zhaobiao.middlewares.ZhaobiaoDownloaderMiddleware','scrapy.downloadermiddlewares.retry.RetryMiddleware','scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware','scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware','scrapy.downloadermiddlewares.redirect.RedirectMiddleware','scrapy.downloadermiddlewares.cookies.CookiesMiddleware','scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware','scrapy.downloadermiddlewares.stats.DownloaderStats']

2020-10-06 06:41:28 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware','scrapy.spidermiddlewares.offsite.OffsiteMiddleware','scrapy.spidermiddlewares.referer.RefererMiddleware','scrapy.spidermiddlewares.urllength.UrlLengthMiddleware','scrapy.spidermiddlewares.depth.DepthMiddleware']

2020-10-06 06:41:28 [scrapy.middleware] INFO: Enabled item pipelines:

['zhaobiao.pipelines.ZhaobiaoPipeline']

2020-10-06 06:41:28 [scrapy.core.engine] INFO: Spider opened

2020-10-06 06:41:28 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2020-10-06 06:41:28 [bilian] INFO: Spider opened: bilian

2020-10-06 06:41:28 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2020-10-06 06:41:28 [scrapy.core.engine] DEBUG: Crawled (200) <POST https://ss.ebnew.com/tradingSearch/index.htm> (referer: None)

2020-10-06 06:41:28 [scrapy.core.engine] INFO: Closing spider (finished)

2020-10-06 06:41:28 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/request_bytes': 783,'downloader/request_count': 1,'downloader/request_method_count/POST': 1,'downloader/response_bytes': 14229,'downloader/response_count': 1,'downloader/response_status_count/200': 1,'elapsed_time_seconds': 0.344614,'finish_reason': 'finished','finish_time': datetime.datetime(2020, 10, 5, 22, 41, 28, 813691),'log_count/DEBUG': 1,'log_count/INFO': 11,'response_received_count': 1,'scheduler/dequeued': 1,'scheduler/dequeued/memory': 1,'scheduler/enqueued': 1,'scheduler/enqueued/memory': 1,'start_time': datetime.datetime(2020, 10, 5, 22, 41, 28, 469077)}

2020-10-06 06:41:28 [scrapy.core.engine] INFO: Spider closed (finished)

Process finished with exit code 0

This run extracted nothing at all. Surely it should at least have returned an empty list.

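Next comes the process of analyzing and troubleshooting the problem.

Check the XPath syntax:

    content_list_x_s1 = response.xpath('//ul[contains(@class,"ebnew-project-information")]/li')
    projectcode = content_list_x_s1[0].xpath('./span[2]/text()').extract_first()
    industry = content_list_x_s1[7].xpath('./span[2]/text()').extract_first()

I checked the syntax repeatedly and tested it repeatedly in the browser, where it does return projectcode and industry.

Suspecting a problem with XPath itself, I switched to extracting with a regular expression.

First import the re module, then write the regular expression: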

    def parse_page2(self, response):
        content_list_x_s1 = response.xpath('//ul[contains(@class,"ebnew-project-information")]/li')
        projectcode = content_list_x_s1[0].xpath('./span[2]/text()').extract_first()
        industry = content_list_x_s1[7].xpath('./span[2]/text()').extract_first()
        # Fall back to a regular expression when XPath finds no project code
        if not projectcode:
            projectcode_find = re.findall(
                r'项目编号[::]{0,1}\s{0,2}([a-zA-Z0-9]{10,80})',
                response.body.decode('utf-8'),
            )
            if projectcode_find:
                projectcode = projectcode_find[0]
            else:
                print('-' * 90)
        print(projectcode, industry)
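The regular expression fallback can be tried on its own, outside Scrapy. The sketch below is adapted from the pattern above; the `find_project_code` helper and the two page fragments are hypothetical, and accepting a full-width colon in the character class is an assumption about the page's punctuation.

```python
import re

# Adapted from the spider's pattern, written as a raw string.
# Accepting both ':' and the full-width ':' is an assumption.
PROJECT_CODE_RE = re.compile(r'项目编号[::]{0,1}\s{0,2}([a-zA-Z0-9]{10,80})')


def find_project_code(html):
    """Return the first project code found in html, or None if there is none."""
    matches = PROJECT_CODE_RE.findall(html)  # always a list, possibly empty
    return matches[0] if matches else None


# Hypothetical page fragments, for illustration only.
with_code = '<p>项目编号:HF20200930144955483001</p>'
without_code = '<p>no project code on this page</p>'

print(find_project_code(with_code))
print(PROJECT_CODE_RE.findall(without_code))
```

Because re.findall always returns a list, a page without a match still produces visible output (an empty list here, a row of dashes in the spider). Seeing no output at all therefore points at the method not running, not at the pattern.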

Run it again and look at the result:

D:\Python3.8.5\python.exe D:/zhaobiao/zhaobiao/spiders/start.py

2020-10-06 06:48:50 [scrapy.utils.log] INFO: Scrapy 2.3.0 started (bot: zhaobiao)

2020-10-06 06:48:50 [scrapy.utils.log] INFO: Versions: lxml 4.5.2.0, libxml2 2.9.5, cssselect 1.1.0, parsel 1.6.0, w3lib 1.22.0, Twisted 20.3.0, Python 3.8.5 (tags/v3.8.5:580fbb0, Jul 20 2020, 15:43:08) [MSC v.1926 32 bit (Intel)], pyOpenSSL 19.1.0 (OpenSSL 1.1.1h 22 Sep 2020), cryptography 3.1.1, Platform Windows-10-10.0.18362-SP0

2020-10-06 06:48:50 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.selectreactor.SelectReactor

2020-10-06 06:48:50 [scrapy.crawler] INFO: Overridden settings:

{'BOT_NAME': 'zhaobiao','DOWNLOAD_DELAY': 3,'NEWSPIDER_MODULE': 'zhaobiao.spiders','SPIDER_MODULES': ['zhaobiao.spiders']}

2020-10-06 06:48:50 [scrapy.extensions.telnet] INFO: Telnet Password: adb2ef029ca73dfb

2020-10-06 06:48:50 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats','scrapy.extensions.telnet.TelnetConsole','scrapy.extensions.logstats.LogStats']

2020-10-06 06:48:51 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware','scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware','scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware','scrapy.downloadermiddlewares.useragent.UserAgentMiddleware','zhaobiao.middlewares.ZhaobiaoDownloaderMiddleware','scrapy.downloadermiddlewares.retry.RetryMiddleware','scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware','scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware','scrapy.downloadermiddlewares.redirect.RedirectMiddleware','scrapy.downloadermiddlewares.cookies.CookiesMiddleware','scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware','scrapy.downloadermiddlewares.stats.DownloaderStats']

2020-10-06 06:48:51 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware','scrapy.spidermiddlewares.offsite.OffsiteMiddleware','scrapy.spidermiddlewares.referer.RefererMiddleware','scrapy.spidermiddlewares.urllength.UrlLengthMiddleware','scrapy.spidermiddlewares.depth.DepthMiddleware']

2020-10-06 06:48:51 [scrapy.middleware] INFO: Enabled item pipelines:

['zhaobiao.pipelines.ZhaobiaoPipeline']

2020-10-06 06:48:51 [scrapy.core.engine] INFO: Spider opened

2020-10-06 06:48:51 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2020-10-06 06:48:51 [bilian] INFO: Spider opened: bilian

2020-10-06 06:48:51 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2020-10-06 06:48:51 [scrapy.core.engine] DEBUG: Crawled (200) <POST https://ss.ebnew.com/tradingSearch/index.htm> (referer: None)

2020-10-06 06:48:51 [scrapy.core.engine] INFO: Closing spider (finished)

2020-10-06 06:48:51 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/request_bytes': 783,'downloader/request_count': 1,'downloader/request_method_count/POST': 1,'downloader/response_bytes': 14229,'downloader/response_count': 1,'downloader/response_status_count/200': 1,'elapsed_time_seconds': 0.347671,'finish_reason': 'finished','finish_time': datetime.datetime(2020, 10, 5, 22, 48, 51, 602575),'log_count/DEBUG': 1,'log_count/INFO': 11,'response_received_count': 1,'scheduler/dequeued': 1,'scheduler/dequeued/memory': 1,'scheduler/enqueued': 1,'scheduler/enqueued/memory': 1,'start_time': datetime.datetime(2020, 10, 5, 22, 48, 51, 254904)}

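2020-10-06 06:48:51 [scrapy.core.engine] INFO: Spider closed (finished)

Process finished with exit code 0

Nothing changed. So where exactly is the problem? Is it the regular expression? But even when nothing matches, findall still returns a list, and here there is no output at all. It looks as if the method was never called.

So was the spider blocked? (After all, I wasn't using a proxy IP.)

A little discouraging…

Troubleshooting should start at the source, so look at the status code information in the run output: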

2020-10-06 06:48:51 [bilian] INFO: Spider opened: bilian

2020-10-06 06:48:51 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2020-10-06 06:48:51 [scrapy.core.engine] DEBUG: Crawled (200) <POST https://ss.ebnew.com/tradingSearch/index.htm> (referer: None)

2020-10-06 06:48:51 [scrapy.core.engine] INFO: Closing spider (finished)

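DEBUG shows 200, which means the request itself succeeded. But look closely at the POST request: it is the request for the first page (the search results), and it is the only request in the log.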
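Reading the log for which requests were actually made can be automated. The following is a minimal sketch, assuming the "Crawled (status) <METHOD url>" line format seen in the logs of this post; `crawled_requests` is a hypothetical helper, not part of Scrapy.

```python
import re

# Matches Scrapy's "Crawled (200) <POST https://...>" debug lines.
CRAWLED_RE = re.compile(r'Crawled \((\d{3})\) <(GET|POST) (\S+)>')


def crawled_requests(log_text):
    """Return (status, method, url) for every 'Crawled' line in the log text."""
    return [(int(status), method, url)
            for status, method, url in CRAWLED_RE.findall(log_text)]


log = """2020-10-06 06:48:51 [bilian] INFO: Spider opened: bilian
2020-10-06 06:48:51 [scrapy.core.engine] DEBUG: Crawled (200) <POST https://ss.ebnew.com/tradingSearch/index.htm> (referer: None)
2020-10-06 06:48:51 [scrapy.core.engine] INFO: Closing spider (finished)"""

requests_seen = crawled_requests(log)
print(requests_seen)
```

A single entry for the search URL and none for a detail page shows that the detail page was never requested, so no callback could have parsed it.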

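Now look at the parse_page2 I defined:

    def parse_page2(self, response):

This method is meant to be invoked as a callback:

    def start_requests(self):
        from_data = self.form_data
        from_data['key'] = '路由器'  # search keyword ("router")
        from_data['currentPage'] = '2'
        yield scrapy.FormRequest(
            url='https://ss.ebnew.com/tradingSearch/index.htm',
            formdata=from_data,
            callback=self.parse_page1,
        )

But in fact the callback on my request is parse_page1 (the handler for the previous page), so parse_page2 is never invoked.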
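The effect of the callback can be shown with a toy model. This is not Scrapy's engine, only a sketch under the assumption that each response is delivered solely to the callback attached to its request; the `Request` class and `run` function here are hypothetical stand-ins, and no network access takes place.

```python
from collections import deque


class Request:
    """Toy stand-in for a request: a URL plus the callback that handles it."""

    def __init__(self, url, callback):
        self.url = url
        self.callback = callback


def run(start_requests):
    """Process requests in order; each response goes only to its own callback."""
    queue = deque(start_requests)
    while queue:
        request = queue.popleft()
        response = f"<response for {request.url}>"  # placeholder, no download
        # A callback may return None or yield follow-up requests.
        for follow_up in request.callback(response) or []:
            queue.append(follow_up)


calls = []


def parse_page2(response):
    calls.append("parse_page2")


def parse_page1_no_follow(response):
    calls.append("parse_page1")  # extracts data but schedules nothing


def parse_page1_with_follow(response):
    calls.append("parse_page1")
    # Scheduling a detail-page request is what makes parse_page2 reachable.
    yield Request("https://www.ebnew.com/businessShow/653378328.html", parse_page2)


run([Request("https://ss.ebnew.com/tradingSearch/index.htm", parse_page1_no_follow)])
calls_without_follow = list(calls)

calls.clear()
run([Request("https://ss.ebnew.com/tradingSearch/index.htm", parse_page1_with_follow)])
calls_with_follow = list(calls)

print(calls_without_follow)
print(calls_with_follow)
```

Defining a method on the spider is not enough for it to run: some request has to name it as its callback, either from start_requests or from an earlier callback.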

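So another start_requests is needed. Copy the previous start_requests, comment out the from_data lines, request the detail page with a plain scrapy.Request (which takes no formdata argument), and set the callback to parse_page2. In Python, the later definition of a method with the same name overrides the earlier one.

    def start_requests(self):
        # from_data = self.form_data
        # from_data['key'] = '路由器'
        # from_data['currentPage'] = '2'
        # Request the detail page directly (the URL shown in the log of this run)
        yield scrapy.Request(
            url='https://www.ebnew.com/businessShow/653378328.html',
            callback=self.parse_page2,
        )

Run it again: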

D:\Python3.8.5\python.exe D:/zhaobiao/zhaobiao/spiders/start.py

2020-10-06 11:13:26 [scrapy.utils.log] INFO: Scrapy 2.3.0 started (bot: zhaobiao)

2020-10-06 11:13:26 [scrapy.utils.log] INFO: Versions: lxml 4.5.2.0, libxml2 2.9.5, cssselect 1.1.0, parsel 1.6.0, w3lib 1.22.0, Twisted 20.3.0, Python 3.8.5 (tags/v3.8.5:580fbb0, Jul 20 2020, 15:43:08) [MSC v.1926 32 bit (Intel)], pyOpenSSL 19.1.0 (OpenSSL 1.1.1h 22 Sep 2020), cryptography 3.1.1, Platform Windows-10-10.0.18362-SP0

2020-10-06 11:13:26 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.selectreactor.SelectReactor

2020-10-06 11:13:27 [scrapy.crawler] INFO: Overridden settings:

{'BOT_NAME': 'zhaobiao','DOWNLOAD_DELAY': 3,'NEWSPIDER_MODULE': 'zhaobiao.spiders','SPIDER_MODULES': ['zhaobiao.spiders']}

2020-10-06 11:13:27 [scrapy.extensions.telnet] INFO: Telnet Password: c68f138b1a7f0a2d

2020-10-06 11:13:27 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats','scrapy.extensions.telnet.TelnetConsole','scrapy.extensions.logstats.LogStats']

2020-10-06 11:13:30 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware','scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware','scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware','scrapy.downloadermiddlewares.useragent.UserAgentMiddleware','zhaobiao.middlewares.ZhaobiaoDownloaderMiddleware','scrapy.downloadermiddlewares.retry.RetryMiddleware','scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware','scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware','scrapy.downloadermiddlewares.redirect.RedirectMiddleware','scrapy.downloadermiddlewares.cookies.CookiesMiddleware','scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware','scrapy.downloadermiddlewares.stats.DownloaderStats']

2020-10-06 11:13:30 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware','scrapy.spidermiddlewares.offsite.OffsiteMiddleware','scrapy.spidermiddlewares.referer.RefererMiddleware','scrapy.spidermiddlewares.urllength.UrlLengthMiddleware','scrapy.spidermiddlewares.depth.DepthMiddleware']

2020-10-06 11:13:30 [scrapy.middleware] INFO: Enabled item pipelines:

['zhaobiao.pipelines.ZhaobiaoPipeline']

2020-10-06 11:13:30 [scrapy.core.engine] INFO: Spider opened

2020-10-06 11:13:30 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2020-10-06 11:13:30 [bilian] INFO: Spider opened: bilian

2020-10-06 11:13:30 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2020-10-06 11:13:30 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://www.ebnew.com/businessShow/653378328.html> (referer: None)

HF20200930144955483001 ;网络设备;电工仪表;

2020-10-06 11:13:30 [scrapy.core.engine] INFO: Closing spider (finished)

2020-10-06 11:13:30 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/request_bytes': 469,'downloader/request_count': 1,'downloader/request_method_count/GET': 1,'downloader/response_bytes': 9957,'downloader/response_count': 1,'downloader/response_status_count/200': 1,'elapsed_time_seconds': 0.559952,'finish_reason': 'finished','finish_time': datetime.datetime(2020, 10, 6, 3, 13, 30, 676786),'log_count/DEBUG': 1,'log_count/INFO': 11,'response_received_count': 1,'scheduler/dequeued': 1,'scheduler/dequeued/memory': 1,'scheduler/enqueued': 1,'scheduler/enqueued/memory': 1,'start_time': datetime.datetime(2020, 10, 6, 3, 13, 30, 116834)}

2020-10-06 11:13:30 [scrapy.core.engine] INFO: Spider closed (finished)

Process finished with exit code 0

2020-10-06 11:13:30 [bilian] INFO: Spider opened: bilian

2020-10-06 11:13:30 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2020-10-06 11:13:30 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://www.ebnew.com/businessShow/653378328.html> (referer: None)

HF20200930144955483001 ;网络设备;电工仪表;

2020-10-06 11:13:30 [scrapy.core.engine] INFO: Closing spider (finished)

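Got the project code: HF20200930144955483001

And the industry as well: 网络设备;电工仪表; (network equipment; electrical instruments)

Problems drive progress. Solving one does more than show a grasp of the subject; it also brings to light the weak spots in how I analyze problems.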