37.scrapy解决翻页及采集杭州造价网站材料数据

1.目标采集地址: http://183.129.219.195:8081/bs/hzzjb/web/list

2.这里的翻页还是较为简单的,只要模拟post请求发送data包含关键参数就能获取下一页页面信息。

获取页面标签信息的方法不合适,是之前写的,应该用xpath匹配整个table数据获取父类选择器再去二次匹配子类标签数据。

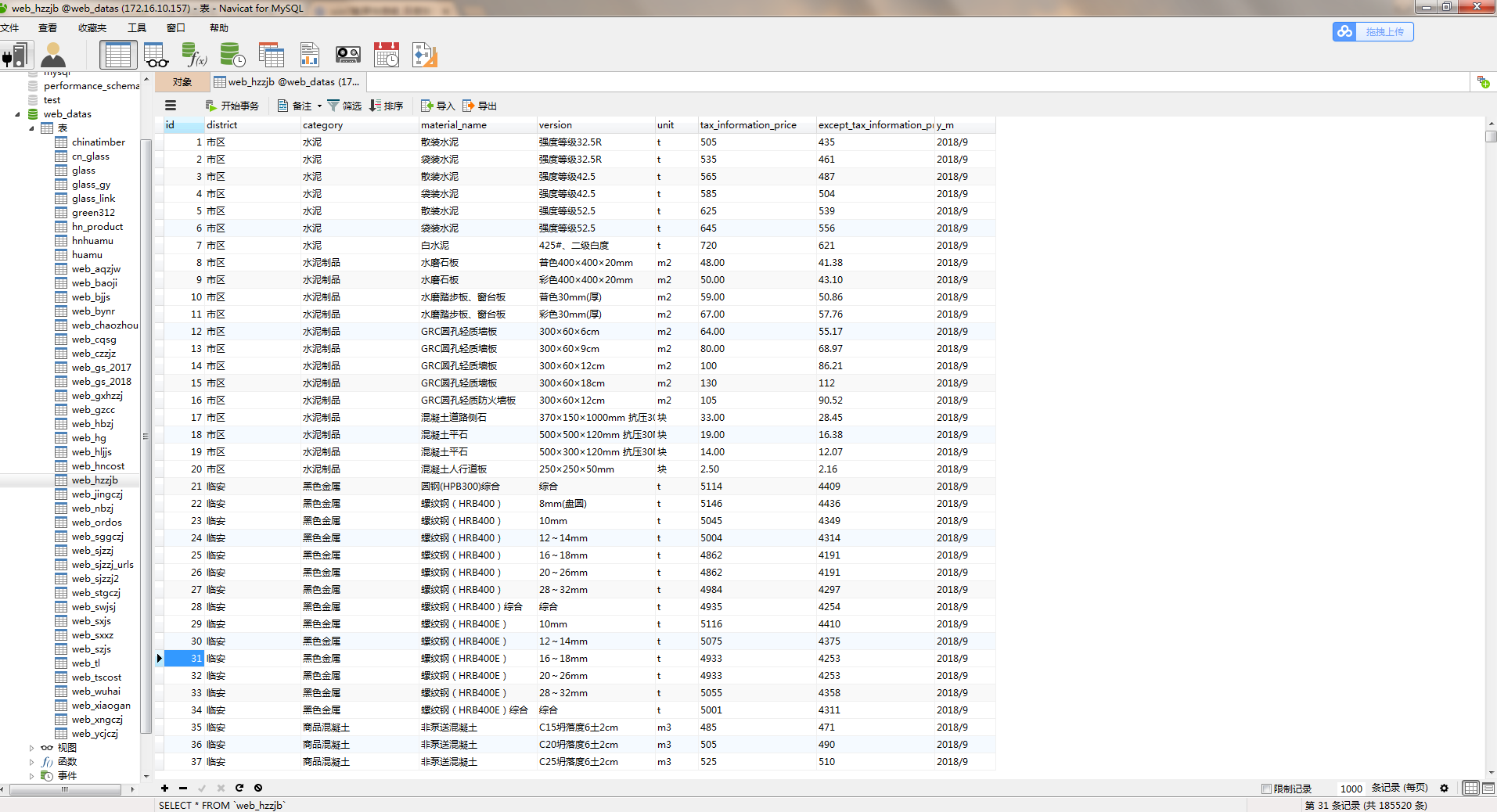

3.采集结果如下:

#hzzjb.py# -*- coding: utf-8 -*- import scrapy import json import re from hzzjb_web.items import HzzjbWebItem class HzzjbSpider(scrapy.Spider):name = 'hzzjb'allowed_domains = ['183.129.219.195:8081/bs']start_urls = ['http://183.129.219.195:8081/bs/hzzjb/web/list']custom_settings = {"DOWNLOAD_DELAY": 0.2,"ITEM_PIPELINES": {'hzzjb_web.pipelines.MysqlPipeline': 320,},"DOWNLOADER_MIDDLEWARES": {'hzzjb_web.middlewares.HzzjbWebDownloaderMiddleware': 500},}def parse(self, response):_response=response.text# print(_response)try :#获取信息表tag_list=response.xpath("//table[@class='table1']//tr/td").extract()# print(tag_list)# for i in tag_list:# print(i)tag1=tag_list[:9]tag2=tag_list[9:18]tag3=tag_list[18:27]tag4=tag_list[27:36]tag5=tag_list[36:45]tag6=tag_list[45:54]tag7=tag_list[54:63]tag8=tag_list[63:72]tag9=tag_list[72:81]tag10=tag_list[81:90]tag11=tag_list[90:99]tag12=tag_list[99:108]tag13=tag_list[108:117]tag14=tag_list[117:126]tag15=tag_list[126:135]tag16=tag_list[135:144]tag17=tag_list[144:153]tag18=tag_list[153:162]tag19=tag_list[162:171]tag20=tag_list[171:180]list=[]list.append(tag1)list.append(tag2)list.append(tag3)list.append(tag4)list.append(tag5)list.append(tag6)list.append(tag7)list.append(tag8)list.append(tag9)list.append(tag10)list.append(tag11)list.append(tag12)list.append(tag13)list.append(tag14)list.append(tag15)list.append(tag16)list.append(tag17)list.append(tag18)list.append(tag19)list.append(tag20)print(list)except:print('————————————————网站编码有异常!————————————————————')for index,tag in enumerate(list):# print('*'*100)# print(index+1,TAG(i)) item = HzzjbWebItem()try:# 地区district = tag[0].replace('<td>','').replace('</td>','')# print(district)item['district'] = district# 类别category = tag[1].replace('<td>','').replace('</td>','')# print(category)item['category'] = category# 材料名称material_name = tag[2].replace('<td>','').replace('</td>','')# print(material_name)item['material_name'] = material_name# 规格及型号version = tag[3].replace('<td>','').replace('</td>','')# print(version)item['version'] = version# 单位unit = tag[4].replace('<td>','').replace('</td>','')# print(unit)item['unit'] = unit# 含税信息价tax_information_price = tag[5].replace('<td>','').replace('</td>','')# print(tax_information_price)item['tax_information_price'] = tax_information_price# 除税信息价except_tax_information_price = tag[6].replace('<td>','').replace('</td>','')# print(except_tax_information_price)item['except_tax_information_price'] = except_tax_information_price# 年/月year_month = tag[7].replace('<td>','').replace('</td>','')# print(year_month)item['y_m'] = year_monthexcept:pass# print('*'*100)yield itemfor i in range(2, 5032):# 翻页data={'mtype': '2','_query.nfStart':'','_query.yfStart':'','_query.nfEnd':'','_query.yfEnd':'','_query.dqstr':'','_query.dq':'','_query.lbtype':'','_query.clmc':'','_query.ggjxh':'','pageNumber': '{}'.format(i),'pageSize':'','orderColunm':'','orderMode':'',}yield scrapy.FormRequest(url='http://183.129.219.195:8081/bs/hzzjb/web/list', callback=self.parse, formdata=data, method="POST", dont_filter=True)

#items.py# -*- coding: utf-8 -*-# Define here the models for your scraped items # # See documentation in: # https://doc.scrapy.org/en/latest/topics/items.htmlimport scrapyclass HzzjbWebItem(scrapy.Item):# define the fields for your item here like:# name = scrapy.Field() district=scrapy.Field()category=scrapy.Field()material_name=scrapy.Field()version=scrapy.Field()unit=scrapy.Field()tax_information_price=scrapy.Field()except_tax_information_price=scrapy.Field()y_m=scrapy.Field()

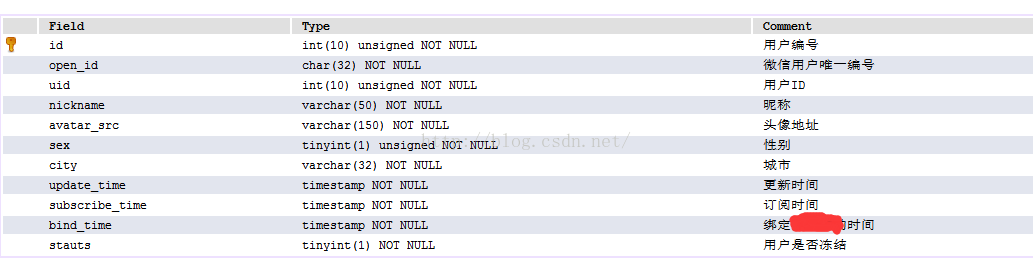

#piplines.py# -*- coding: utf-8 -*-# Define your item pipelines here # # Don't forget to add your pipeline to the ITEM_PIPELINES setting # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html from scrapy.conf import settings import pymysqlclass HzzjbWebPipeline(object):def process_item(self, item, spider):return item# 数据保存mysql class MysqlPipeline(object):def open_spider(self, spider):self.host = settings.get('MYSQL_HOST')self.port = settings.get('MYSQL_PORT')self.user = settings.get('MYSQL_USER')self.password = settings.get('MYSQL_PASSWORD')self.db = settings.get(('MYSQL_DB'))self.table = settings.get('TABLE')self.client = pymysql.connect(host=self.host, user=self.user, password=self.password, port=self.port, db=self.db, charset='utf8')def process_item(self, item, spider):item_dict = dict(item)cursor = self.client.cursor()values = ','.join(['%s'] * len(item_dict))keys = ','.join(item_dict.keys())sql = 'INSERT INTO {table}({keys}) VALUES ({values})'.format(table=self.table, keys=keys, values=values)try:if cursor.execute(sql, tuple(item_dict.values())): # 第一个值为sql语句第二个为 值 为一个元组print('数据入库成功!')self.client.commit()except Exception as e:print(e)

self.client.rollback()return itemdef close_spider(self, spider):self.client.close()

#setting.py# -*- coding: utf-8 -*-# Scrapy settings for hzzjb_web project # # For simplicity, this file contains only settings considered important or # commonly used. You can find more settings consulting the documentation: # # https://doc.scrapy.org/en/latest/topics/settings.html # https://doc.scrapy.org/en/latest/topics/downloader-middleware.html # https://doc.scrapy.org/en/latest/topics/spider-middleware.html BOT_NAME = 'hzzjb_web'SPIDER_MODULES = ['hzzjb_web.spiders'] NEWSPIDER_MODULE = 'hzzjb_web.spiders'# mysql配置参数 MYSQL_HOST = "172.16.0.55" MYSQL_PORT = 3306 MYSQL_USER = "root" MYSQL_PASSWORD = "concom603" MYSQL_DB = 'web_datas' TABLE = "web_hzzjb"# Crawl responsibly by identifying yourself (and your website) on the user-agent #USER_AGENT = 'hzzjb_web (+http://www.yourdomain.com)'# Obey robots.txt rules ROBOTSTXT_OBEY = False# Configure maximum concurrent requests performed by Scrapy (default: 16) #CONCURRENT_REQUESTS = 32# Configure a delay for requests for the same website (default: 0) # See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay # See also autothrottle settings and docs #DOWNLOAD_DELAY = 3 # The download delay setting will honor only one of: #CONCURRENT_REQUESTS_PER_DOMAIN = 16 #CONCURRENT_REQUESTS_PER_IP = 16# Disable cookies (enabled by default) #COOKIES_ENABLED = False# Disable Telnet Console (enabled by default) #TELNETCONSOLE_ENABLED = False# Override the default request headers: #DEFAULT_REQUEST_HEADERS = { # 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8', # 'Accept-Language': 'en', #}# Enable or disable spider middlewares # See https://doc.scrapy.org/en/latest/topics/spider-middleware.html #SPIDER_MIDDLEWARES = { # 'hzzjb_web.middlewares.HzzjbWebSpiderMiddleware': 543, #}# Enable or disable downloader middlewares # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html DOWNLOADER_MIDDLEWARES = {'hzzjb_web.middlewares.HzzjbWebDownloaderMiddleware': 500, }# Enable or disable extensions # See https://doc.scrapy.org/en/latest/topics/extensions.html #EXTENSIONS = { # 'scrapy.extensions.telnet.TelnetConsole': None, #}# Configure item pipelines # See https://doc.scrapy.org/en/latest/topics/item-pipeline.html ITEM_PIPELINES = {'hzzjb_web.pipelines.HzzjbWebPipeline': 300, }# Enable and configure the AutoThrottle extension (disabled by default) # See https://doc.scrapy.org/en/latest/topics/autothrottle.html #AUTOTHROTTLE_ENABLED = True # The initial download delay #AUTOTHROTTLE_START_DELAY = 5 # The maximum download delay to be set in case of high latencies #AUTOTHROTTLE_MAX_DELAY = 60 # The average number of requests Scrapy should be sending in parallel to # each remote server #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0 # Enable showing throttling stats for every response received: #AUTOTHROTTLE_DEBUG = False# Enable and configure HTTP caching (disabled by default) # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings #HTTPCACHE_ENABLED = True #HTTPCACHE_EXPIRATION_SECS = 0 #HTTPCACHE_DIR = 'httpcache' #HTTPCACHE_IGNORE_HTTP_CODES = [] #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

#middlewares.py# -*- coding: utf-8 -*-# Define here the models for your spider middleware # # See documentation in: # https://doc.scrapy.org/en/latest/topics/spider-middleware.htmlfrom scrapy import signalsclass HzzjbWebSpiderMiddleware(object):# Not all methods need to be defined. If a method is not defined,# scrapy acts as if the spider middleware does not modify the# passed objects. @classmethoddef from_crawler(cls, crawler):# This method is used by Scrapy to create your spiders.s = cls()crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)return sdef process_spider_input(self, response, spider):# Called for each response that goes through the spider# middleware and into the spider.# Should return None or raise an exception.return Nonedef process_spider_output(self, response, result, spider):# Called with the results returned from the Spider, after# it has processed the response.# Must return an iterable of Request, dict or Item objects.for i in result:yield idef process_spider_exception(self, response, exception, spider):# Called when a spider or process_spider_input() method# (from other spider middleware) raises an exception.# Should return either None or an iterable of Response, dict# or Item objects.passdef process_start_requests(self, start_requests, spider):# Called with the start requests of the spider, and works# similarly to the process_spider_output() method, except# that it doesn’t have a response associated.# Must return only requests (not items).for r in start_requests:yield rdef spider_opened(self, spider):spider.logger.info('Spider opened: %s' % spider.name)class HzzjbWebDownloaderMiddleware(object):# Not all methods need to be defined. If a method is not defined,# scrapy acts as if the downloader middleware does not modify the# passed objects. @classmethoddef from_crawler(cls, crawler):# This method is used by Scrapy to create your spiders.s = cls()crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)return sdef process_request(self, request, spider):# Called for each request that goes through the downloader# middleware.# Must either:# - return None: continue processing this request# - or return a Response object# - or return a Request object# - or raise IgnoreRequest: process_exception() methods of# installed downloader middleware will be calledreturn Nonedef process_response(self, request, response, spider):# Called with the response returned from the downloader.# Must either;# - return a Response object# - return a Request object# - or raise IgnoreRequestreturn responsedef process_exception(self, request, exception, spider):# Called when a download handler or a process_request()# (from other downloader middleware) raises an exception.# Must either:# - return None: continue processing this exception# - return a Response object: stops process_exception() chain# - return a Request object: stops process_exception() chainpassdef spider_opened(self, spider):spider.logger.info('Spider opened: %s' % spider.name)

posted on 2018-10-19 09:43 五杀摇滚小拉夫 阅读(...) 评论(...) 编辑 收藏