使用Spark的foreach算子及UDTF函数实现MySQL数据的一对多【Java】

背景

我们的数仓项目中遇到了这样一种场景,脱敏后内容大致如下:

| col1 | col2 | time1 | time2 |

|---|---|---|---|

| a1 | b1 | 2022-01-01 00:00:00 | 2022-01-05 00:00:00 |

| a2 | b2 | 2022-01-28 00:00:00 | 2022-02-03 00:00:00 |

| a3 | b3 | 2022-02-20 00:00:00 | 2022-02-25 00:00:00 |

| a4 | b4 | 2022-03-29 00:00:00 | 2022-04-02 00:00:00 |

表结构大概如下:

mysql> create database db_lzy;

Query OK, 1 row affected (0.00 sec)mysql> use db_lzy;

Database changed

mysql> show tables;

Empty set (0.00 sec)mysql> create table test_origin_20001128 (-> col1 varchar(200),-> col2 varchar(200),-> time1 datetime,-> time2 datetime-> )-> ;

Query OK, 0 rows affected (0.03 sec)mysql> create table test_result_20001128 (-> col1 varchar(200),-> col2 varchar(200),-> time3 varchar(200)-> )-> ;

Query OK, 0 rows affected (0.01 sec)mysql>

运算后的结果应该长这样:

| col1 | col2 | time3 |

|---|---|---|

| a1 | b1 | 2022-01-01 |

| a1 | b1 | 2022-01-02 |

| a1 | b1 | 2022-01-03 |

| a1 | b1 | 2022-01-04 |

| a1 | b1 | 2022-01-05 |

| a2 | b2 | 2022-01-28 |

| a2 | b2 | 2022-01-29 |

| a2 | b2 | 2022-01-30 |

| a2 | b2 | 2022-01-31 |

| a2 | b2 | 2022-02-01 |

| a2 | b2 | 2022-02-02 |

| a2 | b2 | 2022-02-03 |

| a3 | b3 | 2022-02-20 |

| a3 | b3 | 2022-02-21 |

| a3 | b3 | 2022-02-22 |

| a3 | b3 | 2022-02-23 |

| a3 | b3 | 2022-02-24 |

| a3 | b3 | 2022-02-25 |

| a4 | b4 | 2022-03-29 |

| a4 | b4 | 2022-03-30 |

| a4 | b4 | 2022-03-31 |

| a4 | b4 | 2022-04-01 |

| a4 | b4 | 2022-04-02 |

显然应该使用一个UDTF函数。考虑到数据库应该尽可能存数据,而非消耗大量资源去运算,笔者最先想到的就是使用Spark的foreach算子实现该需求。运算应该尽可能放在Java这一侧,当然数据量不大时,也可以不用Spark,直接JDBC迭代器遍历一遍就OK了。数据量大时,还是应该使用Spark这类分布式运算引擎。

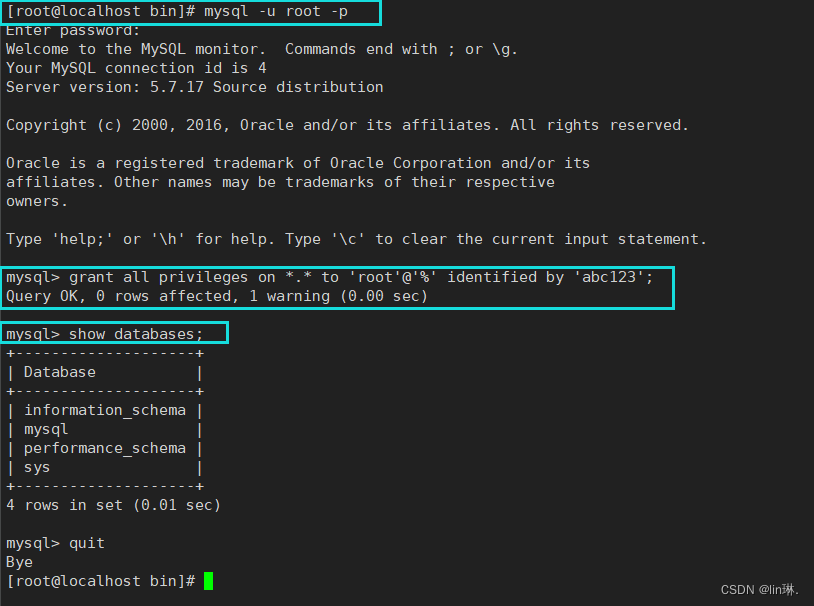

数据准备

简单插一些数据来模拟。实际prod环境当然不止这点数据!!!

mysql> insert into test_origin_20001128 values('a1','b1','2022-01-01 00:00:00','2022-01-01 00:00:00');

Query OK, 1 row affected (0.07 sec)mysql> insert into test_origin_20001128 values('a2','b2','2022-01-28 00:00:00','2022-02-03 00:00:00');

Query OK, 1 row affected (0.01 sec)mysql> insert into test_origin_20001128 values('a3','b3','2022-02-20 00:00:00','2022-02-25 00:00:00');

Query OK, 1 row affected (0.00 sec)mysql> insert into test_origin_20001128 values('a4','b4','2022-03-29 00:00:00','2022-04-02 00:00:00');

Query OK, 1 row affected (0.00 sec)mysql> select * from test_origin_20001128;

+------+------+---------------------+---------------------+

| col1 | col2 | time1 | time2 |

+------+------+---------------------+---------------------+

| a1 | b1 | 2022-01-01 00:00:00 | 2022-01-01 00:00:00 |

| a2 | b2 | 2022-01-28 00:00:00 | 2022-02-03 00:00:00 |

| a3 | b3 | 2022-02-20 00:00:00 | 2022-02-25 00:00:00 |

| a4 | b4 | 2022-03-29 00:00:00 | 2022-04-02 00:00:00 |

+------+------+---------------------+---------------------+

4 rows in set (0.00 sec)mysql>Java代码

pom.xml

<properties><maven.compiler.source>8</maven.compiler.source><maven.compiler.target>8</maven.compiler.target><scala.version>2.12.12</scala.version><scala.binary.version>2.12</scala.binary.version><spark.version>3.3.0</spark.version><encoding>UTF-8</encoding></properties><dependencies><dependency><groupId>org.scala-lang</groupId><artifactId>scala-library</artifactId><version>${scala.version}</version></dependency><!-- 添加spark依赖 --><dependency><groupId>org.apache.spark</groupId><artifactId>spark-core_${scala.binary.version}</artifactId><version>${spark.version}</version></dependency><dependency><groupId>org.apache.spark</groupId><artifactId>spark-sql_${scala.binary.version}</artifactId><version>${spark.version}</version></dependency><dependency><groupId>org.apache.spark</groupId><artifactId>spark-streaming_${scala.binary.version}</artifactId><version>${spark.version}</version></dependency><dependency><groupId>org.apache.spark</groupId><artifactId>spark-hive_${scala.binary.version}</artifactId><version>${spark.version}</version></dependency><dependency><groupId>org.apache.spark</groupId><artifactId>spark-streaming-kafka-0-10_${scala.binary.version}</artifactId><version>${spark.version}</version></dependency><dependency><groupId>org.apache.spark</groupId><artifactId>spark-sql-kafka-0-10_${scala.binary.version}</artifactId><version>${spark.version}</version></dependency><!-- 可以使用Lombok的@注解--><dependency><groupId>org.projectlombok</groupId><artifactId>lombok</artifactId><version>1.16.20</version></dependency><!-- MySQL驱动包--><dependency><groupId>mysql</groupId><artifactId>mysql-connector-java</artifactId><version>8.0.28</version></dependency></dependencies><build><sourceDirectory>src/main/java</sourceDirectory><testSourceDirectory>src/test/java</testSourceDirectory><plugins><!-- 编译插件 --><plugin><groupId>org.apache.maven.plugins</groupId><artifactId>maven-compiler-plugin</artifactId><version>3.5.1</version><configuration><source>1.8</source><target>1.8</target><!--<encoding>${project.build.sourceEncoding}</encoding>--></configuration></plugin><plugin><groupId>org.apache.maven.plugins</groupId><artifactId>maven-surefire-plugin</artifactId><version>2.18.1</version><configuration><useFile>false</useFile><disableXmlReport>true</disableXmlReport><includes><include>**/*Test.*</include><include>**/*Suite.*</include></includes></configuration></plugin><!-- 打jar包插件(会包含所有依赖) --><plugin><groupId>org.apache.maven.plugins</groupId><artifactId>maven-shade-plugin</artifactId><version>2.3</version><executions><execution><phase>package</phase><goals><goal>shade</goal></goals><configuration><filters><filter><artifact>*:*</artifact><excludes><!--zip -d learn_spark.jar META-INF/*.RSA META-INF/*.DSA META-INF/*.SF --><exclude>META-INF/*.SF</exclude><exclude>META-INF/*.DSA</exclude><exclude>META-INF/*.RSA</exclude></excludes></filter></filters><transformers><transformerimplementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer"><!-- 可以设置jar包的入口类(可选) --><!--<mainClass>com.aa.flink.StreamWordCount</mainClass>--></transformer></transformers></configuration></execution></executions></plugin></plugins></build>

Java

话不多说,直接上代码:

package com.zhiyong.day20221128;import org.apache.spark.api.java.function.ForeachFunction;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

import org.apache.spark.sql.SparkSession;import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

import java.text.SimpleDateFormat;

import java.util.Calendar;

import java.util.Date;

import java.util.LinkedList;

import java.util.Properties;/*** @program: zhiyong_study* @description: 使用foreach模拟UDTF函数* @author: zhiyong* @create: 2022-11-28 19:27**/

public class ForeachDemo {public static void main(String[] args) {SparkSession spark = SparkSession.builder().appName("使用foreach模拟UDTF函数").master("local[2]").getOrCreate();String url = "jdbc:mysql://192.168.88.100:3306/db_lzy";String table = "test_origin_20001128";Properties prop = new Properties();prop.put("driver", "com.mysql.cj.jdbc.Driver");prop.put("user", "root");prop.put("password", "123456");prop.put("url", url);prop.put("fetchSize", "1000");Dataset<Row> df1 = spark.read().jdbc(url, table, prop);df1.show(false);String[] exp = new String[5];exp[0] = "col1 as col1";exp[1] = "col2 as col2";exp[2] = "date_format(time1,'yyyy-MM-dd') as time1";exp[3] = "date_format(time2,'yyyy-MM-dd') as time2";exp[4] = "datediff(date_format(time2,'yyyy-MM-dd'),date_format(time1,'yyyy-MM-dd')) as offset";df1 = df1.selectExpr(exp);df1.show(false);df1.foreach((ForeachFunction<Row>) row -> {//生产环境应该用连接池Class.forName("com.mysql.cj.jdbc.Driver");Connection connection = DriverManager.getConnection(url, "root", "123456");connection.setAutoCommit(false);String col1 = row.get(0).toString();String col2 = row.get(1).toString();String time1 = row.get(2).toString();int offset = Integer.parseInt(row.get(4).toString());LinkedList<String> list = new LinkedList<>();list.add(time1);String sql = "insert into test_result_20001128 values(?,?,?)";PreparedStatement prepareStatement = connection.prepareStatement(sql);if (offset > 0) {SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd");Calendar calendar = Calendar.getInstance();Date date1 = simpleDateFormat.parse(time1);calendar.setTime(date1);for (;offset>0;offset--){calendar.add(calendar.DATE, 1);String parseTime = simpleDateFormat.format(calendar.getTime());list.add(parseTime);}}for (String time3 : list) {prepareStatement.setString(1,col1);prepareStatement.setString(2,col2);prepareStatement.setString(3,time3);prepareStatement.addBatch();}prepareStatement.executeBatch();connection.commit();if (null !=prepareStatement){prepareStatement.close();}if (null!=connection){connection.close();}});spark.close();}}

性能当然是灰常好,毕竟也没几条数据。

效果

读取到的原始DataFrame

+----+----+-------------------+-------------------+

|col1|col2|time1 |time2 |

+----+----+-------------------+-------------------+

|a1 |b1 |2022-01-01 00:00:00|2022-01-05 00:00:00|

|a2 |b2 |2022-01-28 00:00:00|2022-02-03 00:00:00|

|a3 |b3 |2022-02-20 00:00:00|2022-02-25 00:00:00|

|a4 |b4 |2022-03-29 00:00:00|2022-04-02 00:00:00|

+----+----+-------------------+-------------------+

可以成功读取到数据。

运算后的简化时间

+----+----+----------+----------+------+

|col1|col2|time1 |time2 |offset|

+----+----+----------+----------+------+

|a1 |b1 |2022-01-01|2022-01-05|4 |

|a2 |b2 |2022-01-28|2022-02-03|6 |

|a3 |b3 |2022-02-20|2022-02-25|5 |

|a4 |b4 |2022-03-29|2022-04-02|4 |

+----+----+----------+----------+------+

结果表

mysql> select * from test_result_20001128;

+------+------+------------+

| col1 | col2 | time3 |

+------+------+------------+

| a1 | b1 | 2022-01-01 |

| a1 | b1 | 2022-01-02 |

| a1 | b1 | 2022-01-03 |

| a1 | b1 | 2022-01-04 |

| a1 | b1 | 2022-01-05 |

| a2 | b2 | 2022-01-28 |

| a2 | b2 | 2022-01-29 |

| a2 | b2 | 2022-01-30 |

| a2 | b2 | 2022-01-31 |

| a2 | b2 | 2022-02-01 |

| a2 | b2 | 2022-02-02 |

| a2 | b2 | 2022-02-03 |

| a3 | b3 | 2022-02-20 |

| a3 | b3 | 2022-02-21 |

| a3 | b3 | 2022-02-22 |

| a3 | b3 | 2022-02-23 |

| a3 | b3 | 2022-02-24 |

| a3 | b3 | 2022-02-25 |

| a4 | b4 | 2022-03-29 |

| a4 | b4 | 2022-03-30 |

| a4 | b4 | 2022-03-31 |

| a4 | b4 | 2022-04-01 |

| a4 | b4 | 2022-04-02 |

+------+------+------------+

23 rows in set (0.00 sec)mysql>

结果当然是和预期一致。

纯SQL的做法

事实上,SQL Boy们当然是不会用Java或者Scala操作Spark的算子来搞这种骚操作。SQL能做的事情,Java一定是都能做。Java能做的事情,少数情况纯SQL方式,取点巧妙的方法也可以实现。

由于新字段的格式固定,且可以枚举遍历,撑死100年也不过5w条数据,即便是笛卡尔积也不会有啥异常严重的后果,更何况是只有几百、几千条的小体量。故可以采用如下方式,不会Java和Scala的SQL Boy们也容易领悟。

构建副表

create table test_another_20001128 (time3 varchar(200)

)

;

建个简单的表。

预填充数据

insert into test_another_20001128 values('2022-01-01');

insert into test_another_20001128 values('2022-01-02');

insert into test_another_20001128 values('2022-01-03');

insert into test_another_20001128 values('2022-01-04');

insert into test_another_20001128 values('2022-01-05');

insert into test_another_20001128 values('2022-01-06');

insert into test_another_20001128 values('2022-01-07');

insert into test_another_20001128 values('2022-01-08');

insert into test_another_20001128 values('2022-01-09');

insert into test_another_20001128 values('2022-01-10');

insert into test_another_20001128 values('2022-01-11');

insert into test_another_20001128 values('2022-01-12');

insert into test_another_20001128 values('2022-01-13');

insert into test_another_20001128 values('2022-01-14');

insert into test_another_20001128 values('2022-01-15');

insert into test_another_20001128 values('2022-01-16');

insert into test_another_20001128 values('2022-01-17');

insert into test_another_20001128 values('2022-01-18');

insert into test_another_20001128 values('2022-01-19');

insert into test_another_20001128 values('2022-01-20');

insert into test_another_20001128 values('2022-01-21');

insert into test_another_20001128 values('2022-01-22');

insert into test_another_20001128 values('2022-01-23');

insert into test_another_20001128 values('2022-01-24');

insert into test_another_20001128 values('2022-01-25');

insert into test_another_20001128 values('2022-01-26');

insert into test_another_20001128 values('2022-01-27');

insert into test_another_20001128 values('2022-01-28');

insert into test_another_20001128 values('2022-01-29');

insert into test_another_20001128 values('2022-01-30');

insert into test_another_20001128 values('2022-01-31');

insert into test_another_20001128 values('2022-02-01');

insert into test_another_20001128 values('2022-02-02');

insert into test_another_20001128 values('2022-02-03');

insert into test_another_20001128 values('2022-02-04');

insert into test_another_20001128 values('2022-02-05');

insert into test_another_20001128 values('2022-02-06');

insert into test_another_20001128 values('2022-02-07');

insert into test_another_20001128 values('2022-02-08');

insert into test_another_20001128 values('2022-02-09');

insert into test_another_20001128 values('2022-02-10');

insert into test_another_20001128 values('2022-02-11');

insert into test_another_20001128 values('2022-02-12');

insert into test_another_20001128 values('2022-02-13');

insert into test_another_20001128 values('2022-02-14');

insert into test_another_20001128 values('2022-02-15');

insert into test_another_20001128 values('2022-02-16');

insert into test_another_20001128 values('2022-02-17');

insert into test_another_20001128 values('2022-02-18');

insert into test_another_20001128 values('2022-02-19');

insert into test_another_20001128 values('2022-02-20');

insert into test_another_20001128 values('2022-02-21');

insert into test_another_20001128 values('2022-02-22');

insert into test_another_20001128 values('2022-02-23');

insert into test_another_20001128 values('2022-02-24');

insert into test_another_20001128 values('2022-02-25');

insert into test_another_20001128 values('2022-02-26');

insert into test_another_20001128 values('2022-02-27');

insert into test_another_20001128 values('2022-02-28');

insert into test_another_20001128 values('2022-03-01');

insert into test_another_20001128 values('2022-03-02');

insert into test_another_20001128 values('2022-03-03');

insert into test_another_20001128 values('2022-03-04');

insert into test_another_20001128 values('2022-03-05');

insert into test_another_20001128 values('2022-03-06');

insert into test_another_20001128 values('2022-03-07');

insert into test_another_20001128 values('2022-03-08');

insert into test_another_20001128 values('2022-03-09');

insert into test_another_20001128 values('2022-03-10');

insert into test_another_20001128 values('2022-03-11');

insert into test_another_20001128 values('2022-03-12');

insert into test_another_20001128 values('2022-03-13');

insert into test_another_20001128 values('2022-03-14');

insert into test_another_20001128 values('2022-03-15');

insert into test_another_20001128 values('2022-03-16');

insert into test_another_20001128 values('2022-03-17');

insert into test_another_20001128 values('2022-03-18');

insert into test_another_20001128 values('2022-03-19');

insert into test_another_20001128 values('2022-03-20');

insert into test_another_20001128 values('2022-03-21');

insert into test_another_20001128 values('2022-03-22');

insert into test_another_20001128 values('2022-03-23');

insert into test_another_20001128 values('2022-03-24');

insert into test_another_20001128 values('2022-03-25');

insert into test_another_20001128 values('2022-03-26');

insert into test_another_20001128 values('2022-03-27');

insert into test_another_20001128 values('2022-03-28');

insert into test_another_20001128 values('2022-03-29');

insert into test_another_20001128 values('2022-03-30');

insert into test_another_20001128 values('2022-03-31');

insert into test_another_20001128 values('2022-04-01');

insert into test_another_20001128 values('2022-04-02');

insert into test_another_20001128 values('2022-04-03');

insert into test_another_20001128 values('2022-04-04');

insert into test_another_20001128 values('2022-04-05');

insert into test_another_20001128 values('2022-04-06');

insert into test_another_20001128 values('2022-04-07');

insert into test_another_20001128 values('2022-04-08');

insert into test_another_20001128 values('2022-04-09');

insert into test_another_20001128 values('2022-04-10');

insert into test_another_20001128 values('2022-04-11');

insert into test_another_20001128 values('2022-04-12');

insert into test_another_20001128 values('2022-04-13');

insert into test_another_20001128 values('2022-04-14');

insert into test_another_20001128 values('2022-04-15');

insert into test_another_20001128 values('2022-04-16');

insert into test_another_20001128 values('2022-04-17');

insert into test_another_20001128 values('2022-04-18');

insert into test_another_20001128 values('2022-04-19');

insert into test_another_20001128 values('2022-04-20');

insert into test_another_20001128 values('2022-04-21');

insert into test_another_20001128 values('2022-04-22');

insert into test_another_20001128 values('2022-04-23');

insert into test_another_20001128 values('2022-04-24');

insert into test_another_20001128 values('2022-04-25');

insert into test_another_20001128 values('2022-04-26');

insert into test_another_20001128 values('2022-04-27');

insert into test_another_20001128 values('2022-04-28');

insert into test_another_20001128 values('2022-04-29');

insert into test_another_20001128 values('2022-04-30');

预先把用得到的数据准备好。

构建笛卡尔积

mysql> truncate table test_result_20001128;

Query OK, 0 rows affected (0.01 sec)mysql> select * from test_result_20001128;

Empty set (0.00 sec)mysql> insert into test_result_20001128-> select-> t1.col1,-> t1.col2,-> t2.time3-> from-> test_origin_20001128 t1,-> test_another_20001128 t2-> where-> t1.time1<=t2.time3-> and t1.time2>=t2.time3-> ;

Query OK, 23 rows affected (0.00 sec)

Records: 23 Duplicates: 0 Warnings: 0mysql> select * from test_result_20001128;

+------+------+------------+

| col1 | col2 | time3 |

+------+------+------------+

| a1 | b1 | 2022-01-01 |

| a1 | b1 | 2022-01-02 |

| a1 | b1 | 2022-01-03 |

| a1 | b1 | 2022-01-04 |

| a1 | b1 | 2022-01-05 |

| a2 | b2 | 2022-01-28 |

| a2 | b2 | 2022-01-29 |

| a2 | b2 | 2022-01-30 |

| a2 | b2 | 2022-01-31 |

| a2 | b2 | 2022-02-01 |

| a2 | b2 | 2022-02-02 |

| a2 | b2 | 2022-02-03 |

| a3 | b3 | 2022-02-20 |

| a3 | b3 | 2022-02-21 |

| a3 | b3 | 2022-02-22 |

| a3 | b3 | 2022-02-23 |

| a3 | b3 | 2022-02-24 |

| a3 | b3 | 2022-02-25 |

| a4 | b4 | 2022-03-29 |

| a4 | b4 | 2022-03-30 |

| a4 | b4 | 2022-03-31 |

| a4 | b4 | 2022-04-01 |

| a4 | b4 | 2022-04-02 |

+------+------+------------+

23 rows in set (0.00 sec)mysql>显然结果是一致的。

RDBMS的自动类型转换做的相当到位,不用像Hive那样各种时间戳还需要显式地手动写SQL函数转换才能做比较运算。

UDTF

异常

必须要enableHiveSupport():

//必须enableHiveSupport()

String register = "create temporary function udtf_demo as 'com.zhiyong.day20221128.UdtfDemo'";

spark.sql(register);

String sql = "select col1,col2,udtf_demo(time1,time2) as time3 from tb_tmp1_day20221128";

spark.sql(sql).show(100,false);

否则在Spark3.3.0这种新版本会报错:

Exception in thread "main" org.apache.spark.sql.AnalysisException: No handler for UDAF 'com.zhiyong.day20221128.ZhiyongUdtfFunction'. Use sparkSession.udf.register(...) instead.; line 1 pos 17at org.apache.spark.sql.errors.QueryCompilationErrors$.noHandlerForUDAFError(QueryCompilationErrors.scala:786)at org.apache.spark.sql.internal.SparkUDFExpressionBuilder.makeExpression(BaseSessionStateBuilder.scala:416)at org.apache.spark.sql.catalyst.catalog.SessionCatalog.$anonfun$makeFunctionBuilder$1(SessionCatalog.scala:1456)at org.apache.spark.sql.catalyst.analysis.SimpleFunctionRegistryBase.lookupFunction(FunctionRegistry.scala:239)at org.apache.spark.sql.catalyst.analysis.SimpleFunctionRegistryBase.lookupFunction$(FunctionRegistry.scala:233)at org.apache.spark.sql.catalyst.analysis.SimpleFunctionRegistry.lookupFunction(FunctionRegistry.scala:305)at org.apache.spark.sql.catalyst.catalog.SessionCatalog.$anonfun$resolveBuiltinOrTempFunctionInternal$1(SessionCatalog.scala:1613)at org.apache.spark.sql.catalyst.catalog.SessionCatalog.lookupTempFuncWithViewContext(SessionCatalog.scala:1635)at org.apache.spark.sql.catalyst.catalog.SessionCatalog.resolveBuiltinOrTempFunctionInternal(SessionCatalog.scala:1613)at org.apache.spark.sql.catalyst.catalog.SessionCatalog.resolveBuiltinOrTempFunction(SessionCatalog.scala:1590)at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveFunctions$.org$apache$spark$sql$catalyst$analysis$Analyzer$ResolveFunctions$$resolveBuiltinOrTempFunction(Analyzer.scala:2160)at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveFunctions$$anonfun$apply$25$$anonfun$applyOrElse$103.$anonfun$applyOrElse$108(Analyzer.scala:2119)at org.apache.spark.sql.catalyst.analysis.package$.withPosition(package.scala:60)at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveFunctions$$anonfun$apply$25$$anonfun$applyOrElse$103.applyOrElse(Analyzer.scala:2119)at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveFunctions$$anonfun$apply$25$$anonfun$applyOrElse$103.applyOrElse(Analyzer.scala:2093)at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$transformDownWithPruning$1(TreeNode.scala:584)at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:176)at org.apache.spark.sql.catalyst.trees.TreeNode.transformDownWithPruning(TreeNode.scala:584)at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$transformDownWithPruning$3(TreeNode.scala:589)at org.apache.spark.sql.catalyst.trees.UnaryLike.mapChildren(TreeNode.scala:1228)at org.apache.spark.sql.catalyst.trees.UnaryLike.mapChildren$(TreeNode.scala:1227)at org.apache.spark.sql.catalyst.expressions.UnaryExpression.mapChildren(Expression.scala:513)at org.apache.spark.sql.catalyst.trees.TreeNode.transformDownWithPruning(TreeNode.scala:589)at org.apache.spark.sql.catalyst.plans.QueryPlan.$anonfun$transformExpressionsDownWithPruning$1(QueryPlan.scala:159)at org.apache.spark.sql.catalyst.plans.QueryPlan.$anonfun$mapExpressions$1(QueryPlan.scala:200)at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:176)at org.apache.spark.sql.catalyst.plans.QueryPlan.transformExpression$1(QueryPlan.scala:200)at org.apache.spark.sql.catalyst.plans.QueryPlan.recursiveTransform$1(QueryPlan.scala:211)at org.apache.spark.sql.catalyst.plans.QueryPlan.$anonfun$mapExpressions$3(QueryPlan.scala:216)at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:285)at scala.collection.immutable.List.foreach(List.scala:431)at scala.collection.TraversableLike.map(TraversableLike.scala:285)at scala.collection.TraversableLike.map$(TraversableLike.scala:278)at scala.collection.immutable.List.map(List.scala:305)at org.apache.spark.sql.catalyst.plans.QueryPlan.recursiveTransform$1(QueryPlan.scala:216)at org.apache.spark.sql.catalyst.plans.QueryPlan.$anonfun$mapExpressions$4(QueryPlan.scala:221)at org.apache.spark.sql.catalyst.trees.TreeNode.mapProductIterator(TreeNode.scala:427)at org.apache.spark.sql.catalyst.plans.QueryPlan.mapExpressions(QueryPlan.scala:221)at org.apache.spark.sql.catalyst.plans.QueryPlan.transformExpressionsDownWithPruning(QueryPlan.scala:159)at org.apache.spark.sql.catalyst.plans.QueryPlan.transformExpressionsWithPruning(QueryPlan.scala:130)at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveFunctions$$anonfun$apply$25.applyOrElse(Analyzer.scala:2093)at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveFunctions$$anonfun$apply$25.applyOrElse(Analyzer.scala:2072)at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.$anonfun$resolveOperatorsUpWithPruning$3(AnalysisHelper.scala:138)at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:176)at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.$anonfun$resolveOperatorsUpWithPruning$1(AnalysisHelper.scala:138)at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper$.allowInvokingTransformsInAnalyzer(AnalysisHelper.scala:323)at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.resolveOperatorsUpWithPruning(AnalysisHelper.scala:134)at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.resolveOperatorsUpWithPruning$(AnalysisHelper.scala:130)at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.resolveOperatorsUpWithPruning(LogicalPlan.scala:30)at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveFunctions$.apply(Analyzer.scala:2072)at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveFunctions$.apply(Analyzer.scala:2068)at org.apache.spark.sql.catalyst.rules.RuleExecutor.$anonfun$execute$2(RuleExecutor.scala:211)at scala.collection.LinearSeqOptimized.foldLeft(LinearSeqOptimized.scala:126)at scala.collection.LinearSeqOptimized.foldLeft$(LinearSeqOptimized.scala:122)at scala.collection.immutable.List.foldLeft(List.scala:91)at org.apache.spark.sql.catalyst.rules.RuleExecutor.$anonfun$execute$1(RuleExecutor.scala:208)at org.apache.spark.sql.catalyst.rules.RuleExecutor.$anonfun$execute$1$adapted(RuleExecutor.scala:200)at scala.collection.immutable.List.foreach(List.scala:431)at org.apache.spark.sql.catalyst.rules.RuleExecutor.execute(RuleExecutor.scala:200)at org.apache.spark.sql.catalyst.analysis.Analyzer.org$apache$spark$sql$catalyst$analysis$Analyzer$$executeSameContext(Analyzer.scala:227)at org.apache.spark.sql.catalyst.analysis.Analyzer.$anonfun$execute$1(Analyzer.scala:223)at org.apache.spark.sql.catalyst.analysis.AnalysisContext$.withNewAnalysisContext(Analyzer.scala:172)at org.apache.spark.sql.catalyst.analysis.Analyzer.execute(Analyzer.scala:223)at org.apache.spark.sql.catalyst.analysis.Analyzer.execute(Analyzer.scala:187)at org.apache.spark.sql.catalyst.rules.RuleExecutor.$anonfun$executeAndTrack$1(RuleExecutor.scala:179)at org.apache.spark.sql.catalyst.QueryPlanningTracker$.withTracker(QueryPlanningTracker.scala:88)at org.apache.spark.sql.catalyst.rules.RuleExecutor.executeAndTrack(RuleExecutor.scala:179)at org.apache.spark.sql.catalyst.analysis.Analyzer.$anonfun$executeAndCheck$1(Analyzer.scala:208)at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper$.markInAnalyzer(AnalysisHelper.scala:330)at org.apache.spark.sql.catalyst.analysis.Analyzer.executeAndCheck(Analyzer.scala:207)at org.apache.spark.sql.execution.QueryExecution.$anonfun$analyzed$1(QueryExecution.scala:76)at org.apache.spark.sql.catalyst.QueryPlanningTracker.measurePhase(QueryPlanningTracker.scala:111)at org.apache.spark.sql.execution.QueryExecution.$anonfun$executePhase$2(QueryExecution.scala:185)at org.apache.spark.sql.execution.QueryExecution$.withInternalError(QueryExecution.scala:510)at org.apache.spark.sql.execution.QueryExecution.$anonfun$executePhase$1(QueryExecution.scala:185)at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:779)at org.apache.spark.sql.execution.QueryExecution.executePhase(QueryExecution.scala:184)at org.apache.spark.sql.execution.QueryExecution.analyzed$lzycompute(QueryExecution.scala:76)at org.apache.spark.sql.execution.QueryExecution.analyzed(QueryExecution.scala:74)at org.apache.spark.sql.execution.QueryExecution.assertAnalyzed(QueryExecution.scala:66)at org.apache.spark.sql.Dataset$.$anonfun$ofRows$2(Dataset.scala:99)at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:779)at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:97)at org.apache.spark.sql.SparkSession.$anonfun$sql$1(SparkSession.scala:622)at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:779)at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:617)at com.zhiyong.day20221128.UdtfDemo.main(UdtfDemo.java:63)

22/11/28 23:39:33 INFO SparkContext: Invoking stop() from shutdown hook

22/11/28 23:39:33 INFO SparkUI: Stopped Spark web UI at http://DESKTOP-VRV0NDO:4040

22/11/28 23:39:33 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

22/11/28 23:39:33 INFO MemoryStore: MemoryStore cleared

22/11/28 23:39:33 INFO BlockManager: BlockManager stopped

22/11/28 23:39:33 INFO BlockManagerMaster: BlockManagerMaster stopped

22/11/28 23:39:33 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

22/11/28 23:39:33 INFO SparkContext: Successfully stopped SparkContext

22/11/28 23:39:33 INFO ShutdownHookManager: Shutdown hook called

22/11/28 23:39:33 INFO ShutdownHookManager: Deleting directory C:\Users\zhiyong\AppData\Local\Temp\spark-6a9f7535-0ebd-4d9e-b04c-97edd5c96d31Process finished with exit code 1需要使用提示的函数来注册UDTF。但是实际上是Hive的Catalog没有启用,默认的SessionCatalog当然是有问题的,它并不能解析到Hive的方法。

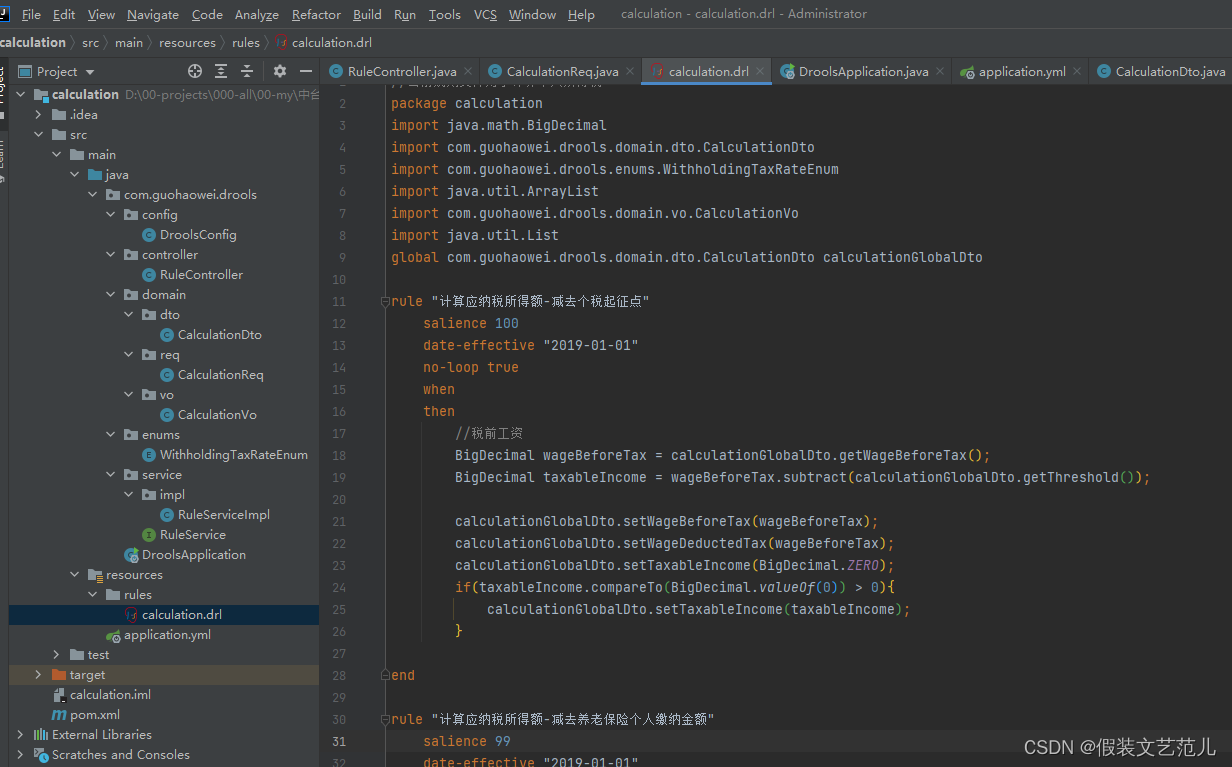

UDTF的Java类

package com.zhiyong.day20221128;import org.apache.hadoop.hive.ql.exec.UDFArgumentException;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;

import org.apache.hadoop.hive.serde2.objectinspector.PrimitiveObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.util.ArrayList;

import java.util.Calendar;

import java.util.Date;/*** @program: zhiyong_study* @description: UDTF实现类* @author: zhiyong* @create: 2022-11-28 23:24**/

public class ZhiyongUdtfFunction extends GenericUDTF {@Overridepublic StructObjectInspector initialize(ObjectInspector[] argOIs) throws UDFArgumentException {ArrayList<String> name = new ArrayList<>();ArrayList<ObjectInspector> oi = new ArrayList<>();name.add("time");oi.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);//return super.initialize(argOIs);return ObjectInspectorFactory.getStandardStructObjectInspector(name,oi);}@Overridepublic void process(Object[] args) throws HiveException {if (2!= args.length){throw new UDFArgumentException("参数个数不对,需要2个,实际" + args.length + "个");}String time1 = args[0].toString();forward(time1);int offset = Integer.parseInt(args[1].toString());if (offset > 0) {SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd");Calendar calendar = Calendar.getInstance();Date date1 = null;try {date1 = simpleDateFormat.parse(time1);} catch (ParseException e) {e.printStackTrace();}//System.out.println("date1 = " + date1);//date1 = Tue Mar 29 00:00:00 CST 2022calendar.setTime(date1);for (;offset>0;offset--){calendar.add(calendar.DATE, 1);//System.out.println("time = " + time);String parseTime = simpleDateFormat.format(calendar.getTime());forward(parseTime);}}}@Overridepublic void close() throws HiveException {}

}这个本身就是继承了Hive的类,所以写法与Hive一致。

UDTF的测试Demo类

package com.zhiyong.day20221128;import org.apache.hadoop.hive.ql.exec.UDFArgumentException;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;

import org.apache.spark.sql.*;import java.util.Properties;/*** @program: zhiyong_study* @description: 使用UDTF函数打散数据* @author: zhiyong* @create: 2022-11-28 22:29**/

public class UdtfDemo {public static void main(String[] args) {SparkSession spark = SparkSession.builder().appName("使用UDTF函数打散数据").master("local[2]").enableHiveSupport().getOrCreate();String url = "jdbc:mysql://192.168.88.100:3306/db_lzy";String table = "test_origin_20001128";Properties prop = new Properties();prop.put("driver", "com.mysql.cj.jdbc.Driver");prop.put("user", "root");prop.put("password", "123456");prop.put("url", url);prop.put("fetchSize", "1000");Dataset<Row> df1 = spark.read().jdbc(url, table, prop);df1.show(false);String[] exp = new String[5];exp[0] = "col1 as col1";exp[1] = "col2 as col2";exp[2] = "date_format(time1,'yyyy-MM-dd') as time1";exp[3] = "date_format(time2,'yyyy-MM-dd') as time2";exp[4] = "datediff(date_format(time2,'yyyy-MM-dd'),date_format(time1,'yyyy-MM-dd')) as offset";df1 = df1.selectExpr(exp);df1.show(false);df1.registerTempTable("tb_tmp1_day20221128");//必须enableHiveSupport()String register = "create temporary function udtf_demo as 'com.zhiyong.day20221128.ZhiyongUdtfFunction'";spark.sql(register);//SQLContext sqlContext = spark.sqlContext();//sqlContext.sql(register);//HiveContext hiveContext = new HiveContext(spark);//hiveContext.sql(register);//UdtfDemo udtfDemo = new UdtfDemo();//spark.udf().register("udtf_demo",udtfDemo, DataTypes.StringType);String sql = "select col1,col2,udtf_demo(time1,offset) as time3 from tb_tmp1_day20221128";Dataset<Row> df2 = spark.sql(sql);df2.show(100,false);df2.write().mode(SaveMode.Overwrite).format("jdbc").option("url", url).option("dbtable", "db_lzy.test_result_20001128").option("user", "root").option("password", "123456").save();}

}由于Spark本身不支持注册UDTF【只能注册UDAF、UDF】,所以需要使用Hive的Context来注册函数。

Spark和Hive虽然都是有SQL,但是Hive是用Calcite解析的,Spark是自己写的Catalyst,语法上有区别。例如此处Spark SQL可以直接这么使用UDTF,但是Hive一定要这样:

with temp1 as (

selectcol1 as col1,col2 as col2,date_format(time1,'yyyy-MM-dd') as time1,date_format(time2,'yyyy-MM-dd') as time2,datediff(date_format(time2,'yyyy-MM-dd'),date_format(time1,'yyyy-MM-dd')) as offset

)

selectcol1,col2,time3

fromtb_tmp1_day20221128 t1lateral view udtf_demo(time1,offset) temp3 as time3

;

需要将新的打散列当一个临时表【类似笛卡尔积】来用。

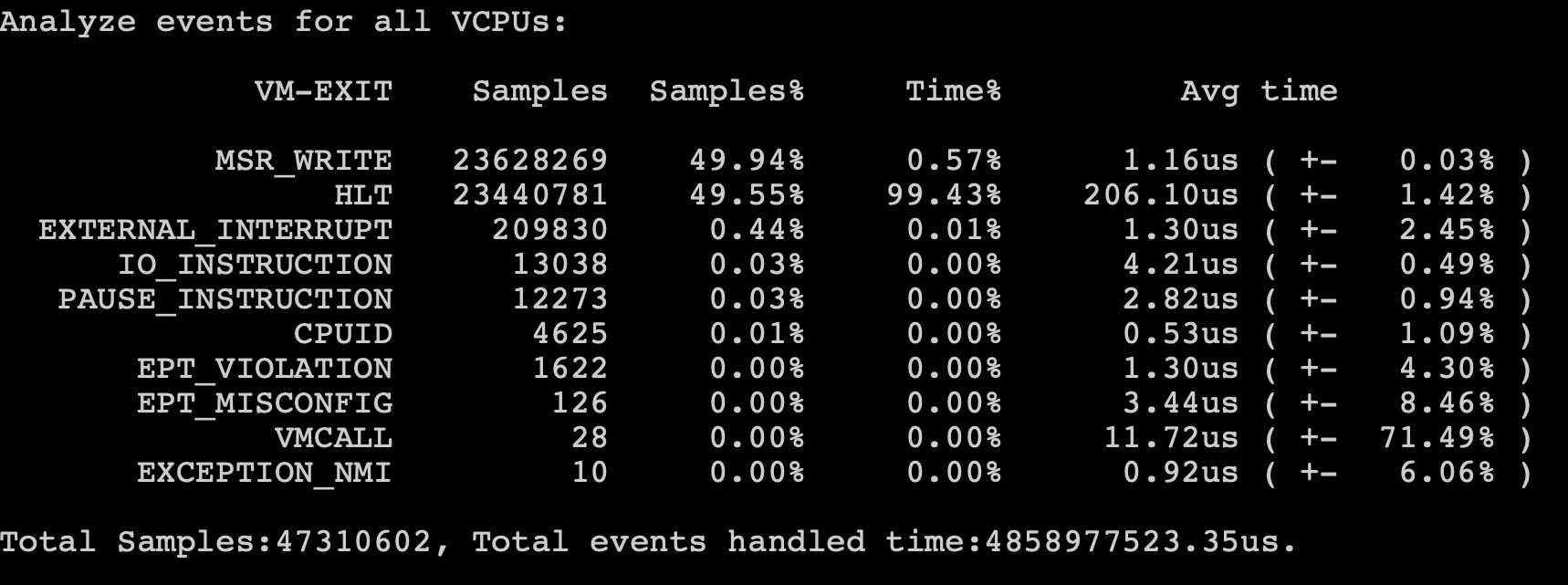

效果展示

22/11/29 00:03:52 INFO CodeGenerator: Code generated in 20.7807 ms

22/11/29 00:03:52 INFO SparkContext: Starting job: show at UdtfDemo.java:64

22/11/29 00:03:52 INFO DAGScheduler: Got job 2 (show at UdtfDemo.java:64) with 1 output partitions

22/11/29 00:03:52 INFO DAGScheduler: Final stage: ResultStage 2 (show at UdtfDemo.java:64)

22/11/29 00:03:52 INFO DAGScheduler: Parents of final stage: List()

22/11/29 00:03:52 INFO DAGScheduler: Missing parents: List()

22/11/29 00:03:52 INFO DAGScheduler: Submitting ResultStage 2 (MapPartitionsRDD[9] at show at UdtfDemo.java:64), which has no missing parents

22/11/29 00:03:52 INFO SerializationUtilities: Serializing ZhiyongUdtfFunction using kryo

22/11/29 00:03:52 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 25.8 KiB, free 15.8 GiB)

22/11/29 00:03:52 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 12.5 KiB, free 15.8 GiB)

22/11/29 00:03:52 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on DESKTOP-VRV0NDO:50132 (size: 12.5 KiB, free: 15.8 GiB)

22/11/29 00:03:52 INFO SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:1513

22/11/29 00:03:52 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 2 (MapPartitionsRDD[9] at show at UdtfDemo.java:64) (first 15 tasks are for partitions Vector(0))

22/11/29 00:03:52 INFO TaskSchedulerImpl: Adding task set 2.0 with 1 tasks resource profile 0

22/11/29 00:03:52 INFO TaskSetManager: Starting task 0.0 in stage 2.0 (TID 2) (DESKTOP-VRV0NDO, executor driver, partition 0, PROCESS_LOCAL, 4299 bytes) taskResourceAssignments Map()

22/11/29 00:03:52 INFO Executor: Running task 0.0 in stage 2.0 (TID 2)

22/11/29 00:03:52 INFO SerializationUtilities: Deserializing ZhiyongUdtfFunction using kryo

22/11/29 00:03:52 INFO CodeGenerator: Code generated in 10.9602 ms

22/11/29 00:03:52 INFO CodeGenerator: Code generated in 9.3454 ms

22/11/29 00:03:52 WARN StandardStructObjectInspector: Invalid type for struct class java.lang.String

22/11/29 00:03:52 WARN StandardStructObjectInspector: ignoring similar errors.

22/11/29 00:03:52 INFO JDBCRDD: closed connection

22/11/29 00:03:52 INFO Executor: Finished task 0.0 in stage 2.0 (TID 2). 1717 bytes result sent to driver

22/11/29 00:03:52 INFO TaskSetManager: Finished task 0.0 in stage 2.0 (TID 2) in 129 ms on DESKTOP-VRV0NDO (executor driver) (1/1)

22/11/29 00:03:52 INFO TaskSchedulerImpl: Removed TaskSet 2.0, whose tasks have all completed, from pool

22/11/29 00:03:52 INFO DAGScheduler: ResultStage 2 (show at UdtfDemo.java:64) finished in 0.388 s

22/11/29 00:03:52 INFO DAGScheduler: Job 2 is finished. Cancelling potential speculative or zombie tasks for this job

22/11/29 00:03:52 INFO TaskSchedulerImpl: Killing all running tasks in stage 2: Stage finished

22/11/29 00:03:52 INFO DAGScheduler: Job 2 finished: show at UdtfDemo.java:64, took 0.394444 s

22/11/29 00:03:52 INFO CodeGenerator: Code generated in 18.709 ms

+----+----+----------+

|col1|col2|time3 |

+----+----+----------+

| a1 |b1 |2022-01-01|

|a1 |b1 |2022-01-02|

|a1 |b1 |2022-01-03|

|a1 |b1 |2022-01-04|

|a1 |b1 |2022-01-05|

|a2 |b2 |2022-01-28|

|a2 |b2 |2022-01-29|

|a2 |b2 |2022-01-30|

|a2 |b2 |2022-01-31|

|a2 |b2 |2022-02-01|

|a2 |b2 |2022-02-02|

|a2 |b2 |2022-02-03|

|a3 |b3 |2022-02-20|

|a3 |b3 |2022-02-21|

|a3 |b3 |2022-02-22|

|a3 |b3 |2022-02-23|

|a3 |b3 |2022-02-24|

|a3 |b3 |2022-02-25|

|a4 |b4 |2022-03-29|

|a4 |b4 |2022-03-30|

|a4 |b4 |2022-03-31|

|a4 |b4 |2022-04-01|

|a4 |b4 |2022-04-02|

+----+----+----------+22/11/29 00:03:52 INFO SparkContext: Invoking stop() from shutdown hook

22/11/29 00:03:52 INFO SparkUI: Stopped Spark web UI at http://DESKTOP-VRV0NDO:4040

22/11/29 00:03:52 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

22/11/29 00:03:52 INFO MemoryStore: MemoryStore cleared

22/11/29 00:03:52 INFO BlockManager: BlockManager stopped

22/11/29 00:03:52 INFO BlockManagerMaster: BlockManagerMaster stopped

22/11/29 00:03:52 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

22/11/29 00:03:52 INFO SparkContext: Successfully stopped SparkContext

22/11/29 00:03:52 INFO ShutdownHookManager: Shutdown hook called

22/11/29 00:03:52 INFO ShutdownHookManager: Deleting directory C:\Users\zhiyong\AppData\Local\Temp\spark-dd1ef2ab-0562-4e85-8f1c-f172a7bd07ddProcess finished with exit code 0不管是UDTF还是foreach,由于算法相似,结果当然也是一致的。

尾言

Java总归还是比SQL强大太多了!!!只会写几句SQL的话,距离真正意义的大数据开发还有很长的路要走!!!

转载请注明出处:https://lizhiyong.blog.csdn.net/article/details/128090026