Classification of classic software testing techniques:

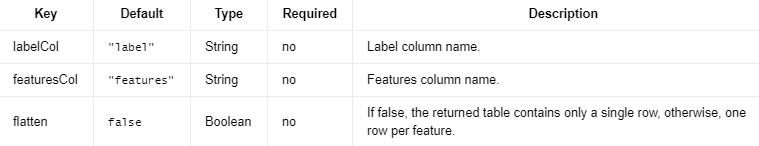

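A testing technique is a set of related procedures for carrying out testing successfully. Techniques can be classified in many ways, and Table 2-1 shows one of them. The table lists popular testing techniques and classifies each one according to the categories discussed earlier: manual testing, automated testing, static testing, dynamic testing, functional (black-box) testing, or structural (white-box) testing.

Table 2-2 briefly describes each of these testing techniques.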

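Table 2-1 Classification of testing techniques

| Testing technique | Manual | Automated | Static | Dynamic | Functional | Structural |
| --- | --- | --- | --- | --- | --- | --- |
| Acceptance testing | X | X | X | X | | |
| Ad hoc testing | X | X | | | | |
| Alpha testing | X | X | X | | | |
| Basis path testing | X | X | X | | | |
| Beta testing | X | X | X | | | |
| Black-box testing | X | X | X | | | |
| Bottom-up testing | X | X | X | | | |
| Boundary value testing | X | X | X | | | |
| Branch coverage testing | X | X | X | | | |
| Branch/condition coverage testing | X | X | X | | | |
| Cause-effect graphing | X | X | X | | | |
| Comparison testing | X | X | X | X | X | |
| Compatibility testing | X | X | X | | | |
| Condition coverage testing | X | X | X | | | |
| CRUD testing | X | X | X | | | |
| Database testing | X | X | X | | | |
| Decision tables | X | X | X | | | |
| Desk checking | X | X | X | | | |
| End-to-end testing | X | X | X | | | |
| Equivalence partitioning | X | X | | | | |
| Exception testing | X | X | X | | | |
| Exploratory testing | X | X | X | | | |
| Free-form testing | X | X | X | | | |
| Gray-box testing | X | X | X | X | | |
| Histograms | X | X | | | | |
| Incremental integration testing | X | X | X | X | | |
| Code inspection | X | X | X | X | | |
| Integration testing | X | X | X | X | | |
| JAD | X | X | X | | | |
| Load testing | X | X | X | X | | |
| Mutation testing | X | X | X | X | | |
| Orthogonal array testing | X | X | X | | | |
| Pareto analysis | X | X | | | | |
| Performance testing | X | X | X | X | X | |
| Positive and negative testing | X | X | X | | | |
| Prior defect history testing | X | X | X | | | |
| Prototyping | X | X | X | | | |
| Random testing | X | X | X | | | |
| Range testing | X | X | X | | | |
| Recovery testing | X | X | X | X | | |
| Regression testing | X | X | | | | |
| Risk-based testing | X | X | X | | | |
| Run charts | X | X | X | | | |
| Sandwich testing | X | X | X | | | |
| Sanity testing | X | X | X | X | | |
| Security testing | X | X | X | | | |
| State transition testing | X | X | X | | | |
| Statement coverage testing | X | X | X | | | |
| Statistical profile testing | X | X | X | | | |
| Stress testing | X | X | X | | | |
| Structured walkthroughs | X | X | X | X | | |
| Syntax testing | X | X | X | X | | |
| System testing | X | X | X | X | | |
| Table testing | X | X | X | | | |
| Thread testing | X | X | X | | | |
| Top-down testing | X | X | X | X | | |
| Unit testing | X | X | X | X | | |
| Usability testing | X | X | X | X | | |
| User acceptance testing | X | X | X | X | | |
| White-box testing | X | X | X | | | |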

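Table 2-2 Descriptions of testing techniques

| Testing technique | Brief description |
| --- | --- |
| Acceptance testing | Final testing based on the end user's or customer's specifications, or based on use by end users or customers over a period of time |
| Ad hoc testing | Similar to exploratory testing, but usually implies that the testers already understand the software well before testing it |
| Alpha testing | Testing of an application when development is nearing completion; minor design changes may still be made as a result. Typically performed by end users or others, not by developers or testers |
| Basis path testing | Testing based on the flows and paths of a program or system |
| Beta testing | Testing of an application when development and testing are essentially complete; remaining bugs and problems need to be found here before the final release. Typically performed by end users or others, not by developers or testers |
| Black-box testing | Test cases are derived from the functionality of the system |
| Bottom-up testing | Integration testing of modules or programs starting from the bottom |
| Boundary value testing | Test cases are generated from the boundary values of equivalence classes |
| Branch coverage testing | Verifies that every branch of each decision in the program evaluates to both true and false at least once |
| Branch/condition coverage testing | Verifies every combination of possible condition values in each decision at least once |
| Cause-effect graphing | Maps multiple simultaneous, interacting inputs in order to identify decision conditions |
| Comparison testing | Compares the product's strengths and weaknesses against competing products |
| Compatibility testing | Tests how compatible the software is with a particular hardware, software, operating system, or network environment |
| Condition coverage testing | Verifies that each condition in every decision takes every possible value at least once |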
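The three coverage criteria above are easy to confuse, so a small illustration may help. The following is a minimal sketch, not taken from the source: `discount_applies` and the helper functions are hypothetical names, and the helpers judge coverage from the input values alone (they do not instrument the code and they ignore short-circuit evaluation).

```python
from itertools import product

def discount_applies(is_member: bool, has_coupon: bool) -> bool:
    """Hypothetical unit under test: one decision made of two conditions."""
    if is_member and has_coupon:
        return True
    return False

def branch_covered(cases):
    # Branch coverage: the decision as a whole is both true and false.
    return {discount_applies(a, b) for a, b in cases} == {True, False}

def condition_covered(cases):
    # Condition coverage: each individual condition is both true and false.
    return all({case[i] for case in cases} == {True, False} for i in range(2))

def all_combinations_covered(cases):
    # Every combination of condition values appears at least once.
    return set(cases) == set(product([True, False], repeat=2))

condition_only = [(True, False), (False, True)]  # decision is never true
branch_only = [(True, True), (False, True)]      # has_coupon is never false
exhaustive = list(product([True, False], repeat=2))

for name, cases in [("condition_only", condition_only),
                    ("branch_only", branch_only),
                    ("exhaustive", exhaustive)]:
    print(name, branch_covered(cases), condition_covered(cases),
          all_combinations_covered(cases))
```

The first two test sets show that condition coverage and branch coverage do not imply each other; only the exhaustive set satisfies all three checks.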

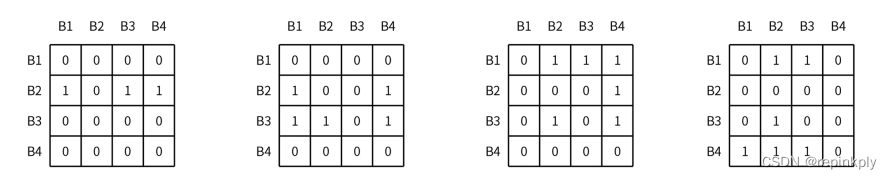

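| Testing technique | Brief description |
| --- | --- |
| CRUD testing | Builds a CRUD matrix and tests the creation, retrieval, update, and deletion of every object |
| Database testing | Checks the integrity of database field values |
| Decision tables | A table showing the decision criteria and the corresponding actions |
| Desk checking | The developer reviews the code for correctness |
| End-to-end testing | Similar to system testing; the "macro" end of the testing scale. Tests the complete application environment in a situation that mimics real-world use, such as interacting with a database, using network communications, or interacting with other hardware, applications, or systems where appropriate |
| Equivalence partitioning | Each input condition is partitioned into two or more groups. Test cases are made up of representatives of the valid and invalid classes |
| Exception testing | Identifies error messages and exception-handling processes, together with the conditions that trigger them |
| Exploratory testing | Often regarded as creative, informal software testing that is not based on formal test plans or test cases; testers may be learning the software while they test it |
| Free-form testing | Test cases are defined by intuition, chosen at random or through brainstorming |
| Gray-box testing | A combination of white-box and black-box testing that takes advantage of the strengths of both |
| Histograms | A graphical representation of measured values, organized by frequency of occurrence, used to locate hot spots |
| Incremental integration testing | Continuous testing of an application as new functionality is added; requires that the different aspects of the application's functionality be independent enough to work separately before all parts of the program are complete, or that test drivers be developed as needed; performed by programmers or testers |
| Code inspection | A formal peer review of code that uses checklists, entry criteria, and exit criteria |
| Integration testing | Testing of the combined parts of an application to determine whether they function together correctly. The parts can be code modules, individual applications, or client/server applications on a network. This type of testing is especially relevant to client/server and distributed systems |
| JAD | Users and developers sit together and jointly design the system in easy-to-follow sessions |
| Load testing | Testing an application under heavy load, for example testing a website under a range of loads to determine the point at which the system's response time degrades or the system fails |
| Mutation testing | A method for determining whether a set of test data or test cases is useful: various code changes ("bugs") are deliberately introduced, and the original test data or cases are rerun to see whether the bugs are detected. Proper implementation requires large computational resources |
| Orthogonal array testing | A mathematical technique for determining which variations of parameters need to be tested |
| Pareto analysis | Analyzes defect patterns to identify causes and sources |
| Performance testing | A term often used interchangeably with stress testing and load testing. Ideally, performance testing (like any other type of testing) is defined in the requirements documentation or in the QA or test plan |
| Positive and negative testing | Tests correct and incorrect values for all inputs |
| Prior defect history testing | Test cases are created or rerun for every defect found in earlier tests of the system |
| Prototyping | A general approach to gathering data from users by building some part of a potential application and demonstrating it to them |
| Random testing | A technique that selects values at random from a specific set of input values (one in which any value is about as good as any other) |
| Range testing | For each input, identifies the range over which the system behaves the same way |
| Recovery testing | Tests how well a system recovers from crashes, hardware failures, or other catastrophic problems |
| Regression testing | Retests an application in light of the changes made during a development spiral, or during the debugging, maintenance, or development of a new release |
| Risk-based testing | Measures the degree of business risk in an application system in order to improve testing |
| Run charts | A graphical representation of how a quality characteristic varies over time |
| Sandwich testing | Uses the top-down and bottom-up techniques at the same time and is a compromise between the two |
| Sanity testing | Typically an initial testing effort to determine whether a new software version runs well enough to be accepted for a major testing effort. For example, if the new software crashes the system every five minutes, slows the system to a crawl, or corrupts the database, it is probably not sound enough to warrant further testing in its current state |
| Security testing | Tests how well the system protects against unauthorized internal or external access, deliberate damage, and so on; may require sophisticated testing techniques |
| State transition testing | A technique that first identifies the states of a system and then writes test cases to exercise the triggers that cause a transition from one state to another |
| Statement coverage testing | Ensures that every statement or line of code is executed at least once |
| Statistical profile testing | Uses statistical techniques to build a usage profile of the system. Based on the expected frequency of use, the tester determines the transaction paths, conditions, functional areas, and data tables that merit testing |
| Stress testing | A term often used interchangeably with performance testing and load testing. Also used to describe system functional testing under unusually heavy loads, heavy repetition of certain actions or inputs, input of large numerical values, or large and complex queries to a database system |
| Structured walkthroughs | A meeting in which project participants examine a work product for errors |
| Syntax testing | A data-driven technique for testing permutations and combinations of input |
| System testing | Black-box type testing based on the overall requirements specification; covers all combined parts of a system |
| Table testing | Tests the access, security, and data integrity of table entries |
| Thread testing | Combines individual units into threads of functionality that together accomplish a function or set of functions |
| Top-down testing | Integrates modules or programs starting from the top |
| Unit testing | The most "micro" scale of testing; tests particular functions or code modules. Typically performed by the developer rather than by testers, because it requires detailed knowledge of the internal program design and code. Not always easy to do unless the application's code is very well structured; may require developing test driver modules or test harnesses |
| Usability testing | Tests whether the software's human-computer interaction is user friendly. This is clearly subjective and depends on the target end user or customer. User interviews, surveys, video recordings of user sessions, and other techniques can be used. Developers and testers are usually not suitable as usability testers |
| User acceptance testing | Determines whether the software is satisfactory to the end user or customer |
| White-box testing | Test cases are defined by examining the logic paths of the system |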
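As an illustration of how equivalence partitioning and boundary value testing from Table 2-2 fit together, here is a minimal sketch. The age rule, the `LOW` and `HIGH` limits, and every function name are assumptions made up for this example; they do not come from the source.

```python
LOW, HIGH = 18, 65  # assumed valid range, for illustration only

def is_eligible(age: int) -> bool:
    """Hypothetical unit under test: accepts ages LOW to HIGH inclusive."""
    return LOW <= age <= HIGH

# Equivalence partitioning: split the input domain into one valid class and
# two invalid classes, each paired with the expected result.
partitions = {
    "invalid_below": (range(0, LOW), False),
    "valid": (range(LOW, HIGH + 1), True),
    "invalid_above": (range(HIGH + 1, 121), False),
}

def representative(values):
    # Any member of a class should behave like the others; take the middle one.
    return values[len(values) // 2]

equivalence_cases = [(representative(values), expected)
                     for values, expected in partitions.values()]

# Boundary value testing: probe the edges of the valid class on both sides.
boundary_cases = [(LOW - 1, False), (LOW, True), (HIGH, True), (HIGH + 1, False)]

def run(cases):
    # Return (input, passed) pairs so failures are easy to spot.
    return [(value, is_eligible(value) == expected) for value, expected in cases]

print(run(equivalence_cases))
print(run(boundary_cases))
```

Three representative inputs cover the classes, while four boundary inputs target the off-by-one mistakes that a mid-range representative would miss.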
