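Preface

This article briefly introduces several commonly used IJKPLAYER APIs and, from the perspective of how those APIs are used, examines how the player works internally. The iOS live-streaming API calls serve as the entry point.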

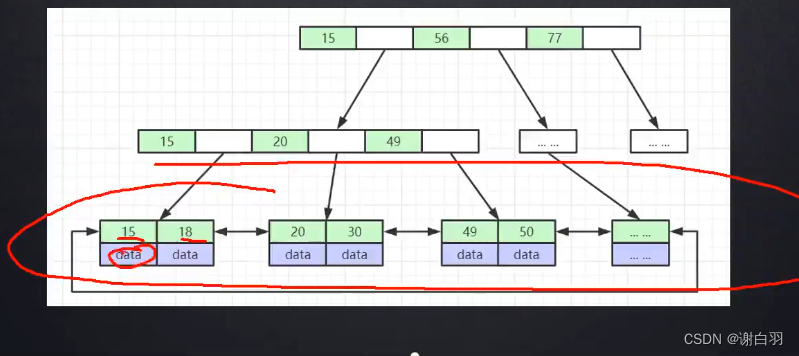

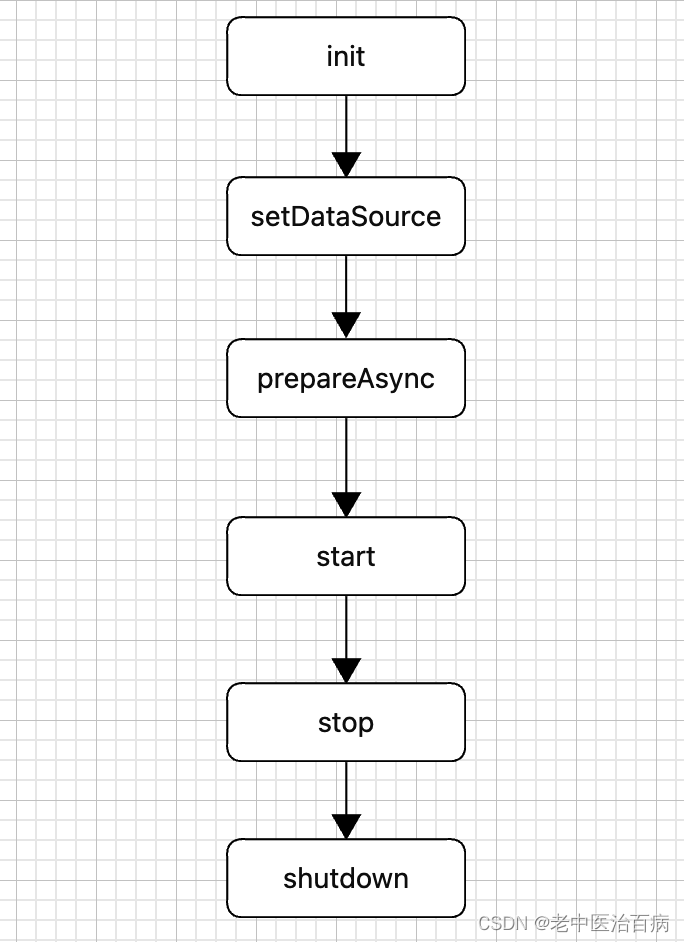

Call flow

init

After a player instance is created, its init method is called first to perform initialization:

    - (IJKFFMediaPlayer *)init
    {
        self = [super init];
        if (self) {
            _renderType = IJKSDLFFPlayrRenderTypeGlView;
            [self nativeSetup];
        }
        return self;
    }

Then, inside init, nativeSetup is called to initialize IJKPLAYER:

    - (void) nativeSetup
    {
        ijkmp_global_init();
        ijkmp_global_set_inject_callback(ijkff_inject_callback);
        _msgPool = [[IJKFFMoviePlayerMessagePool alloc] init];
        _eventHandlers = [[NSMutableSet alloc] init];
        _nativeMediaPlayer = ijkmp_ios_create(ff_media_player_msg_loop);
        ijkmp_set_option(_nativeMediaPlayer, IJKMP_OPT_CATEGORY_PLAYER, "overlay-format", "fcc-_es2");
        [self setInjectOpaque];
        _notificationManager = [[IJKNotificationManager alloc] init];
        [[IJKAudioKit sharedInstance] setupAudioSessionWithoutInterruptHandler];
        _optionsDictionary = nil;
        _isThirdGLView = true;
        _scaleFactor = 1.0f;
        _fps = 1.0f;
        [self registerApplicationObservers];
    }

The ijkio-related callbacks are registered as follows:

    - (void) setInjectOpaque
    {
        IJKFFWeakHolder *weakHolder = [[IJKFFWeakHolder alloc] init];
        weakHolder.object = self;
        ijkmp_set_weak_thiz(_nativeMediaPlayer, (__bridge_retained void *) self);
        ijkmp_set_inject_opaque(_nativeMediaPlayer, (__bridge_retained void *) weakHolder);
        ijkmp_set_ijkio_inject_opaque(_nativeMediaPlayer, (__bridge_retained void *) weakHolder);
    }

Next, ijkmp_ios_create is called. It uses ijkmp_create to create the IjkMediaPlayer and then creates the SDL_Vout and IJKFF_Pipeline objects:

    IjkMediaPlayer *ijkmp_ios_create(int (*msg_loop)(void*))
    {
        IjkMediaPlayer *mp = ijkmp_create(msg_loop);
        if (!mp)
            goto fail;

        mp->ffplayer->vout = SDL_VoutIos_CreateForGLES2();
        if (!mp->ffplayer->vout)
            goto fail;

        mp->ffplayer->pipeline = ffpipeline_create_from_ios(mp->ffplayer);
        if (!mp->ffplayer->pipeline)
            goto fail;

        return mp;

    fail:
        ijkmp_dec_ref_p(&mp);
        return NULL;
    }

The ffpipeline_create_from_ios function deserves special mention: it creates the IJKFF_Pipeline object and registers the callbacks that open the video decoder and the audio output:

    IJKFF_Pipeline *ffpipeline_create_from_ios(FFPlayer *ffp)
    {
        IJKFF_Pipeline *pipeline = ffpipeline_alloc(&g_pipeline_class, sizeof(IJKFF_Pipeline_Opaque));
        if (!pipeline)
            return pipeline;

        IJKFF_Pipeline_Opaque *opaque = pipeline->opaque;
        opaque->ffp = ffp;
        pipeline->func_destroy = func_destroy;
        pipeline->func_open_video_decoder = func_open_video_decoder;
        pipeline->func_open_audio_output = func_open_audio_output;

        return pipeline;
    }

    struct IJKFF_Pipeline_Opaque {
        FFPlayer *ffp;
        bool is_videotoolbox_open;
    };

    static void func_destroy(IJKFF_Pipeline *pipeline)
    {
    }

    static IJKFF_Pipenode *func_open_video_decoder(IJKFF_Pipeline *pipeline, FFPlayer *ffp)
    {
        IJKFF_Pipenode* node = NULL;
        IJKFF_Pipeline_Opaque *opaque = pipeline->opaque;
        if (ffp->videotoolbox) {
            node = ffpipenode_create_video_decoder_from_ios_videotoolbox(ffp);
            if (!node)
                ALOGE("vtb fail!!! switch to ffmpeg decode!!!! \n");
        }
        if (node == NULL) {
            node = ffpipenode_create_video_decoder_from_ffplay(ffp);
            ffp->stat.vdec_type = FFP_PROPV_DECODER_AVCODEC;
            opaque->is_videotoolbox_open = false;
        } else {
            ffp->stat.vdec_type = FFP_PROPV_DECODER_VIDEOTOOLBOX;
            opaque->is_videotoolbox_open = true;
        }
        ffp_notify_msg2(ffp, FFP_MSG_VIDEO_DECODER_OPEN, opaque->is_videotoolbox_open);
        return node;
    }

    static SDL_Aout *func_open_audio_output(IJKFF_Pipeline *pipeline, FFPlayer *ffp)
    {
        return SDL_AoutIos_CreateForAudioUnit();
    }

    static SDL_Class g_pipeline_class = {
        .name = "ffpipeline_ios",
    };

Taking video as an example, func_open_video_decoder is invoked on the read_thread thread through the following call chain:

read_thread => stream_component_open(FFPlayer *ffp, int stream_index) => ffpipeline_open_video_decoder

    IJKFF_Pipenode* ffpipeline_open_video_decoder(IJKFF_Pipeline *pipeline, FFPlayer *ffp)
    {
        return pipeline->func_open_video_decoder(pipeline, ffp);
    }

In short, init mainly initializes ijkplayer components such as the demuxer, the decoders, network I/O, and ijkcache, and sets the enabled options.
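The callback registration used by ffpipeline_create_from_ios can be sketched in plain C as follows. This is a minimal illustration rather than ijkplayer's actual code: the Pipeline and Node types, the hw_enabled and hw_available flags, and every function name below are hypothetical stand-ins for IJKFF_Pipeline, IJKFF_Pipenode, and ffp->videotoolbox. It shows a platform-specific function pointer being registered once and later invoked through a generic wrapper, with a fallback from the hardware decoder to the software decoder.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified stand-in for IJKFF_Pipenode. */
typedef struct Node {
    const char *name;
} Node;

/* Hypothetical, simplified stand-in for IJKFF_Pipeline. */
typedef struct Pipeline Pipeline;
struct Pipeline {
    bool hw_enabled;    /* plays the role of ffp->videotoolbox */
    bool hw_available;  /* whether a hardware decoder can be created */
    bool hw_open;       /* plays the role of opaque->is_videotoolbox_open */
    Node *(*open_video_decoder)(Pipeline *p);  /* registered callback */
};

static Node g_hw_node = { "hardware" };
static Node g_sw_node = { "software" };

/* Returns NULL when the hardware decoder cannot be created. */
static Node *create_hw_decoder(Pipeline *p)
{
    return p->hw_available ? &g_hw_node : NULL;
}

static Node *create_sw_decoder(Pipeline *p)
{
    (void)p;
    return &g_sw_node;
}

/* Platform callback: try hardware first, fall back to software. */
static Node *ios_open_video_decoder(Pipeline *p)
{
    Node *node = NULL;
    if (p->hw_enabled)
        node = create_hw_decoder(p);
    if (node == NULL) {
        node = create_sw_decoder(p);
        p->hw_open = false;
    } else {
        p->hw_open = true;
    }
    return node;
}

/* Registers the platform callback, as the create function does. */
static void pipeline_init_ios(Pipeline *p, bool hw_enabled, bool hw_available)
{
    p->hw_enabled = hw_enabled;
    p->hw_available = hw_available;
    p->hw_open = false;
    p->open_video_decoder = ios_open_video_decoder;
}

/* Generic entry point: callers never name the platform function directly. */
static Node *pipeline_open_video_decoder(Pipeline *p)
{
    return p->open_video_decoder(p);
}
```

With hw_available set to false, pipeline_open_video_decoder returns the software node, which mirrors how func_open_video_decoder falls back to the FFmpeg decoder when the VideoToolbox node cannot be created.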

setDataSource

Next, the business layer calls setDataSource, passing in the playback URL:

    - (int) setDataSource:(NSString *)url
    {
        return ijkmp_set_data_source(_nativeMediaPlayer, [url UTF8String]);
    }

In the end, the url argument is stored in the data_source field of the IjkMediaPlayer struct:

    static int ijkmp_set_data_source_l(IjkMediaPlayer *mp, const char *url)
    {
        assert(mp);
        assert(url);

        // MPST_RET_IF_EQ(mp->mp_state, MP_STATE_IDLE);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_INITIALIZED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_ASYNC_PREPARING);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_PREPARED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_STARTED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_PAUSED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_COMPLETED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_STOPPED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_ERROR);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_END);

        freep((void**)&mp->data_source);
        mp->data_source = strdup(url);
        if (!mp->data_source)
            return EIJK_OUT_OF_MEMORY;

        ijkmp_change_state_l(mp, MP_STATE_INITIALIZED);
        return 0;
    }

prepareAsync
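Before moving on to prepareAsync, note the state guards in ijkmp_set_data_source_l: every state except idle makes the call return early. A minimal sketch of this guard pattern follows. The macro body, the error code values, and the Player type are assumptions made for illustration, since the real MPST_RET_IF_EQ definition is not shown in this article.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical states and error codes; ijkplayer's real values may differ. */
typedef enum {
    STATE_IDLE,
    STATE_INITIALIZED,
    STATE_PREPARING,
    STATE_STARTED
} PlayerState;

#define ERR_INVALID_STATE (-3)
#define ERR_OUT_OF_MEMORY (-2)

/* Return early from the enclosing function when the state is forbidden. */
#define RET_IF_EQ(real_state, forbidden_state)        \
    do {                                              \
        if ((real_state) == (forbidden_state))        \
            return ERR_INVALID_STATE;                 \
    } while (0)

typedef struct {
    PlayerState state;
    char *data_source;
} Player;

static int player_set_data_source(Player *p, const char *url)
{
    /* Only STATE_IDLE passes all of the guards below. */
    RET_IF_EQ(p->state, STATE_INITIALIZED);
    RET_IF_EQ(p->state, STATE_PREPARING);
    RET_IF_EQ(p->state, STATE_STARTED);

    /* Copy the URL so the player owns its own string. */
    size_t len = strlen(url) + 1;
    char *copy = malloc(len);
    if (!copy)
        return ERR_OUT_OF_MEMORY;
    memcpy(copy, url, len);

    free(p->data_source);
    p->data_source = copy;
    p->state = STATE_INITIALIZED;
    return 0;
}
```

A second call on the same player is rejected because the state is no longer idle, so the stored URL cannot change once preparation may have started.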

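Immediately afterwards, prepareAsync is called. It performs the preparation needed before playback, such as creating the relevant threads, initializing the queues (PacketQueue and FrameQueue), creating the locks and condition variables, and initializing the reference clocks:

    - (int) prepareAsync
    {
        return ijkmp_prepare_async(_nativeMediaPlayer);
    }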

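- msg loop thread: creates the message loop thread;

- PacketQueue / FrameQueue / reference clock initialization, plus creation of the locks and condition variables;

- video_refresh_thread: creates the video display thread;

- read_thread: the network I/O thread; the decoder threads are created on this thread, which also performs the initialization work for audio, video, and subtitle output.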
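The queues initialized in this step are bounded buffers that sit between threads. As a rough illustration of the FrameQueue idea, here is a minimal fixed-capacity ring buffer. It is a simplified sketch under stated assumptions: the Frame field and the function names are hypothetical, and the mutex and condition variable that a real cross-thread queue needs are omitted.

```c
#include <string.h>

#define FRAME_QUEUE_CAPACITY 16

/* Hypothetical frame: only a presentation timestamp in milliseconds. */
typedef struct {
    int pts_ms;
} Frame;

typedef struct {
    Frame queue[FRAME_QUEUE_CAPACITY];
    int rindex;    /* next slot to read */
    int windex;    /* next slot to write */
    int size;      /* number of frames currently stored */
    int max_size;  /* usable capacity, at most FRAME_QUEUE_CAPACITY */
} FrameQueue;

/* Returns 0 on success, -1 if max_size is out of range. */
static int frame_queue_init(FrameQueue *f, int max_size)
{
    if (max_size < 1 || max_size > FRAME_QUEUE_CAPACITY)
        return -1;
    memset(f, 0, sizeof(*f));
    f->max_size = max_size;
    return 0;
}

/* Returns 0 on success, -1 if the queue is full. */
static int frame_queue_push(FrameQueue *f, Frame frame)
{
    if (f->size >= f->max_size)
        return -1;
    f->queue[f->windex] = frame;
    f->windex = (f->windex + 1) % f->max_size;
    f->size++;
    return 0;
}

/* Returns 0 on success, -1 if the queue is empty. */
static int frame_queue_pop(FrameQueue *f, Frame *out)
{
    if (f->size == 0)
        return -1;
    *out = f->queue[f->rindex];
    f->rindex = (f->rindex + 1) % f->max_size;
    f->size--;
    return 0;
}
```

A small capacity keeps decoded frames from piling up: in this sketch a full queue rejects the push, whereas a threaded version would make the producer wait until the display side has consumed a frame.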

Expanding the source of ijkmp_prepare_async:

    static int ijkmp_prepare_async_l(IjkMediaPlayer *mp)
    {
        assert(mp);

        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_IDLE);
        // MPST_RET_IF_EQ(mp->mp_state, MP_STATE_INITIALIZED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_ASYNC_PREPARING);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_PREPARED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_STARTED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_PAUSED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_COMPLETED);
        // MPST_RET_IF_EQ(mp->mp_state, MP_STATE_STOPPED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_ERROR);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_END);

        assert(mp->data_source);

        ijkmp_change_state_l(mp, MP_STATE_ASYNC_PREPARING);

        // released in msg_loop
        ijkmp_inc_ref(mp);
        // mp->msg_thread = SDL_CreateThreadEx(&mp->_msg_thread, ijkmp_msg_loop, mp, "ff_msg_loop");
        mp->msg_thread = SDL_CreateThread(ijkmp_msg_loop, "ff_msg_loop", mp);
        // msg_thread is detached inside msg_loop
        // TODO: 9 release weak_thiz if pthread_create() failed;

        int retval = ffp_prepare_async_l(mp->ffplayer, mp->data_source);
        if (retval < 0) {
            ijkmp_change_state_l(mp, MP_STATE_ERROR);
            return retval;
        }

        return 0;
    }

This function first creates the ijkmp_msg_loop message loop thread and then calls ffp_prepare_async_l:

    int ffp_prepare_async_l(FFPlayer *ffp, const char *file_name)
    {
        assert(ffp);
        assert(!ffp->is);
        assert(file_name);

        if (av_stristart(file_name, "rtmp", NULL) ||
            av_stristart(file_name, "rtsp", NULL)) {
            // There is total different meaning for 'timeout' option in rtmp
            av_log(ffp, AV_LOG_WARNING, "remove 'timeout' option for rtmp.\n");
            av_dict_set(&ffp->format_opts, "timeout", NULL, 0);
        }

        /* there is a length limit in avformat */
        if (strlen(file_name) + 1 > 1024) {
            av_log(ffp, AV_LOG_ERROR, "%s too long url\n", __func__);
            if (avio_find_protocol_name("ijklongurl:")) {
                av_dict_set(&ffp->format_opts, "ijklongurl-url", file_name, 0);
                file_name = "ijklongurl:";
            }
        }

        av_log(NULL, AV_LOG_INFO, "===== versions =====\n");
        ffp_show_version_str(ffp, "ijkplayer", ijk_version_info());
        ffp_show_version_str(ffp, "FFmpeg", av_version_info());
        ffp_show_version_int(ffp, "libavutil", avutil_version());
        ffp_show_version_int(ffp, "libavcodec", avcodec_version());
        ffp_show_version_int(ffp, "libavformat", avformat_version());
        ffp_show_version_int(ffp, "libswscale", swscale_version());
        ffp_show_version_int(ffp, "libswresample", swresample_version());
        av_log(NULL, AV_LOG_INFO, "===== options =====\n");
        ffp_show_dict(ffp, "player-opts", ffp->player_opts);
        ffp_show_dict(ffp, "format-opts", ffp->format_opts);
        ffp_show_dict(ffp, "codec-opts ", ffp->codec_opts);
        ffp_show_dict(ffp, "sws-opts ", ffp->sws_dict);
        ffp_show_dict(ffp, "swr-opts ", ffp->swr_opts);
        av_log(NULL, AV_LOG_INFO, "===================\n");

        av_opt_set_dict(ffp, &ffp->player_opts);
        if (!ffp->aout) {
            ffp->aout = ffpipeline_open_audio_output(ffp->pipeline, ffp);
            if (!ffp->aout)
                return -1;
        }

    #if CONFIG_AVFILTER
        if (ffp->vfilter0) {
            GROW_ARRAY(ffp->vfilters_list, ffp->nb_vfilters);
            ffp->vfilters_list[ffp->nb_vfilters - 1] = ffp->vfilter0;
        }
    #endif

        VideoState *is = stream_open(ffp, file_name, NULL);
        if (!is) {
            av_log(NULL, AV_LOG_WARNING, "ffp_prepare_async_l: stream_open failed OOM");
            return EIJK_OUT_OF_MEMORY;
        }

        ffp->is = is;
        ffp->input_filename = av_strdup(file_name);
        return 0;
    }

Here stream_open(ffp, file_name, NULL) is called:

    static VideoState *stream_open(FFPlayer *ffp, const char *filename, AVInputFormat *iformat)
    {
        assert(!ffp->is);
        VideoState *is;

        is = av_mallocz(sizeof(VideoState));
        if (!is)
            return NULL;
        is->filename = av_strdup(filename);
        if (!is->filename)
            goto fail;
        is->iformat = iformat;
        is->ytop = 0;
        is->xleft = 0;
    #if defined(__ANDROID__)
        if (ffp->soundtouch_enable) {
            is->handle = ijk_soundtouch_create();
        }
    #endif

        /* start video display */
        if (frame_queue_init(&is->pictq, &is->videoq, ffp->pictq_size, 1) < 0)
            goto fail;
        if (frame_queue_init(&is->subpq, &is->subtitleq, SUBPICTURE_QUEUE_SIZE, 0) < 0)
            goto fail;
        if (frame_queue_init(&is->sampq, &is->audioq, SAMPLE_QUEUE_SIZE, 1) < 0)
            goto fail;

        if (packet_queue_init(&is->videoq) < 0 ||
            packet_queue_init(&is->audioq) < 0 ||
            packet_queue_init(&is->subtitleq) < 0)
            goto fail;

        if (!(is->continue_read_thread = SDL_CreateCond())) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateCond(): %s\n", SDL_GetError());
            goto fail;
        }

        if (!(is->video_accurate_seek_cond = SDL_CreateCond())) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateCond(): %s\n", SDL_GetError());
            ffp->enable_accurate_seek = 0;
        }

        if (!(is->audio_accurate_seek_cond = SDL_CreateCond())) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateCond(): %s\n", SDL_GetError());
            ffp->enable_accurate_seek = 0;
        }

        init_clock(&is->vidclk, &is->videoq.serial);
        init_clock(&is->audclk, &is->audioq.serial);
        init_clock(&is->extclk, &is->extclk.serial);
        is->audio_clock_serial = -1;
        if (ffp->startup_volume < 0)
            av_log(NULL, AV_LOG_WARNING, "-volume=%d < 0, setting to 0\n", ffp->startup_volume);
        if (ffp->startup_volume > 100)
            av_log(NULL, AV_LOG_WARNING, "-volume=%d > 100, setting to 100\n", ffp->startup_volume);
        ffp->startup_volume = av_clip(ffp->startup_volume, 0, 100);
        ffp->startup_volume = av_clip(SDL_MIX_MAXVOLUME * ffp->startup_volume / 100, 0, SDL_MIX_MAXVOLUME);
        is->audio_volume = ffp->startup_volume;
        is->muted = 0;
        is->av_sync_type = ffp->av_sync_type;

        is->play_mutex = SDL_CreateMutex();
        is->accurate_seek_mutex = SDL_CreateMutex();
        ffp->is = is;
        is->pause_req = !ffp->start_on_prepared;

        // is->video_refresh_tid = SDL_CreateThreadEx(&is->_video_refresh_tid, video_refresh_thread, ffp, "ff_vout");
        is->video_refresh_tid = SDL_CreateThread(video_refresh_thread, "ff_vout", ffp);
        if (!is->video_refresh_tid) {
            av_freep(&ffp->is);
            return NULL;
        }

        is->initialized_decoder = 0;
        // is->read_tid = SDL_CreateThreadEx(&is->_read_tid, read_thread, ffp, "ff_read");
        is->read_tid = SDL_CreateThread(read_thread, "ff_read", ffp);
        if (!is->read_tid) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateThread(): %s\n", SDL_GetError());
            goto fail;
        }

        if (ffp->async_init_decoder && !ffp->video_disable && ffp->video_mime_type && strlen(ffp->video_mime_type) > 0
            && ffp->mediacodec_default_name && strlen(ffp->mediacodec_default_name) > 0) {
            if (ffp->mediacodec_all_videos || ffp->mediacodec_avc || ffp->mediacodec_hevc || ffp->mediacodec_mpeg2) {
                decoder_init(&is->viddec, NULL, &is->videoq, is->continue_read_thread);
                ffp->node_vdec = ffpipeline_init_video_decoder(ffp->pipeline, ffp);
            }
        }
        is->initialized_decoder = 1;

        return is;

    fail:
        is->initialized_decoder = 1;
        is->abort_request = true;
        if (is->video_refresh_tid)
            SDL_WaitThread(is->video_refresh_tid, NULL);
        stream_close(ffp);
        return NULL;
    }

Here the PacketQueue, the FrameQueue, and the reference clocks are initialized, the related mutexes and condition variables are created, and finally the video_refresh_thread display thread and the read_thread network I/O thread are created.
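Note that init_clock receives a pointer to the serial of a packet queue. In ffplay-derived players, a queue's serial changes when the queue is flushed (for example after a seek), and a clock whose stored serial no longer matches is treated as invalid. The sketch below illustrates that idea under simplifying assumptions: the types are reduced to the relevant fields, packet_queue_bump_serial is a hypothetical helper, and the real get_clock also accounts for elapsed time, which is omitted here.

```c
#include <math.h>

/* Reduced to the one field that matters for this illustration. */
typedef struct {
    int serial;  /* incremented each time the queue is flushed */
} PacketQueue;

typedef struct {
    double pts;               /* last timestamp set on the clock, in seconds */
    int serial;               /* queue serial at the time pts was set */
    const int *queue_serial;  /* points at the owning queue's serial */
} Clock;

/* Hypothetical helper standing in for "a flush packet was queued". */
static void packet_queue_bump_serial(PacketQueue *q)
{
    q->serial++;
}

static void set_clock(Clock *c, double pts, int serial)
{
    c->pts = pts;
    c->serial = serial;
}

static void init_clock(Clock *c, const int *queue_serial)
{
    c->queue_serial = queue_serial;
    set_clock(c, NAN, -1);  /* invalid until a real timestamp arrives */
}

/* Returns NAN when the clock belongs to an older, flushed generation. */
static double get_clock(const Clock *c)
{
    if (*c->queue_serial != c->serial)
        return NAN;
    return c->pts;
}
```

This would also explain why the external clock is initialized with a pointer to its own serial field: a clock that is compared against itself never goes stale because of a queue flush.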

The read_thread thread deserves a closer look:

    /* this thread gets the stream from the disk or the network */
    static int read_thread(void *arg)
    {
        FFPlayer *ffp = arg;
        VideoState *is = ffp->is;
        AVFormatContext *ic = NULL;
        int err, i, ret av_unused;
        int st_index[AVMEDIA_TYPE_NB];
        AVPacket pkt1, *pkt = &pkt1;
        int64_t stream_start_time;
        int completed = 0;
        int pkt_in_play_range = 0;
        AVDictionaryEntry *t;
        SDL_mutex *wait_mutex = SDL_CreateMutex();
        int scan_all_pmts_set = 0;
        int64_t pkt_ts;
        int last_error = 0;
        int64_t prev_io_tick_counter = 0;
        int64_t io_tick_counter = 0;
        int init_ijkmeta = 0;

        if (!wait_mutex) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateMutex(): %s\n", SDL_GetError());
            ret = AVERROR(ENOMEM);
            goto fail;
        }

        memset(st_index, -1, sizeof(st_index));
        is->last_video_stream = is->video_stream = -1;
        is->last_audio_stream = is->audio_stream = -1;
        is->last_subtitle_stream = is->subtitle_stream = -1;
        is->eof = 0;

        ic = avformat_alloc_context();
        if (!ic) {
            av_log(NULL, AV_LOG_FATAL, "Could not allocate context.\n");
            ret = AVERROR(ENOMEM);
            goto fail;
        }
        ic->interrupt_callback.callback = decode_interrupt_cb;
        ic->interrupt_callback.opaque = is;
        if (!av_dict_get(ffp->format_opts, "scan_all_pmts", NULL, AV_DICT_MATCH_CASE)) {
            av_dict_set(&ffp->format_opts, "scan_all_pmts", "1", AV_DICT_DONT_OVERWRITE);
            scan_all_pmts_set = 1;
        }
        if (av_stristart(is->filename, "rtmp", NULL) ||
            av_stristart(is->filename, "rtsp", NULL)) {
            // There is total different meaning for 'timeout' option in rtmp
            av_log(ffp, AV_LOG_WARNING, "remove 'timeout' option for rtmp.\n");
            av_dict_set(&ffp->format_opts, "timeout", NULL, 0);
        }

        if (ffp->skip_calc_frame_rate) {
            av_dict_set_int(&ic->metadata, "skip-calc-frame-rate", ffp->skip_calc_frame_rate, 0);
            av_dict_set_int(&ffp->format_opts, "skip-calc-frame-rate", ffp->skip_calc_frame_rate, 0);
        }

        if (ffp->iformat_name)
            is->iformat = av_find_input_format(ffp->iformat_name);
        err = avformat_open_input(&ic, is->filename, is->iformat, &ffp->format_opts);
        if (err < 0) {
            print_error(is->filename, err);
            ret = -1;
            last_error = err;
            goto fail;
        }
        ffp_notify_msg1(ffp, FFP_MSG_OPEN_INPUT);

        if (scan_all_pmts_set)
            av_dict_set(&ffp->format_opts, "scan_all_pmts", NULL, AV_DICT_MATCH_CASE);

        if ((t = av_dict_get(ffp->format_opts, "", NULL, AV_DICT_IGNORE_SUFFIX))) {
            av_log(NULL, AV_LOG_ERROR, "Option %s not found.\n", t->key);
    #ifdef FFP_MERGE
            ret = AVERROR_OPTION_NOT_FOUND;
            goto fail;
    #endif
        }
        is->ic = ic;

        if (ffp->genpts)
            ic->flags |= AVFMT_FLAG_GENPTS;

        av_format_inject_global_side_data(ic);
        //
        //AVDictionary **opts;
        //int orig_nb_streams;
        //opts = setup_find_stream_info_opts(ic, ffp->codec_opts);
        //orig_nb_streams = ic->nb_streams;

        if (ffp->find_stream_info) {
            AVDictionary **opts = setup_find_stream_info_opts(ic, ffp->codec_opts);
            int orig_nb_streams = ic->nb_streams;

            do {
                if (av_stristart(is->filename, "data:", NULL) && orig_nb_streams > 0) {
                    for (i = 0; i < orig_nb_streams; i++) {
                        if (!ic->streams[i] || !ic->streams[i]->codecpar || ic->streams[i]->codecpar->profile == FF_PROFILE_UNKNOWN) {
                            break;
                        }
                    }

                    if (i == orig_nb_streams) {
                        break;
                    }
                }
                err = avformat_find_stream_info(ic, opts);
            } while(0);
            ffp_notify_msg1(ffp, FFP_MSG_FIND_STREAM_INFO);

            for (i = 0; i < orig_nb_streams; i++)
                av_dict_free(&opts[i]);
            av_freep(&opts);

            if (err < 0) {
                av_log(NULL, AV_LOG_WARNING,
                       "%s: could not find codec parameters\n", is->filename);
                ret = -1;
                last_error = err;
                goto fail;
            }
        }
        if (ic->pb)
            ic->pb->eof_reached = 0; // FIXME hack, ffplay maybe should not use avio_feof() to test for the end

        if (ffp->seek_by_bytes < 0)
            ffp->seek_by_bytes = !!(ic->iformat->flags & AVFMT_TS_DISCONT) && strcmp("ogg", ic->iformat->name);

        is->max_frame_duration = (ic->iformat->flags & AVFMT_TS_DISCONT) ? 10.0 : 3600.0;
        is->max_frame_duration = 10.0;
        av_log(ffp, AV_LOG_INFO, "max_frame_duration: %.3f\n", is->max_frame_duration);

    #ifdef FFP_MERGE
        if (!window_title && (t = av_dict_get(ic->metadata, "title", NULL, 0)))
            window_title = av_asprintf("%s - %s", t->value, input_filename);
    #endif
        /* if seeking requested, we execute it */
        if (ffp->start_time != AV_NOPTS_VALUE) {
            int64_t timestamp;

            timestamp = ffp->start_time;
            /* add the stream start time */
            if (ic->start_time != AV_NOPTS_VALUE)
                timestamp += ic->start_time;
            ret = avformat_seek_file(ic, -1, INT64_MIN, timestamp, INT64_MAX, 0);
            if (ret < 0) {
                av_log(NULL, AV_LOG_WARNING, "%s: could not seek to position %0.3f\n",
                       is->filename, (double)timestamp / AV_TIME_BASE);
            }
        }

        // Force realtime to 0 (overriding the original value) and read
        // max_cached_duration from the externally supplied player options
        // is->realtime = is_realtime(ic);
        is->realtime = 0;
        is->drag_to_end = false;
        AVDictionaryEntry *e = av_dict_get(ffp->player_opts, "max_cached_duration", NULL, 0);
        if (e) {
            int max_cached_duration = atoi(e->value);
            if (max_cached_duration <= 0) {
                is->max_cached_duration = 0;
            } else {
                is->max_cached_duration = max_cached_duration;
            }
        } else {
            is->max_cached_duration = 0;
        }

        av_dump_format(ic, 0, is->filename, 0);

        int video_stream_count = 0;
        int h264_stream_count = 0;
        int first_h264_stream = -1;
        for (i = 0; i < ic->nb_streams; i++) {
            AVStream *st = ic->streams[i];
            enum AVMediaType type = st->codecpar->codec_type;
            st->discard = AVDISCARD_ALL;
            if (type >= 0 && ffp->wanted_stream_spec[type] && st_index[type] == -1)
                if (avformat_match_stream_specifier(ic, st, ffp->wanted_stream_spec[type]) > 0)
                    st_index[type] = i;

            // choose first h264
            if (type == AVMEDIA_TYPE_VIDEO) {
                enum AVCodecID codec_id = st->codecpar->codec_id;
                video_stream_count++;
                if (codec_id == AV_CODEC_ID_H264) {
                    h264_stream_count++;
                    if (first_h264_stream < 0)
                        first_h264_stream = i;
                }
            }
        }
        if (video_stream_count > 1 && st_index[AVMEDIA_TYPE_VIDEO] < 0) {
            st_index[AVMEDIA_TYPE_VIDEO] = first_h264_stream;
            av_log(NULL, AV_LOG_WARNING, "multiple video stream found, prefer first h264 stream: %d\n", first_h264_stream);
        }
        if (!ffp->video_disable)
            st_index[AVMEDIA_TYPE_VIDEO] =
                av_find_best_stream(ic, AVMEDIA_TYPE_VIDEO,
                                    st_index[AVMEDIA_TYPE_VIDEO], -1, NULL, 0);
        if (!ffp->audio_disable)
            st_index[AVMEDIA_TYPE_AUDIO] =
                av_find_best_stream(ic, AVMEDIA_TYPE_AUDIO,
                                    st_index[AVMEDIA_TYPE_AUDIO],
                                    st_index[AVMEDIA_TYPE_VIDEO],
                                    NULL, 0);
        if (!ffp->video_disable && !ffp->subtitle_disable)
            st_index[AVMEDIA_TYPE_SUBTITLE] =
                av_find_best_stream(ic, AVMEDIA_TYPE_SUBTITLE,
                                    st_index[AVMEDIA_TYPE_SUBTITLE],
                                    (st_index[AVMEDIA_TYPE_AUDIO] >= 0 ?
                                     st_index[AVMEDIA_TYPE_AUDIO] :
                                     st_index[AVMEDIA_TYPE_VIDEO]),
                                    NULL, 0);

        is->show_mode = ffp->show_mode;
    #ifdef FFP_MERGE // bbc: dunno if we need this
        if (st_index[AVMEDIA_TYPE_VIDEO] >= 0) {
            AVStream *st = ic->streams[st_index[AVMEDIA_TYPE_VIDEO]];
            AVCodecParameters *codecpar = st->codecpar;
            AVRational sar = av_guess_sample_aspect_ratio(ic, st, NULL);
            if (codecpar->width)
                set_default_window_size(codecpar->width, codecpar->height, sar);
        }
    #endif

        /* open the streams */
        if (st_index[AVMEDIA_TYPE_AUDIO] >= 0) {
            stream_component_open(ffp, st_index[AVMEDIA_TYPE_AUDIO]);
        } else {
            ffp->av_sync_type = AV_SYNC_VIDEO_MASTER;
            is->av_sync_type = ffp->av_sync_type;
        }

        ret = -1;
        if (st_index[AVMEDIA_TYPE_VIDEO] >= 0) {
            ret = stream_component_open(ffp, st_index[AVMEDIA_TYPE_VIDEO]);
        }
        if (is->show_mode == SHOW_MODE_NONE)
            is->show_mode = ret >= 0 ? SHOW_MODE_VIDEO : SHOW_MODE_RDFT;

        if (st_index[AVMEDIA_TYPE_SUBTITLE] >= 0) {
            stream_component_open(ffp, st_index[AVMEDIA_TYPE_SUBTITLE]);
        }
        ffp_notify_msg1(ffp, FFP_MSG_COMPONENT_OPEN);

        if (!ffp->ijkmeta_delay_init) {
            ijkmeta_set_avformat_context_l(ffp->meta, ic);
        }

        ffp->stat.bit_rate = ic->bit_rate;
        if (st_index[AVMEDIA_TYPE_VIDEO] >= 0)
            ijkmeta_set_int64_l(ffp->meta, IJKM_KEY_VIDEO_STREAM, st_index[AVMEDIA_TYPE_VIDEO]);
        if (st_index[AVMEDIA_TYPE_AUDIO] >= 0)
            ijkmeta_set_int64_l(ffp->meta, IJKM_KEY_AUDIO_STREAM, st_index[AVMEDIA_TYPE_AUDIO]);
        if (st_index[AVMEDIA_TYPE_SUBTITLE] >= 0)
            ijkmeta_set_int64_l(ffp->meta, IJKM_KEY_TIMEDTEXT_STREAM, st_index[AVMEDIA_TYPE_SUBTITLE]);

        if (is->video_stream < 0 && is->audio_stream < 0) {
            av_log(NULL, AV_LOG_FATAL, "Failed to open file '%s' or configure filtergraph\n",
                   is->filename);
            ret = -1;
            last_error = AVERROR_STREAM_NOT_FOUND;
            goto fail;
        }
        if (is->audio_stream >= 0) {
            is->audioq.is_buffer_indicator = 1;
            is->buffer_indicator_queue = &is->audioq;
        } else if (is->video_stream >= 0) {
            is->videoq.is_buffer_indicator = 1;
            is->buffer_indicator_queue = &is->videoq;
        } else {
            assert("invalid streams");
        }

        if (ffp->infinite_buffer < 0 && is->realtime)
            ffp->infinite_buffer = 1;

        if (is->pause_req && !ffp->render_wait_start && !ffp->cover_after_prepared)
            toggle_pause(ffp, 1);
        if (is->video_st && is->video_st->codecpar) {
            AVCodecParameters *codecpar = is->video_st->codecpar;
            ffp_notify_msg3(ffp, FFP_MSG_VIDEO_SIZE_CHANGED, codecpar->width, codecpar->height);
            ffp_notify_msg3(ffp, FFP_MSG_SAR_CHANGED, codecpar->sample_aspect_ratio.num, codecpar->sample_aspect_ratio.den);
        }
        ffp->prepared = true;
        ffp_notify_msg1(ffp, FFP_MSG_PREPARED);
        if (!ffp->render_wait_start && !ffp->cover_after_prepared) {
            while (is->pause_req && !is->abort_request) {
                SDL_Delay(20);
            }
        }
        if (ffp->auto_resume) {
            ffp_notify_msg1(ffp, FFP_REQ_START);
            ffp->auto_resume = 0;
        }
        /* offset should be seeked*/
        if (ffp->seek_at_start > 0) {
            ffp_seek_to_l(ffp, (long)(ffp->seek_at_start));
        }

        for (;;) {
            if (is->abort_request)
                break;

    #ifdef FFP_MERGE
            if (is->paused != is->last_paused) {
                is->last_paused = is->paused;
                if (is->paused)
                    is->read_pause_return = av_read_pause(ic);
                else
                    av_read_play(ic);
            }
    #endif
    #if CONFIG_RTSP_DEMUXER || CONFIG_MMSH_PROTOCOL
            if (is->paused &&
                (!strcmp(ic->iformat->name, "rtsp") ||
                 (ic->pb && !strncmp(ffp->input_filename, "mmsh:", 5)))) {
                /* wait 10 ms to avoid trying to get another packet */
                /* XXX: horrible */
                SDL_Delay(10);
                continue;
            }
    #endif
            if (is->seek_req) {
                int64_t seek_target = is->seek_pos;
                int64_t seek_min = is->seek_rel > 0 ? seek_target - is->seek_rel + 2: INT64_MIN;
                int64_t seek_max = is->seek_rel < 0 ? seek_target - is->seek_rel - 2: INT64_MAX;
                // FIXME the +-2 is due to rounding being not done in the correct direction in generation
                // of the seek_pos/seek_rel variables

                ffp_toggle_buffering(ffp, 1);
                ffp_notify_msg3(ffp, FFP_MSG_BUFFERING_UPDATE, 0, 0);
                ret = avformat_seek_file(is->ic, -1, seek_min, seek_target, seek_max, is->seek_flags);
                if (ret < 0) {
                    av_log(NULL, AV_LOG_ERROR,
                           "%s: error while seeking\n", is->ic->filename);
                } else {
                    if (is->audio_stream >= 0) {
                        packet_queue_flush(&is->audioq);
                        packet_queue_put(&is->audioq, &flush_pkt);
                        // TODO: clear invalid audio data
                        // SDL_AoutFlushAudio(ffp->aout);
                    }
                    if (is->subtitle_stream >= 0) {
                        packet_queue_flush(&is->subtitleq);
                        packet_queue_put(&is->subtitleq, &flush_pkt);
                    }
                    if (is->video_stream >= 0) {
                        if (ffp->node_vdec) {
                            ffpipenode_flush(ffp->node_vdec);
                        }
                        packet_queue_flush(&is->videoq);
                        packet_queue_put(&is->videoq, &flush_pkt);
                    }
                    if (is->seek_flags & AVSEEK_FLAG_BYTE) {
                        set_clock(&is->extclk, NAN, 0);
                    } else {
                        set_clock(&is->extclk, seek_target / (double)AV_TIME_BASE, 0);
                    }

                    is->latest_video_seek_load_serial = is->videoq.serial;
                    is->latest_audio_seek_load_serial = is->audioq.serial;
                    is->latest_seek_load_start_at = av_gettime();
                }
                ffp->dcc.current_high_water_mark_in_ms = ffp->dcc.first_high_water_mark_in_ms;
                is->seek_req = 0;
                is->queue_attachments_req = 1;
                is->eof = 0;

    #ifdef FFP_MERGE
                if (is->paused)
                    step_to_next_frame(is);
    #endif
                completed = 0;
                SDL_LockMutex(ffp->is->play_mutex);
                if (ffp->auto_resume) {
                    is->pause_req = 0;
                    if (ffp->packet_buffering)
                        is->buffering_on = 1;
                    ffp->auto_resume = 0;
                    stream_update_pause_l(ffp);
                }
                if (is->pause_req)
                    step_to_next_frame_l(ffp);
                SDL_UnlockMutex(ffp->is->play_mutex);

                if (ffp->enable_accurate_seek) {
                    is->drop_aframe_count = 0;
                    is->drop_vframe_count = 0;
                    SDL_LockMutex(is->accurate_seek_mutex);
                    if (is->video_stream >= 0) {
                        is->video_accurate_seek_req = 1;
                    }
                    if (is->audio_stream >= 0 && !is->pause_req && !is->drag_to_end) {
                        is->audio_accurate_seek_req = 1;
                    }
                    SDL_CondSignal(is->audio_accurate_seek_cond);
                    SDL_CondSignal(is->video_accurate_seek_cond);
                    SDL_UnlockMutex(is->accurate_seek_mutex);
                }

                ffp_notify_msg3(ffp, FFP_MSG_SEEK_COMPLETE, (int)fftime_to_milliseconds(seek_target), ret);
                ffp_toggle_buffering(ffp, 1);
            }
            if (is->queue_attachments_req) {
                if (is->video_st && (is->video_st->disposition & AV_DISPOSITION_ATTACHED_PIC)) {
                    AVPacket copy = { 0 };
                    if ((ret = av_packet_ref(&copy, &is->video_st->attached_pic)) < 0)
                        goto fail;
                    packet_queue_put(&is->videoq, &copy);
                    packet_queue_put_nullpacket(&is->videoq, is->video_stream);
                }
                is->queue_attachments_req = 0;
            }

            /* if the queue are full, no need to read more */
            if (ffp->infinite_buffer<1 && !is->seek_req &&
    #ifdef FFP_MERGE
                  (is->audioq.size + is->videoq.size + is->subtitleq.size > MAX_QUEUE_SIZE
    #else
                  (is->audioq.size + is->videoq.size + is->subtitleq.size > ffp->dcc.max_buffer_size
    #endif
                || ( stream_has_enough_packets(is->audio_st, is->audio_stream, &is->audioq, MIN_FRAMES)
                    && stream_has_enough_packets(is->video_st, is->video_stream, &is->videoq, MIN_FRAMES)
                    && stream_has_enough_packets(is->subtitle_st, is->subtitle_stream, &is->subtitleq, MIN_FRAMES)))) {
                if (!is->eof) {
                    ffp_toggle_buffering(ffp, 0);
                }
                /* wait 10 ms */
                SDL_LockMutex(wait_mutex);
                SDL_CondWaitTimeout(is->continue_read_thread, wait_mutex, 10);
                SDL_UnlockMutex(wait_mutex);
                continue;
            }
            // is->viddec.finished == is->videoq.serial means decode complete
            // frame_queue_nb_remaining(&is->videoq) == 0 means display complete
            bool audio_no_remaining = !is->audio_st || (is->auddec.finished == is->audioq.serial && frame_queue_nb_remaining(&is->sampq) == 0);
            bool video_no_remaining = !is->video_st || (is->viddec.finished == is->videoq.serial && frame_queue_nb_remaining(&is->pictq) == 0);
            bool eof = is->eof && video_no_remaining;
            bool will_completed = ((!is->paused || completed) && audio_no_remaining && video_no_remaining) || eof;

            // av_log(NULL, AV_LOG_INFO, "paused=%d,drag_to_end=%d,video_no_remain=%d,finished=%d,serial=%d\n",
            // is->paused, eof, video_no_remaining, is->viddec.finished, is->videoq.serial);
            if (will_completed) {
                if (ffp->loop != 1 && (!ffp->loop || --ffp->loop)) {
                    stream_seek(is, ffp->start_time != AV_NOPTS_VALUE ? ffp->start_time : 0, 0, 0);
                } else if (ffp->autoexit) {
                    ret = AVERROR_EOF;
                    goto fail;
                } else {
                    ffp_statistic_l(ffp);
                    if (completed) {
                        av_log(ffp, AV_LOG_INFO, "ffp_toggle_buffering: eof\n");
                        SDL_LockMutex(wait_mutex);
                        // infinite wait may block shutdown
                        while(!is->abort_request && !is->seek_req)
                            SDL_CondWaitTimeout(is->continue_read_thread, wait_mutex, 100);
                        SDL_UnlockMutex(wait_mutex);
                        if (!is->abort_request)
                            continue;
                    } else {
                        completed = 1;
                        ffp->auto_resume = 0;

                        // TODO: 0 it's a bit early to notify complete here
                        ffp_toggle_buffering(ffp, 0);
                        SDL_AoutFlushAudio(ffp->aout);
                        toggle_pause(ffp, 1);
                        if (ffp->error) {
                            av_log(ffp, AV_LOG_INFO, "ffp_toggle_buffering: error: %d\n", ffp->error);
                            char error_msg[AV_ERROR_MAX_STRING_SIZE];
                            av_strerror(ffp->error, error_msg, AV_ERROR_MAX_STRING_SIZE);
                            ffp_notify_msg4(ffp, FFP_MSG_ERROR, ffp->error, 0, error_msg, AV_ERROR_MAX_STRING_SIZE);
                        } else {
                            if (ffp->enable_position_notify) {
                                int64_t position = ffp_get_duration_l(ffp);
                                ffp_notify_msg2(ffp, FFP_MSG_CURRENT_POSITION_UPDATE, (int) position);
                            }
                            ALOGW("msg complete, duration %ld. position %ld \n", ffp_get_duration_l(ffp), ffp_get_current_position_l(ffp));
                            av_log(ffp, AV_LOG_INFO, "ffp_toggle_buffering: completed: OK\n");
                            ffp_notify_msg1(ffp, FFP_MSG_COMPLETED);
                        }
                    }
                }
            }
            pkt->flags = 0;
            ret = av_read_frame(ic, pkt);
            if (ret < 0) {
                int pb_eof = 0;
                int pb_error = 0;
                if ((ret == AVERROR_EOF || avio_feof(ic->pb)) && !is->eof) {
                    ffp_check_buffering_l(ffp);
                    pb_eof = 1;
                    // check error later
                }
                if (ic->pb && ic->pb->error) {
                    pb_eof = 1;
                    pb_error = ic->pb->error;
                }
                if (ret == AVERROR_EXIT) {
                    pb_eof = 1;
                    pb_error = AVERROR_EXIT;
                }

                if (pb_eof) {
                    if (is->video_stream >= 0)
                        packet_queue_put_nullpacket(&is->videoq, is->video_stream);
                    if (is->audio_stream >= 0)
                        packet_queue_put_nullpacket(&is->audioq, is->audio_stream);
                    if (is->subtitle_stream >= 0)
                        packet_queue_put_nullpacket(&is->subtitleq, is->subtitle_stream);
                    is->eof = 1;
                }
                if (pb_error) {
                    if (is->video_stream >= 0)
                        packet_queue_put_nullpacket(&is->videoq, is->video_stream);
                    if (is->audio_stream >= 0)
                        packet_queue_put_nullpacket(&is->audioq, is->audio_stream);
                    if (is->subtitle_stream >= 0)
                        packet_queue_put_nullpacket(&is->subtitleq, is->subtitle_stream);
                    is->eof = 1;
                    ffp->error = pb_error;
                    av_log(ffp, AV_LOG_ERROR, "av_read_frame error: %s\n", ffp_get_error_string(ffp->error));
                    // break;
                } else {
                    ffp->error = 0;
                }
                if (is->eof) {
                    ffp_toggle_buffering(ffp, 0);
                    SDL_Delay(100);
                }
                SDL_LockMutex(wait_mutex);
                SDL_CondWaitTimeout(is->continue_read_thread, wait_mutex, 10);
                SDL_UnlockMutex(wait_mutex);
                ffp_statistic_l(ffp);
                continue;
            } else {
                is->eof = 0;
            }

            if (pkt->flags & AV_PKT_FLAG_DISCONTINUITY) {
                if (is->audio_stream >= 0) {
                    packet_queue_put(&is->audioq, &flush_pkt);
                }
                if (is->subtitle_stream >= 0) {
                    packet_queue_put(&is->subtitleq, &flush_pkt);
                }
                if (is->video_stream >= 0) {
                    packet_queue_put(&is->videoq, &flush_pkt);
                }
            }

            // Check and handle the cached duration every time a pkt is read
            // TODO: optimize; this does not need to be called every time
            if (is->max_cached_duration > 0) {
                control_queue_duration(ffp, is);
            }

            /* check if packet is in play range specified by user, then queue, otherwise discard */
            stream_start_time = ic->streams[pkt->stream_index]->start_time;
            pkt_ts = pkt->pts == AV_NOPTS_VALUE ? pkt->dts : pkt->pts;
            pkt_in_play_range = ffp->duration == AV_NOPTS_VALUE ||
                    (pkt_ts - (stream_start_time != AV_NOPTS_VALUE ? stream_start_time : 0)) *
                    av_q2d(ic->streams[pkt->stream_index]->time_base) -
                    (double)(ffp->start_time != AV_NOPTS_VALUE ? ffp->start_time : 0) / 1000000
                    <= ((double)ffp->duration / 1000000);
            if (pkt->stream_index == is->audio_stream && pkt_in_play_range) {
                packet_queue_put(&is->audioq, pkt);
            } else if (pkt->stream_index == is->video_stream && pkt_in_play_range
                       && !(is->video_st && (is->video_st->disposition & AV_DISPOSITION_ATTACHED_PIC))) {
                packet_queue_put(&is->videoq, pkt);
            } else if (pkt->stream_index == is->subtitle_stream && pkt_in_play_range) {
                packet_queue_put(&is->subtitleq, pkt);
            } else {
                av_packet_unref(pkt);
            }

            ffp_statistic_l(ffp);

            if (ffp->ijkmeta_delay_init && !init_ijkmeta &&
                (ffp->first_video_frame_rendered || !is->video_st) && (ffp->first_audio_frame_rendered || !is->audio_st)) {
                ijkmeta_set_avformat_context_l(ffp->meta, ic);
                init_ijkmeta = 1;
            }

            if (ffp->packet_buffering) {
                io_tick_counter = SDL_GetTickHR();
                if ((!ffp->first_video_frame_rendered && is->video_st) || (!ffp->first_audio_frame_rendered && is->audio_st)) {
                    if (abs((int)(io_tick_counter - prev_io_tick_counter)) > FAST_BUFFERING_CHECK_PER_MILLISECONDS) {
                        prev_io_tick_counter = io_tick_counter;
                        ffp->dcc.current_high_water_mark_in_ms = ffp->dcc.first_high_water_mark_in_ms;
                        ffp_check_buffering_l(ffp);
                    }
                } else {
                    if (abs((int)(io_tick_counter - prev_io_tick_counter)) > BUFFERING_CHECK_PER_MILLISECONDS) {
                        prev_io_tick_counter = io_tick_counter;
                        ffp_check_buffering_l(ffp);
                    }
                }
            }
        }

        ret = 0;
    fail:
        if (ic && !is->ic)
            avformat_close_input(&ic);

        if (!ffp->prepared || !is->abort_request) {
            ffp->last_error = last_error;
            char error_msg[AV_ERROR_MAX_STRING_SIZE];
            av_strerror(ffp->last_error, error_msg, AV_ERROR_MAX_STRING_SIZE);
            ffp_notify_msg4(ffp, FFP_MSG_ERROR, ffp->last_error, 0, error_msg, AV_ERROR_MAX_STRING_SIZE);
        }
        SDL_DestroyMutex(wait_mutex);
        return 0;
    }

First, FFmpeg's libavformat APIs are called to handle the protocol, demux the container, and find the stream information:

avformat_alloc_context() => avformat_open_input(&ic, is->filename, is->iformat, &ffp->format_opts) => avformat_find_stream_info => av_find_best_stream

Then, once the video / audio / subtitle stream indices have been found, stream_component_open is called to create the corresponding decoders:

/* open a given stream. Return 0 if OK */
static int stream_component_open(FFPlayer *ffp, int stream_index)
{
    VideoState *is = ffp->is;
    AVFormatContext *ic = is->ic;
    AVCodecContext *avctx;
    AVCodec *codec = NULL;
    const char *forced_codec_name = NULL;
    AVDictionary *opts = NULL;
    AVDictionaryEntry *t = NULL;
    int sample_rate, nb_channels;
    int64_t channel_layout;
    int ret = 0;
    int stream_lowres = ffp->lowres;

    if (stream_index < 0 || stream_index >= ic->nb_streams)
        return -1;
    avctx = avcodec_alloc_context3(NULL);
    if (!avctx)
        return AVERROR(ENOMEM);

    ret = avcodec_parameters_to_context(avctx, ic->streams[stream_index]->codecpar);
    if (ret < 0)
        goto fail;
    av_codec_set_pkt_timebase(avctx, ic->streams[stream_index]->time_base);

    codec = avcodec_find_decoder(avctx->codec_id);

    switch (avctx->codec_type) {
        case AVMEDIA_TYPE_AUDIO   : is->last_audio_stream    = stream_index; forced_codec_name = ffp->audio_codec_name; break;
        case AVMEDIA_TYPE_SUBTITLE: is->last_subtitle_stream = stream_index; forced_codec_name = ffp->subtitle_codec_name; break;
        case AVMEDIA_TYPE_VIDEO   : is->last_video_stream    = stream_index; forced_codec_name = ffp->video_codec_name; break;
        default: break;
    }
    if (forced_codec_name)
        codec = avcodec_find_decoder_by_name(forced_codec_name);
    if (!codec) {
        if (forced_codec_name)
            av_log(NULL, AV_LOG_WARNING,
                   "No codec could be found with name '%s'\n", forced_codec_name);
        else
            av_log(NULL, AV_LOG_WARNING,
                   "No codec could be found with id %d\n", avctx->codec_id);
        ret = AVERROR(EINVAL);
        goto fail;
    }

    avctx->codec_id = codec->id;
    if (stream_lowres > av_codec_get_max_lowres(codec)) {
        av_log(avctx, AV_LOG_WARNING, "The maximum value for lowres supported by the decoder is %d\n",
               av_codec_get_max_lowres(codec));
        stream_lowres = av_codec_get_max_lowres(codec);
    }
    av_codec_set_lowres(avctx, stream_lowres);

#if FF_API_EMU_EDGE
    if (stream_lowres) avctx->flags |= CODEC_FLAG_EMU_EDGE;
#endif
    if (ffp->fast)
        avctx->flags2 |= AV_CODEC_FLAG2_FAST;
#if FF_API_EMU_EDGE
    if (codec->capabilities & AV_CODEC_CAP_DR1)
        avctx->flags |= CODEC_FLAG_EMU_EDGE;
#endif

    opts = filter_codec_opts(ffp->codec_opts, avctx->codec_id, ic, ic->streams[stream_index], codec);
    if (!av_dict_get(opts, "threads", NULL, 0))
        av_dict_set(&opts, "threads", "auto", 0);
    if (stream_lowres)
        av_dict_set_int(&opts, "lowres", stream_lowres, 0);
    if (avctx->codec_type == AVMEDIA_TYPE_VIDEO || avctx->codec_type == AVMEDIA_TYPE_AUDIO)
        av_dict_set(&opts, "refcounted_frames", "1", 0);
    if ((ret = avcodec_open2(avctx, codec, &opts)) < 0) {
        goto fail;
    }
    if ((t = av_dict_get(opts, "", NULL, AV_DICT_IGNORE_SUFFIX))) {
        av_log(NULL, AV_LOG_ERROR, "Option %s not found.\n", t->key);
#ifdef FFP_MERGE
        ret = AVERROR_OPTION_NOT_FOUND;
        goto fail;
#endif
    }

    is->eof = 0;
    ic->streams[stream_index]->discard = AVDISCARD_DEFAULT;
    switch (avctx->codec_type) {
        case AVMEDIA_TYPE_AUDIO:
#if CONFIG_AVFILTER
            {
                AVFilterContext *sink;

                is->audio_filter_src.freq           = avctx->sample_rate;
                is->audio_filter_src.channels       = avctx->channels;
                is->audio_filter_src.channel_layout = get_valid_channel_layout(avctx->channel_layout, avctx->channels);
                is->audio_filter_src.fmt            = avctx->sample_fmt;
                SDL_LockMutex(ffp->af_mutex);
                if ((ret = configure_audio_filters(ffp, ffp->afilters, 0)) < 0) {
                    SDL_UnlockMutex(ffp->af_mutex);
                    goto fail;
                }
                ffp->af_changed = 0;
                SDL_UnlockMutex(ffp->af_mutex);
                sink = is->out_audio_filter;
                sample_rate    = av_buffersink_get_sample_rate(sink);
                nb_channels    = av_buffersink_get_channels(sink);
                channel_layout = av_buffersink_get_channel_layout(sink);
            }
#else
            sample_rate    = avctx->sample_rate;
            nb_channels    = avctx->channels;
            channel_layout = avctx->channel_layout;
#endif

            /* prepare audio output */
            if ((ret = audio_open(ffp, channel_layout, nb_channels, sample_rate, &is->audio_tgt)) < 0)
                goto fail;
            ffp_set_audio_codec_info(ffp, AVCODEC_MODULE_NAME, avcodec_get_name(avctx->codec_id));
            is->audio_hw_buf_size = ret;
            is->audio_src = is->audio_tgt;
            is->audio_buf_size  = 0;
            is->audio_buf_index = 0;

            /* init averaging filter */
            is->audio_diff_avg_coef  = exp(log(0.01) / AUDIO_DIFF_AVG_NB);
            is->audio_diff_avg_count = 0;
            /* since we do not have a precise enough audio FIFO fullness,
               we correct audio sync only if larger than this threshold */
            is->audio_diff_threshold = 2.0 * is->audio_hw_buf_size / is->audio_tgt.bytes_per_sec;

            is->audio_stream = stream_index;
            is->audio_st = ic->streams[stream_index];

            decoder_init(&is->auddec, avctx, &is->audioq, is->continue_read_thread);
            if ((is->ic->iformat->flags & (AVFMT_NOBINSEARCH | AVFMT_NOGENSEARCH | AVFMT_NO_BYTE_SEEK)) && !is->ic->iformat->read_seek) {
                is->auddec.start_pts = is->audio_st->start_time;
                is->auddec.start_pts_tb = is->audio_st->time_base;
            }
            if ((ret = decoder_start(&is->auddec, audio_thread, ffp, "ff_audio_dec")) < 0)
                goto out;
            if (!is->paused && !ffp->cover_after_prepared)
                SDL_AoutPauseAudio(ffp->aout, 0);
            break;
        case AVMEDIA_TYPE_VIDEO:
            is->video_stream = stream_index;
            is->video_st = ic->streams[stream_index];

            if (ffp->async_init_decoder) {
                while (!is->initialized_decoder) {
                    SDL_Delay(5);
                }
                if (ffp->node_vdec) {
                    is->viddec.avctx = avctx;
                    ret = ffpipeline_config_video_decoder(ffp->pipeline, ffp);
                }
                if (ret || !ffp->node_vdec) {
                    decoder_init(&is->viddec, avctx, &is->videoq, is->continue_read_thread);
                    ffp->node_vdec = ffpipeline_open_video_decoder(ffp->pipeline, ffp);
                    if (!ffp->node_vdec)
                        goto fail;
                }
            } else {
                decoder_init(&is->viddec, avctx, &is->videoq, is->continue_read_thread);
                ffp->node_vdec = ffpipeline_open_video_decoder(ffp->pipeline, ffp);
                if (!ffp->node_vdec)
                    goto fail;
            }
            if ((ret = decoder_start(&is->viddec, video_thread, ffp, "ff_video_dec")) < 0)
                goto out;

            is->queue_attachments_req = 1;

            if (ffp->max_fps >= 0) {
                if (is->video_st->avg_frame_rate.den && is->video_st->avg_frame_rate.num) {
                    double fps = av_q2d(is->video_st->avg_frame_rate);
                    SDL_ProfilerReset(&is->viddec.decode_profiler, fps + 0.5);
                    if (fps > ffp->max_fps && fps < 130.0) {
                        is->is_video_high_fps = 1;
                        av_log(ffp, AV_LOG_WARNING, "fps: %lf (too high)\n", fps);
                    } else {
                        av_log(ffp, AV_LOG_WARNING, "fps: %lf (normal)\n", fps);
                    }
                }
                if (is->video_st->r_frame_rate.den && is->video_st->r_frame_rate.num) {
                    double tbr = av_q2d(is->video_st->r_frame_rate);
                    if (tbr > ffp->max_fps && tbr < 130.0) {
                        is->is_video_high_fps = 1;
                        av_log(ffp, AV_LOG_WARNING, "fps: %lf (too high)\n", tbr);
                    } else {
                        av_log(ffp, AV_LOG_WARNING, "fps: %lf (normal)\n", tbr);
                    }
                }
            }

            if (is->is_video_high_fps) {
                avctx->skip_frame       = FFMAX(avctx->skip_frame, AVDISCARD_NONREF);
                avctx->skip_loop_filter = FFMAX(avctx->skip_loop_filter, AVDISCARD_NONREF);
                avctx->skip_idct        = FFMAX(avctx->skip_loop_filter, AVDISCARD_NONREF);
            }
            break;
        case AVMEDIA_TYPE_SUBTITLE:
            if (!ffp->subtitle) break;

            is->subtitle_stream = stream_index;
            is->subtitle_st = ic->streams[stream_index];

            ffp_set_subtitle_codec_info(ffp, AVCODEC_MODULE_NAME, avcodec_get_name(avctx->codec_id));

            decoder_init(&is->subdec, avctx, &is->subtitleq, is->continue_read_thread);
            if ((ret = decoder_start(&is->subdec, subtitle_thread, ffp, "ff_subtitle_dec")) < 0)
                goto out;
            break;
        default:
            break;
    }
    goto out;

fail:
    avcodec_free_context(&avctx);
out:
    av_dict_free(&opts);
    return ret;
}

This function is the key step: the audio / video / subtitle decoders and their decoding threads are all set up inside it.
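The decoder lookup at the top of stream_component_open can be sketched in isolation. The following is a minimal sketch under stated assumptions: ToyCodec, toy_registry, the "h264_hw" name and the toy_* functions are all hypothetical stand-ins, whereas the real code asks FFmpeg through avcodec_find_decoder and avcodec_find_decoder_by_name. It only illustrates the ordering seen in the listing above: look up by codec id first, then let a forced codec name replace that result.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical toy registry; real code queries FFmpeg via
 * avcodec_find_decoder() and avcodec_find_decoder_by_name(). */
typedef struct {
    int         id;
    const char *name;
} ToyCodec;

static const ToyCodec toy_registry[] = {
    { 1, "h264"    },
    { 1, "h264_hw" },   /* second decoder for the same id (made-up name) */
    { 2, "aac"     },
};
#define TOY_REGISTRY_LEN (sizeof(toy_registry) / sizeof(toy_registry[0]))

/* Returns the first registered decoder with the given id, or NULL. */
static const ToyCodec *toy_find_by_id(int id)
{
    for (size_t i = 0; i < TOY_REGISTRY_LEN; i++)
        if (toy_registry[i].id == id)
            return &toy_registry[i];
    return NULL;
}

/* Returns the decoder with exactly this name, or NULL. */
static const ToyCodec *toy_find_by_name(const char *name)
{
    assert(name);
    for (size_t i = 0; i < TOY_REGISTRY_LEN; i++)
        if (strcmp(toy_registry[i].name, name) == 0)
            return &toy_registry[i];
    return NULL;
}

/* Same order as in stream_component_open: look up by id first, then let a
 * forced name replace that result, even when the forced lookup fails. */
static const ToyCodec *toy_select_decoder(int codec_id, const char *forced_name)
{
    const ToyCodec *codec = toy_find_by_id(codec_id);
    if (forced_name)
        codec = toy_find_by_name(forced_name);
    return codec;   /* NULL corresponds to "No codec could be found" */
}
```

Note that a forced name that does not resolve leaves no decoder at all, even if the id lookup had succeeded; in the listing this is the path that logs "No codec could be found with name" and returns AVERROR(EINVAL).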

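First, the decoder for the audio, video, or subtitle stream is located:

avcodec_alloc_context3(NULL) => avcodec_parameters_to_context(avctx, ic->streams[stream_index]->codecpar) => av_codec_set_pkt_timebase(avctx, ic->streams[stream_index]->time_base) => avcodec_find_decoder(avctx->codec_id)

Open audio and create the decoding thread

If there is an audio stream, then once its decoder has been found, the audio output is opened and initialized, after which the decoder is initialized and its decoding thread is created: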

switch (avctx->codec_type) {
    case AVMEDIA_TYPE_AUDIO:
#if CONFIG_AVFILTER
        {
            AVFilterContext *sink;

            is->audio_filter_src.freq           = avctx->sample_rate;
            is->audio_filter_src.channels       = avctx->channels;
            is->audio_filter_src.channel_layout = get_valid_channel_layout(avctx->channel_layout, avctx->channels);
            is->audio_filter_src.fmt            = avctx->sample_fmt;
            SDL_LockMutex(ffp->af_mutex);
            if ((ret = configure_audio_filters(ffp, ffp->afilters, 0)) < 0) {
                SDL_UnlockMutex(ffp->af_mutex);
                goto fail;
            }
            ffp->af_changed = 0;
            SDL_UnlockMutex(ffp->af_mutex);
            sink = is->out_audio_filter;
            sample_rate    = av_buffersink_get_sample_rate(sink);
            nb_channels    = av_buffersink_get_channels(sink);
            channel_layout = av_buffersink_get_channel_layout(sink);
        }
#else
        sample_rate    = avctx->sample_rate;
        nb_channels    = avctx->channels;
        channel_layout = avctx->channel_layout;
#endif

        /* prepare audio output */
        if ((ret = audio_open(ffp, channel_layout, nb_channels, sample_rate, &is->audio_tgt)) < 0)
            goto fail;
        ffp_set_audio_codec_info(ffp, AVCODEC_MODULE_NAME, avcodec_get_name(avctx->codec_id));
        is->audio_hw_buf_size = ret;
        is->audio_src = is->audio_tgt;
        is->audio_buf_size  = 0;
        is->audio_buf_index = 0;

        /* init averaging filter */
        is->audio_diff_avg_coef  = exp(log(0.01) / AUDIO_DIFF_AVG_NB);
        is->audio_diff_avg_count = 0;
        /* since we do not have a precise enough audio FIFO fullness,
           we correct audio sync only if larger than this threshold */
        is->audio_diff_threshold = 2.0 * is->audio_hw_buf_size / is->audio_tgt.bytes_per_sec;

        is->audio_stream = stream_index;
        is->audio_st = ic->streams[stream_index];

        decoder_init(&is->auddec, avctx, &is->audioq, is->continue_read_thread);
        if ((is->ic->iformat->flags & (AVFMT_NOBINSEARCH | AVFMT_NOGENSEARCH | AVFMT_NO_BYTE_SEEK)) && !is->ic->iformat->read_seek) {
            is->auddec.start_pts = is->audio_st->start_time;
            is->auddec.start_pts_tb = is->audio_st->time_base;
        }
        if ((ret = decoder_start(&is->auddec, audio_thread, ffp, "ff_audio_dec")) < 0)
            goto out;
        if (!is->paused && !ffp->cover_after_prepared)
            SDL_AoutPauseAudio(ffp->aout, 0);
        break;

audio_open

static int audio_open(FFPlayer *opaque, int64_t wanted_channel_layout, int wanted_nb_channels, int wanted_sample_rate, struct AudioParams *audio_hw_params)
{
    FFPlayer *ffp = opaque;
    VideoState *is = ffp->is;
    SDL_AudioSpec wanted_spec, spec;
    const char *env;
    static const int next_nb_channels[] = {0, 0, 1, 6, 2, 6, 4, 6};
#ifdef FFP_MERGE
    static const int next_sample_rates[] = {0, 44100, 48000, 96000, 192000};
#endif
    static const int next_sample_rates[] = {0, 44100, 48000};
    int next_sample_rate_idx = FF_ARRAY_ELEMS(next_sample_rates) - 1;

    env = SDL_getenv("SDL_AUDIO_CHANNELS");
    if (env) {
        wanted_nb_channels = atoi(env);
        wanted_channel_layout = av_get_default_channel_layout(wanted_nb_channels);
    }
    if (!wanted_channel_layout || wanted_nb_channels != av_get_channel_layout_nb_channels(wanted_channel_layout)) {
        wanted_channel_layout = av_get_default_channel_layout(wanted_nb_channels);
        wanted_channel_layout &= ~AV_CH_LAYOUT_STEREO_DOWNMIX;
    }
    wanted_nb_channels = av_get_channel_layout_nb_channels(wanted_channel_layout);
    wanted_spec.channels = wanted_nb_channels;
    wanted_spec.freq = wanted_sample_rate;
    if (wanted_spec.freq <= 0 || wanted_spec.channels <= 0) {
        av_log(NULL, AV_LOG_ERROR, "Invalid sample rate or channel count!\n");
        return -1;
    }
    while (next_sample_rate_idx && next_sample_rates[next_sample_rate_idx] >= wanted_spec.freq)
        next_sample_rate_idx--;
    wanted_spec.format = AUDIO_S16SYS;
    wanted_spec.silence = 0;
    wanted_spec.samples = FFMAX(SDL_AUDIO_MIN_BUFFER_SIZE, 2 << av_log2(wanted_spec.freq / SDL_AoutGetAudioPerSecondCallBacks(ffp->aout)));
    wanted_spec.callback = sdl_audio_callback;
    wanted_spec.userdata = opaque;
    while (SDL_AoutOpenAudio(ffp->aout, &wanted_spec, &spec) < 0) {
        /* avoid infinity loop on exit. --by bbcallen */
        if (is->abort_request)
            return -1;
        av_log(NULL, AV_LOG_WARNING, "SDL_OpenAudio (%d channels, %d Hz): %s\n",
               wanted_spec.channels, wanted_spec.freq, SDL_GetError());
        wanted_spec.channels = next_nb_channels[FFMIN(7, wanted_spec.channels)];
        if (!wanted_spec.channels) {
            wanted_spec.freq = next_sample_rates[next_sample_rate_idx--];
            wanted_spec.channels = wanted_nb_channels;
            if (!wanted_spec.freq) {
                av_log(NULL, AV_LOG_ERROR,
                       "No more combinations to try, audio open failed\n");
                return -1;
            }
        }
        wanted_channel_layout = av_get_default_channel_layout(wanted_spec.channels);
    }
    if (spec.format != AUDIO_S16SYS) {
        av_log(NULL, AV_LOG_ERROR,
               "SDL advised audio format %d is not supported!\n", spec.format);
        return -1;
    }
    if (spec.channels != wanted_spec.channels) {
        wanted_channel_layout = av_get_default_channel_layout(spec.channels);
        if (!wanted_channel_layout) {
            av_log(NULL, AV_LOG_ERROR,
                   "SDL advised channel count %d is not supported!\n", spec.channels);
            return -1;
        }
    }

    audio_hw_params->fmt = AV_SAMPLE_FMT_S16;
    audio_hw_params->freq = spec.freq;
    audio_hw_params->channel_layout = wanted_channel_layout;
    audio_hw_params->channels = spec.channels;
    audio_hw_params->frame_size = av_samples_get_buffer_size(NULL, audio_hw_params->channels, 1, audio_hw_params->fmt, 1);
    audio_hw_params->bytes_per_sec = av_samples_get_buffer_size(NULL, audio_hw_params->channels, audio_hw_params->freq, audio_hw_params->fmt, 1);
    if (audio_hw_params->bytes_per_sec <= 0 || audio_hw_params->frame_size <= 0) {
        av_log(NULL, AV_LOG_ERROR, "av_samples_get_buffer_size failed\n");
        return -1;
    }

    SDL_AoutSetDefaultLatencySeconds(ffp->aout, ((double)(2 * spec.size)) / audio_hw_params->bytes_per_sec);
    return spec.size;
}

audio_open mainly calls the relevant SDL interfaces and registers the sdl_audio_callback callback, through which the audio device pulls PCM data for playback. It is not covered in detail here.
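Two pieces of audio_open are easy to miss in the listing: how the buffer size in samples is derived, and how the retry loop walks through channel counts and sample rates when the device refuses a combination. The sketch below reproduces just that arithmetic and control flow. It is a simplified illustration, not the player's code: ToyOpenFn, ToySpec and the two toy devices are hypothetical, toy_log2 stands in for av_log2, and the abort_request check is left out.

```c
#include <assert.h>

/* Floor of log2(v), standing in for FFmpeg's av_log2(); v must be > 0. */
static int toy_log2(unsigned v)
{
    int n = 0;
    assert(v > 0);
    while (v >>= 1)
        n++;
    return n;
}

/* Buffer size in samples: FFMAX(min_size, 2 << av_log2(freq / callbacks)). */
static int toy_buffer_samples(int freq, int callbacks_per_sec, int min_size)
{
    assert(freq > 0 && callbacks_per_sec > 0 && freq >= callbacks_per_sec);
    int size = 2 << toy_log2((unsigned)(freq / callbacks_per_sec));
    return size > min_size ? size : min_size;
}

typedef int (*ToyOpenFn)(int channels, int freq);   /* returns 0 on success */
typedef struct { int channels; int freq; } ToySpec;

/* Hypothetical devices used to exercise the fallback loop. */
static int toy_stereo_44100_only(int ch, int freq)
{
    return (ch == 2 && freq == 44100) ? 0 : -1;
}
static int toy_never_opens(int ch, int freq)
{
    (void)ch; (void)freq;
    return -1;
}

/* Retry loop: step down the channel count first; when that is exhausted,
 * drop to the next lower sample rate and restart from the wanted channels. */
static int toy_audio_open(ToyOpenFn try_open, int wanted_channels,
                          int wanted_freq, ToySpec *out)
{
    static const int next_nb_channels[]  = {0, 0, 1, 6, 2, 6, 4, 6};
    static const int next_sample_rates[] = {0, 44100, 48000};
    int idx = (int)(sizeof(next_sample_rates) / sizeof(next_sample_rates[0])) - 1;
    int channels = wanted_channels, freq = wanted_freq;

    assert(try_open && out);
    if (channels <= 0 || freq <= 0)
        return -1;
    /* Skip fallback rates that are not lower than the wanted rate. */
    while (idx && next_sample_rates[idx] >= freq)
        idx--;

    while (try_open(channels, freq) != 0) {
        channels = next_nb_channels[channels < 7 ? channels : 7];
        if (!channels) {
            freq = next_sample_rates[idx--];
            channels = wanted_channels;
            if (!freq)
                return -1;   /* no more combinations to try */
        }
    }
    out->channels = channels;
    out->freq = freq;
    return 0;
}
```

With 44100 Hz, an assumed 30 callbacks per second and an assumed minimum of 512, toy_buffer_samples yields 2048 samples (44100 / 30 = 1470, floor(log2) = 10, 2 << 10 = 2048). Requesting 6 channels at 48000 Hz from toy_stereo_44100_only tries 6, 4, 2 and 1 channels at 48000 Hz, then falls back to 44100 Hz and succeeds at 2 channels.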

Next, the video and subtitle decoders are initialized and their decoding threads are created:

static void decoder_init(Decoder *d, AVCodecContext *avctx, PacketQueue *queue, SDL_cond *empty_queue_cond) {
    memset(d, 0, sizeof(Decoder));
    d->avctx = avctx;
    d->queue = queue;
    d->empty_queue_cond = empty_queue_cond;
    d->start_pts = AV_NOPTS_VALUE;
    d->first_frame_decoded_time = SDL_GetTickHR();
    d->first_frame_decoded = 0;
    SDL_ProfilerReset(&d->decode_profiler, -1);
}

decoder_start(&is->viddec, video_thread, ffp, "ff_video_dec");
decoder_start(&is->subdec, subtitle_thread, ffp, "ff_subtitle_dec");

start

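Once preparation is complete, the start method can be called to begin playback:

- (int) start
{
    return ijkmp_start(_nativeMediaPlayer);
}

This is in fact an asynchronous operation. It posts an FFP_REQ_START message to the MessageQueue; the msg loop thread then consumes that message and runs ffp_start_l (listed below), which un-pauses the player via toggle_pause(ffp, 0) so that streaming proceeds.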
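The post-then-consume pattern described above can be sketched with a tiny single-threaded queue. Everything here is hypothetical and simplified for illustration: ToyMsgQueue, ToyPlayer, the toy_* functions and the numeric message values are assumptions, and the real player uses a locked queue drained by a dedicated msg loop thread rather than an explicit drain call.

```c
#include <assert.h>

#define TOY_REQ_START 20001   /* assumed value, for illustration only */
#define TOY_REQ_PAUSE 20002   /* assumed value, for illustration only */
#define TOY_QUEUE_CAP 8

/* Fixed-size ring buffer of message ids. */
typedef struct {
    int msgs[TOY_QUEUE_CAP];
    int head;
    int count;
} ToyMsgQueue;

typedef struct {
    ToyMsgQueue q;
    int paused;
} ToyPlayer;

static void toy_player_init(ToyPlayer *p)
{
    assert(p);
    p->q.head = 0;
    p->q.count = 0;
    p->paused = 1;   /* prepared but not yet playing */
}

/* Appends a message; returns -1 when the queue is full. */
static int toy_post(ToyMsgQueue *q, int what)
{
    assert(q);
    if (q->count == TOY_QUEUE_CAP)
        return -1;
    q->msgs[(q->head + q->count) % TOY_QUEUE_CAP] = what;
    q->count++;
    return 0;
}

/* Removes the oldest message; returns 0 when the queue is empty. */
static int toy_take(ToyMsgQueue *q, int *what)
{
    assert(q && what);
    if (q->count == 0)
        return 0;
    *what = q->msgs[q->head];
    q->head = (q->head + 1) % TOY_QUEUE_CAP;
    q->count--;
    return 1;
}

/* API side: only queues the request and returns immediately. */
static int toy_start(ToyPlayer *p)
{
    assert(p);
    return toy_post(&p->q, TOY_REQ_START);
}

/* Loop side: handles every pending message; returns how many were handled. */
static int toy_msg_loop_drain(ToyPlayer *p)
{
    int what, handled = 0;
    assert(p);
    while (toy_take(&p->q, &what)) {
        switch (what) {
        case TOY_REQ_START: p->paused = 0; break;   /* like toggle_pause(ffp, 0) */
        case TOY_REQ_PAUSE: p->paused = 1; break;
        default: break;
        }
        handled++;
    }
    return handled;
}
```

The point of the split is that toy_start returns before the player state changes; paused only flips once the loop side has consumed the message.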

int ffp_start_l(FFPlayer *ffp)
{
    assert(ffp);
    VideoState *is = ffp->is;
    if (!is)
        return EIJK_NULL_IS_PTR;

    toggle_pause(ffp, 0);
    return 0;
}

stop

The stop method is the counterpart of start: it asks the player to quit.

ijkmp_stop => ijkmp_stop_l => ffp_stop_l => is->abort_request = 1 && toggle_pause(ffp, 1);

- (int) stop
{
    return ijkmp_stop(_nativeMediaPlayer);
}

int ffp_stop_l(FFPlayer *ffp)
{
    assert(ffp);
    VideoState *is = ffp->is;
    if (is) {
        is->abort_request = 1;
        toggle_pause(ffp, 1);
    }

    if (ffp->enable_accurate_seek && is && is->accurate_seek_mutex
        && is->audio_accurate_seek_cond && is->video_accurate_seek_cond) {
        SDL_LockMutex(is->accurate_seek_mutex);
        is->audio_accurate_seek_req = 0;
        is->video_accurate_seek_req = 0;
        SDL_CondSignal(is->audio_accurate_seek_cond);
        SDL_CondSignal(is->video_accurate_seek_cond);
        SDL_UnlockMutex(is->accurate_seek_mutex);
    }
    return 0;
}

shutdown

When playback is finished, ijkmp_shutdown is called to release the player's underlying resources:

void ijkmp_shutdown(IjkMediaPlayer *mp)
{
    return ijkmp_shutdown_l(mp);
}
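The division of labour between stop and shutdown can be pictured with a small model: stop only raises a flag that the worker loops poll, while shutdown is what actually gives resources back. This is a hedged sketch with hypothetical names (ToyState, toy_create, toy_worker_step, toy_stop, toy_shutdown). In particular, having toy_shutdown raise the abort flag itself is an assumption of this sketch, since the body of ijkmp_shutdown_l is not shown here.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct {
    int  abort_request;   /* set by stop, polled by worker loops */
    int  paused;
    int *frame_buf;       /* stands in for native resources */
} ToyState;

/* Allocates a paused player; returns NULL on allocation failure. */
static ToyState *toy_create(void)
{
    ToyState *s = calloc(1, sizeof(*s));
    if (!s)
        return NULL;
    s->frame_buf = calloc(16, sizeof(int));
    if (!s->frame_buf) {
        free(s);
        return NULL;
    }
    s->paused = 1;
    return s;
}

/* One iteration of a read/decode loop; returns 0 once the loop should exit. */
static int toy_worker_step(ToyState *s)
{
    assert(s);
    if (s->abort_request)
        return 0;
    if (!s->paused)
        s->frame_buf[0]++;   /* pretend one frame was produced */
    return 1;
}

/* Mirrors the first half of ffp_stop_l: request abort and pause. */
static void toy_stop(ToyState *s)
{
    if (s) {
        s->abort_request = 1;
        s->paused = 1;
    }
}

/* Releases everything and clears the caller's handle. */
static void toy_shutdown(ToyState **ps)
{
    if (!ps || !*ps)
        return;
    toy_stop(*ps);           /* assumption: make sure loops have been told to exit */
    free((*ps)->frame_buf);
    free(*ps);
    *ps = NULL;
}
```

A worker that keeps calling toy_worker_step sees a return value of 1 while playing and 0 right after toy_stop, which is the cue to leave its loop before toy_shutdown frees the buffers.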